⬤ Microsoft just hit a massive compute milestone at its Fairwater Atlanta facility. According to Epoch AI, the data center can now support more than 20 GPT-4-scale training runs every month, making it the largest AI training hub currently operational. This throughput jump shows how seriously Microsoft is scaling its infrastructure to support faster experimentation and development cycles. Capacity projections point toward continued expansion well into 2026.

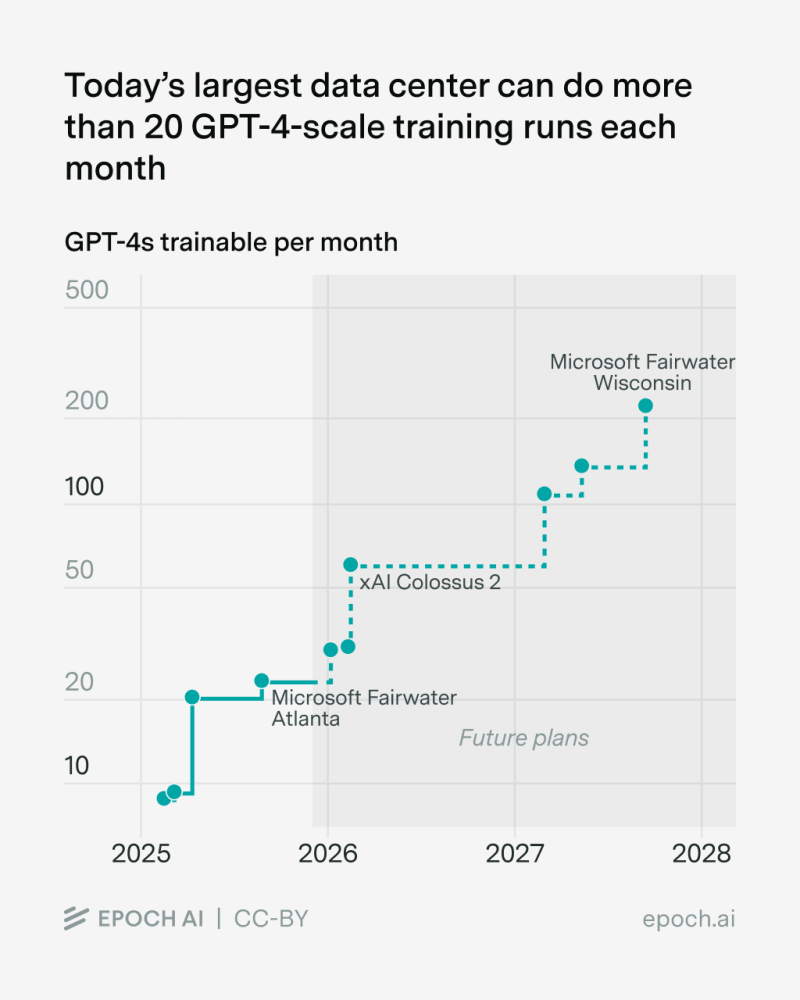

⬤ Epoch AI's data shows GPT-4-equivalent training capacity climbing steadily, with Fairwater Atlanta already crossing the 20-runs-per-month threshold in 2025. Upcoming expansions like Microsoft Fairwater Wisconsin and xAI's Colossus 2 could push monthly capacity into the hundreds by 2027. For MSFT, running 20+ large-scale training runs a month means shorter development cycles, more frequent model iterations, and stronger positioning in the AI research community.

⬤ The ability to run this many GPT-4-level experiments represents a fundamental shift in AI development economics. Training a single GPT-4-scale model demands enormous computational resources, so moving from a handful of runs per month to more than 20 lets teams test far more ideas at full scale in the same amount of time. Microsoft's Fairwater Atlanta investment gives the company room to handle growing enterprise AI demand while keeping pace with accelerating competition from other cloud providers and AI labs.
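
To put the 20-runs-per-month figure in perspective, here is a rough back-of-the-envelope sketch. It assumes roughly 2e25 FLOP per GPT-4-scale run, a commonly cited public estimate that does not come from this article, and a 30-day month. The function names are hypothetical and exist only for illustration.

```python
# Back-of-the-envelope conversion from "runs per month" to sustained compute.
# ASSUMPTION: ~2e25 FLOP per GPT-4-scale training run. This is a commonly
# cited public estimate, not a figure taken from the article.
ASSUMED_FLOP_PER_RUN = 2e25
SECONDS_PER_MONTH = 30 * 24 * 3600  # ASSUMPTION: 30-day month


def sustained_flop_per_second(runs_per_month, flop_per_run=ASSUMED_FLOP_PER_RUN):
    """Average effective FLOP/s needed to finish the given number of runs in a month."""
    return runs_per_month * flop_per_run / SECONDS_PER_MONTH


def days_per_run(runs_per_month, days_in_month=30):
    """Whole-facility days available per run if runs were done one after another."""
    return days_in_month / runs_per_month


if __name__ == "__main__":
    print(f"Sustained compute for 20 runs/month: {sustained_flop_per_second(20):.2e} FLOP/s")
    print(f"Facility time per run: {days_per_run(20)} days")
```

Under these assumptions, 20 runs a month works out to about 1.5 days of whole-facility time per GPT-4-scale run. Real utilization, the hardware mix, and the exact compute per run would all move the numbers, so treat this as an order-of-magnitude illustration only.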

⬤ This infrastructure buildout signals deeper changes in how major tech companies approach AI development. More compute means faster performance improvements, tighter competition among cloud platforms, and shifting capital allocation strategies across the sector. As MSFT ramps up training capacity, market focus will increasingly turn toward development speed, model quality improvements, and how well companies can scale their AI infrastructure to meet demand.

Saad Ullah
