⬤ Baidu Inc. has officially launched ERNIE 5.0, a new-generation AI model with native omni-modal capabilities. Built on an end-to-end architecture, ERNIE 5.0 unifies multimodal understanding and generation, processing text, images, audio, and video within a single framework. The model is now live on the ERNIE Bot website and available to enterprises and developers through Baidu AI Cloud's Qianfan Model Platform.

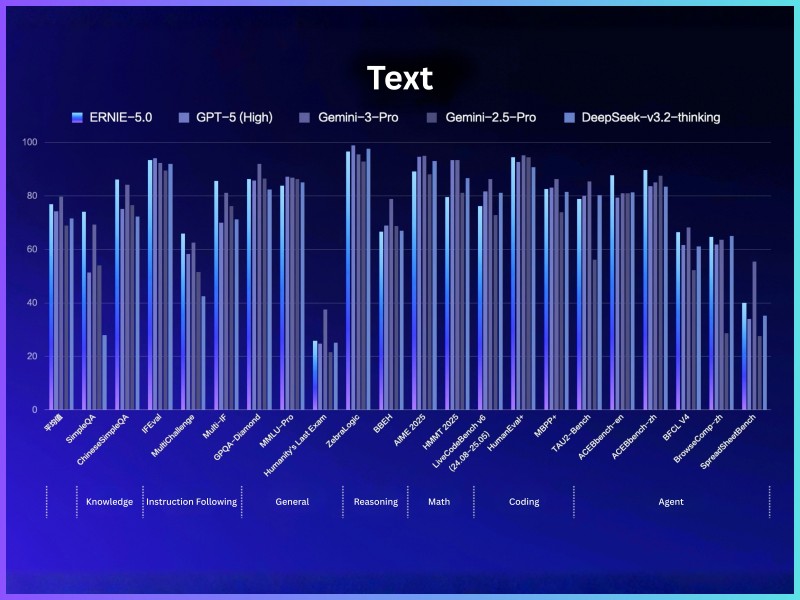

⬤ Benchmark data shows ERNIE 5.0 delivering strong results on text tasks covering knowledge, instruction following, general reasoning, mathematics, coding, and agent operations. The model is competitive with other advanced systems across multiple evaluation categories, pointing to balanced capability rather than narrow specialization in any single domain.

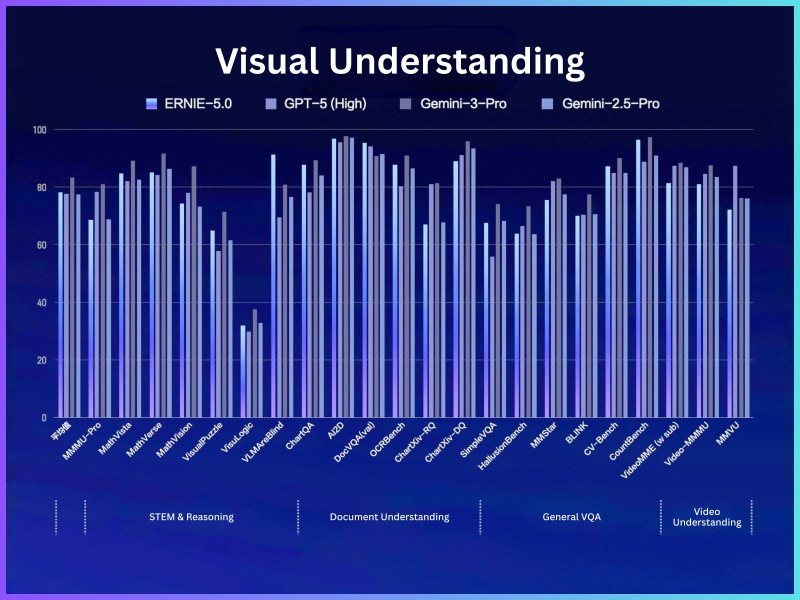

⬤ Visual benchmark results show solid performance in STEM reasoning, document understanding, general visual question answering, and video comprehension. The model is consistently strong across these different visual task types, suggesting that its image and video processing complements its text capabilities.

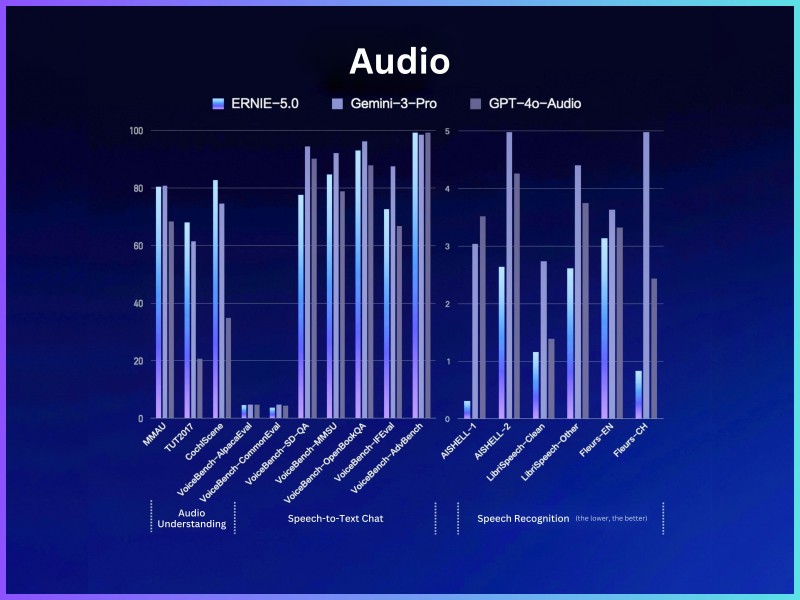

⬤ Audio-focused evaluations highlight strong performance in audio understanding, speech-to-text chat, and speech recognition. Under the hood, ERNIE 5.0 uses a 2.4-trillion-parameter mixture-of-experts (MoE) architecture that activates less than 3% of its parameters per inference. This sparse activation lets the model keep its large reasoning capacity while holding resource usage relatively low during operation.
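For a sense of scale, the two reported figures (2.4 trillion total parameters, less than 3% active per inference) imply an upper bound on how many parameters each inference actually uses. The snippet is plain back-of-envelope arithmetic on those numbers, not a description of Baidu's expert routing; the constant and function names are illustrative only.

```python
# Back-of-envelope arithmetic from the two figures reported for ERNIE 5.0.
# The exact activated-parameter count is not published here; this only derives a bound.

TOTAL_PARAMS = 2.4e12        # 2.4 trillion total parameters (reported)
MAX_ACTIVE_FRACTION = 0.03   # "less than 3%" activated per inference (reported)


def active_param_ceiling(total: float, fraction: float) -> float:
    """Return the upper bound on parameters used in one inference pass."""
    return total * fraction


ceiling = active_param_ceiling(TOTAL_PARAMS, MAX_ACTIVE_FRACTION)
idle_floor = TOTAL_PARAMS - ceiling  # minimum number of parameters left unused per pass

print(f"Active per inference: under {ceiling / 1e9:.0f} billion parameters")
print(f"Idle per inference:   over {idle_floor / 1e12:.3f} trillion parameters")
```

By this arithmetic, each inference touches fewer than roughly 72 billion parameters. In an MoE design, compute per inference scales with the active parameters rather than the total, although all 2.4 trillion parameters still have to be stored and served.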

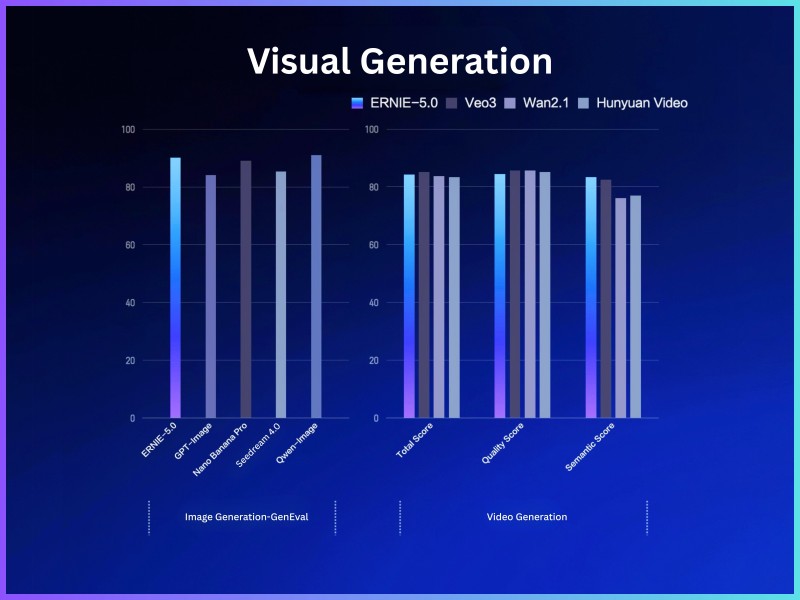

⬤ The ERNIE 5.0 release marks Baidu's push into scalable multimodal AI as competition heats up among large model providers. By focusing on unified multimodal processing and efficient inference, Baidu positions ERNIE 5.0 as a platform-ready solution for diverse real-world applications, reflecting the industry's shift toward AI systems that handle multiple data types while staying operationally lean.

Eseandre Mordi