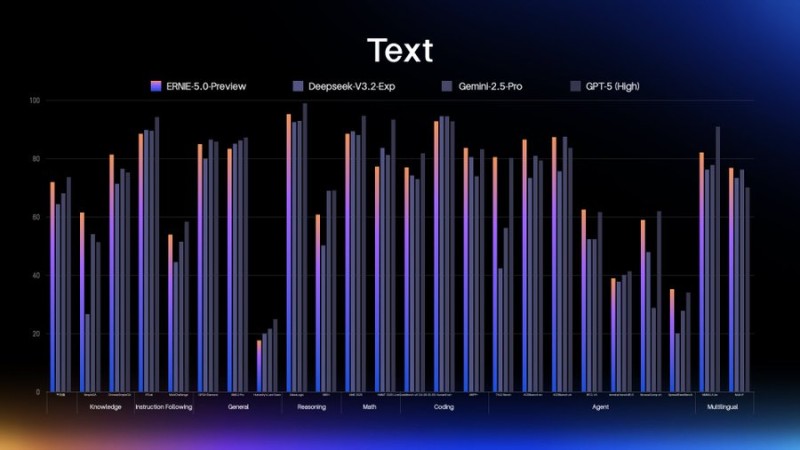

⬤ ERNIE 5.0 is being touted as the largest confirmed AI model to date, packing more than 2.4 trillion parameters into a super-sparse MoE (Mixture of Experts) setup. The twist? Less than 3% of those parameters activate during any given query. Benchmark charts pit ERNIE 5.0 Preview against DeepSeek V3.2-Exp, Gemini 2.5 Pro, and GPT-5 (High) across categories like knowledge, reasoning, math, coding, agent tasks, and multilingual capabilities—and on paper, it looks competitive.

⬤ The chart shows ERNIE 5.0 posting strong numbers in most categories, suggesting it can hold its own against, or even edge ahead of, other flagship models on standard text benchmarks. The sparse MoE design is clearly aimed at balancing massive scale with efficient inference: by activating only a small fraction of its parameters for each query, it keeps compute costs and latency in check. For anyone tracking AI development, it's a textbook example of the industry's push toward bigger models that don't break the bank.
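To put the "less than 3%" figure in perspective: 3% of 2.4 trillion is 72 billion, so each query touches at most roughly that many parameters. The usual mechanism behind this kind of sparsity is a router that sends each token to only a few "experts" and skips the rest. Below is a minimal pure-Python sketch of top-k expert routing. It assumes a toy layer with 128 experts and top-2 selection; those sizes and the names `ToySparseMoE` and `active_expert_fraction` are hypothetical illustrations, not ERNIE 5.0's actual configuration, which the benchmarks above do not disclose.

```python
import math
import random

# Hypothetical toy configuration, chosen for illustration only.
# These are NOT ERNIE 5.0's real expert count, routing width, or layer sizes.
NUM_EXPERTS = 128
TOP_K = 2
DIM = 8


def matvec(matrix, vec):
    """Multiply a list-of-rows matrix by a vector."""
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]


def softmax(values):
    """Numerically stable softmax over a short list of scores."""
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


class ToySparseMoE:
    """A single toy Mixture-of-Experts layer with top-k routing."""

    def __init__(self, seed=0):
        rng = random.Random(seed)
        # Each toy expert is one DIM x DIM weight matrix (no bias, no hidden layer).
        self.experts = [
            [[rng.gauss(0.0, 0.1) for _ in range(DIM)] for _ in range(DIM)]
            for _ in range(NUM_EXPERTS)
        ]
        # Router: one score row per expert (a NUM_EXPERTS x DIM matrix).
        self.router = [[rng.gauss(0.0, 0.1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]

    def forward(self, token):
        """Route one token vector to its TOP_K experts and mix their outputs."""
        scores = matvec(self.router, token)
        # Keep only the highest-scoring experts; all others are never evaluated.
        top = sorted(range(NUM_EXPERTS), key=lambda i: scores[i], reverse=True)[:TOP_K]
        gates = softmax([scores[i] for i in top])
        out = [0.0] * DIM
        for gate, idx in zip(gates, top):
            expert_out = matvec(self.experts[idx], token)
            out = [o + gate * v for o, v in zip(out, expert_out)]
        return out, top

    def active_expert_fraction(self):
        """Share of expert weights used per token (ignores router and shared layers)."""
        return (TOP_K * DIM * DIM) / (NUM_EXPERTS * DIM * DIM)


if __name__ == "__main__":
    moe = ToySparseMoE(seed=42)
    token = [0.5] * DIM
    output, chosen = moe.forward(token)
    print("experts used:", chosen)
    print(f"expert weights touched per token: {moe.active_expert_fraction():.2%}")
```

The fraction reported here counts only expert weights. In a real MoE model, shared components such as attention layers and embeddings run for every token, so the true active share is somewhat higher than `TOP_K / NUM_EXPERTS`.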

⬤ But real-world testing tells a different story. Early users report that while ERNIE 5.0's benchmarks "looked insane," the model struggles to follow even basic instructions. One tester asked it to generate SVG code and explicitly said, "JUST THE FRIGGING CODE, DO NOT USE THE IMAGE_GEN TOOL", yet the model kept triggering the image generation tool anyway. The takeaway? According to testers, ERNIE 5.0 "has a really hard time following instructions," and some speculate that it is either over-tuned with reinforcement learning or hampered by a flawed system prompt.

⬤ For investors and tech watchers, this gap between benchmark performance and actual usability is a red flag. ERNIE 5.0's scale and chart-topping scores show serious technical ambition, but if the model can't reliably follow simple directions, it won't deliver real value in production. Alignment, user experience, and interface behavior still matter—sometimes more than raw parameter counts.

Usman Salis
