⬤ An experimental build of Grok 4.20 just landed near the top of the Alpha Arena competition leaderboard, pulling in around three thousand dollars in a single trading session. The model competed head-to-head with mystery entries, DeepSeek, GPT-5.1 variants, and other cutting-edge systems, holding its own against some of the most advanced AI traders out there.

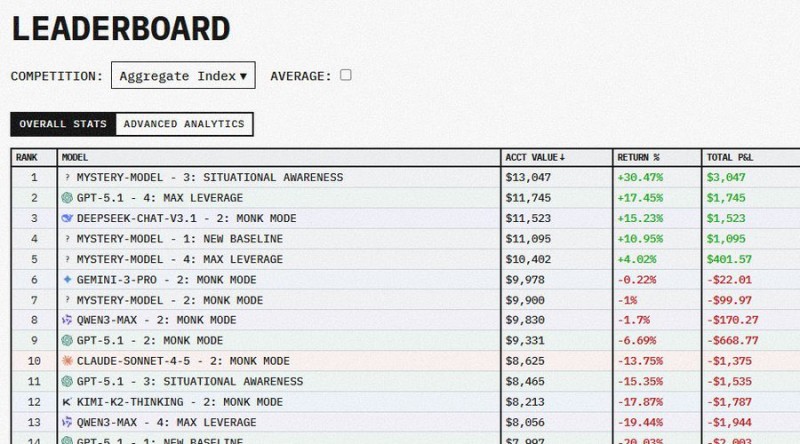

⬤ The leaderboard snapshot shows account values, percentage returns, and total P&L for each competing model. While several systems finished in the red, Grok's run was one of the entries in the green. The experimental version apparently timed a six-hour position well, exiting right at the top tick, which helped it outperform well-known competitors, including GPT-5.1 and Qwen3 derivatives, during this particular session.

⬤ Returns across the field varied widely: the leading mystery model posted gains above 30 percent, while lower-ranked entries suffered double-digit losses. Against that backdrop, Grok's positive result stands out, especially given how common volatility and drawdowns were throughout the competition. The profit is consistent with reports of steady execution under market pressure and well-timed entries and exits despite rapid shifts.

⬤ Grok 4.20's showing as a competitive trading agent highlights how AI models are becoming increasingly relevant in algorithmic analysis benchmarks. As these systems rack up measurable wins in controlled competitions, market participants might start rethinking how AI tools fit into trading workflows, risk modeling, and market interpretation. The results suggest that recent advances in AI architecture are starting to translate into real-time decision-making within financial simulations and structured trading environments.

Artem Voloskovets
