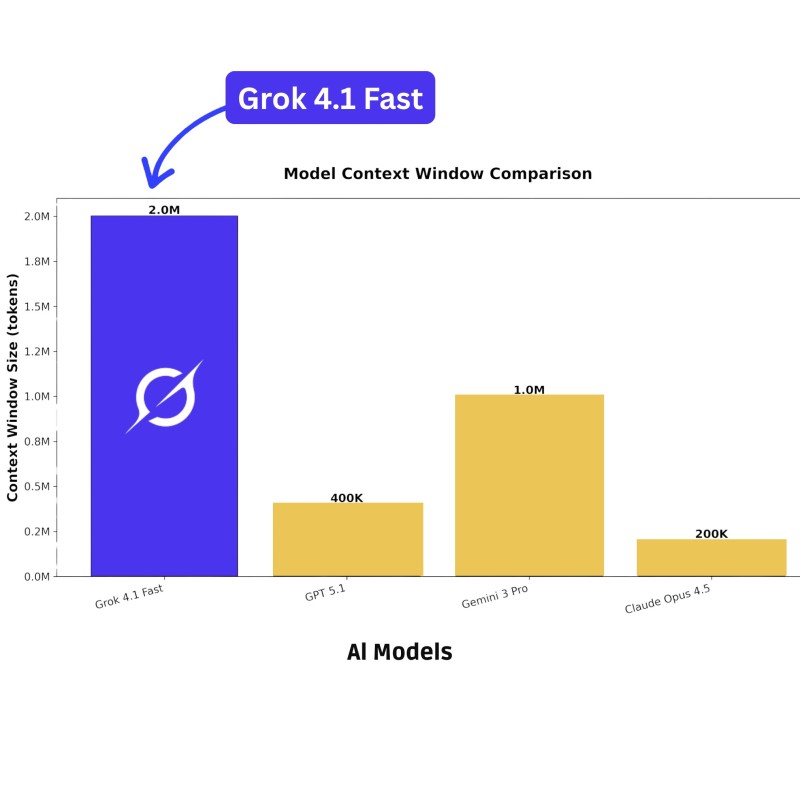

⬤ Grok 4.1 has rolled out with a 2-million-token context window, the largest among the flagship models compared here. With that capacity, developers can feed the system complete codebases, marathon conversations, and entire document libraries without breaking them into chunks. Against the competition (GPT 5.1 at 400,000 tokens, Gemini 3 Pro at 1 million, and Claude Opus 4.5 at 200,000), Grok 4.1's window is twice the size of its closest rival's.
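To make the comparison concrete, here is a minimal Python sketch that checks which of these windows could hold a given body of text in one pass. The window sizes are the figures quoted above. Everything else is an assumption for illustration: the 4-characters-per-token ratio is only a rough heuristic (a real tokenizer will give different counts), and `estimate_tokens` and `models_that_fit` are hypothetical helper names, not part of any vendor API.

```python
# Context-window sizes (in tokens) as quoted in this article.
CONTEXT_WINDOWS = {
    "Grok 4.1": 2_000_000,
    "Gemini 3 Pro": 1_000_000,
    "GPT 5.1": 400_000,
    "Claude Opus 4.5": 200_000,
}

# Assumption: roughly 4 characters per token. This is a heuristic only;
# actual counts depend on each model's tokenizer and on the content.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Return a rough token estimate for text (ceiling of len / 4)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def models_that_fit(total_tokens: int, reserve: int = 0) -> list[str]:
    """List models whose window holds the input plus a reserve for the reply."""
    return [
        name
        for name, window in CONTEXT_WINDOWS.items()
        if total_tokens + reserve <= window
    ]


if __name__ == "__main__":
    # Stand-in for about 6 million characters of source code and documents.
    corpus = "x" * 6_000_000
    tokens = estimate_tokens(corpus)
    print(tokens, models_that_fit(tokens))
```

Under these assumptions, a 6-million-character corpus comes to about 1.5 million estimated tokens, which fits only the 2-million-token window. A smaller corpus of around 150,000 tokens would fit all four. The `reserve` parameter is there because the window has to hold the model's reply as well as the input.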

⬤ The capability is credited to long-horizon reinforcement learning designed specifically for multi-turn interactions. This training method is meant to keep the model coherent across the full 2-million-token span, with consistent reasoning whether you're at token one or token two million. That stability opens the door to workflows that were previously impractical, such as reviewing dozens of code files at once or analyzing comprehensive reports without splitting them up.

⬤ This isn't just an incremental upgrade. Competing models max out between 200,000 and 1 million tokens, so Grok 4.1 doubles the largest window among them. For developers building complex systems, researchers analyzing massive datasets, and enterprises automating sophisticated workflows, this expansion could reshape how they choose and deploy AI tools.

⬤ Context capacity has become a defining battleground for next-gen AI systems, and Grok 4.1 has planted its flag at the peak. With the largest window in this comparison, it raises the ceiling on what users can accomplish in a single AI session.

Saad Ullah
