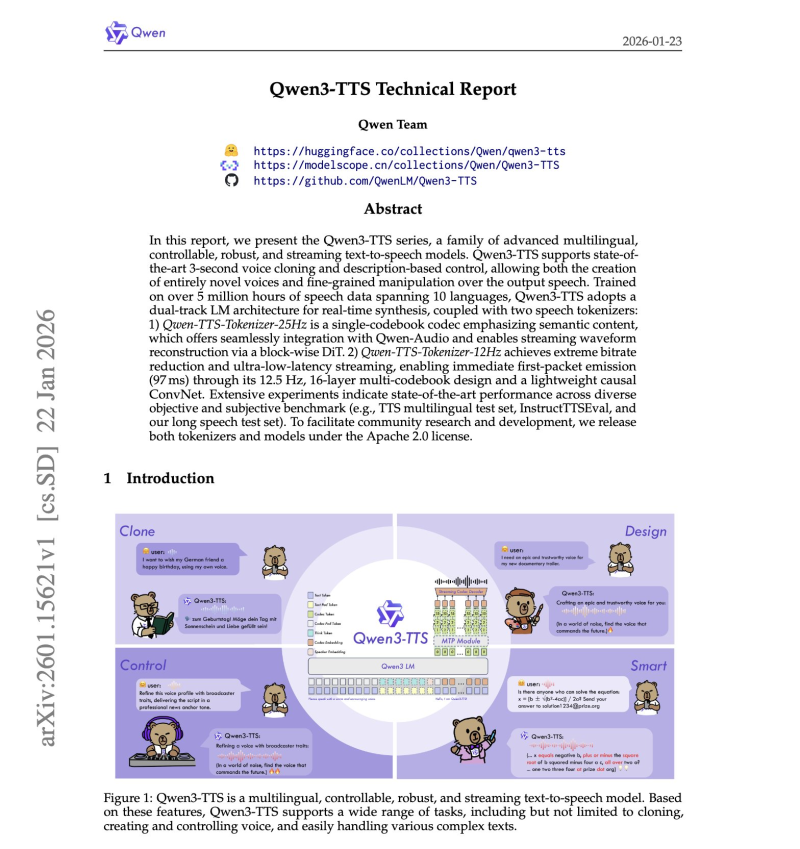

⬤ The Qwen Team has introduced Qwen3-TTS, a new open-source text-to-speech system. The release converts a Qwen3 language model into an instruction-steerable, real-time voice generation stack by jointly training a speaker encoder with a dual-track text and acoustic token backbone.

⬤ Qwen3-TTS supports real-time streaming, voice cloning, and fine-grained voice control through natural language descriptions. The system is trained on more than 5 million hours of speech data covering 10 languages. It uses a dual-track language model architecture optimized for low-latency generation, combined with two dedicated speech tokenizers—one prioritizing semantic content, the other focusing on bitrate reduction and streaming efficiency. The system can emit its first audio packet in approximately 97 milliseconds.

⬤ Internally, Qwen3-TTS applies hierarchical multi-token prediction across residual vector quantization codebooks, alongside a streaming decoding path based on sliding-window block attention. For audio generation, it employs a flow-matching diffusion transformer vocoder to produce mel spectrograms, which are then converted into waveforms using BigVGAN. Benchmark results indicate that Qwen3-TTS performs competitively on CosyVoice 3 and Seed-TTS evaluations while achieving top speaker similarity scores across all 10 languages in the multilingual test set.

⬤ This release is relevant for the broader AI landscape because open-source, low-latency text-to-speech systems expand access to advanced voice technology. Strong multilingual performance and real-time capabilities can support conversational agents, content creation tools, and interactive services. As demand grows for controllable and scalable AI voice solutions, developments like Qwen3-TTS may influence adoption trends and competition within the global speech synthesis ecosystem.

Eseandre Mordi

Eseandre Mordi

Eseandre Mordi

Eseandre Mordi