⬤ Essential AI rolled out Rnj-1 as an 8-billion-parameter model that comes in both base and instruction-tuned versions. The company built the system with a focus on scientific precision and computational efficiency, aiming to show that smart architecture choices can compete with models trained using massive compute budgets. Essential AI positioned the release as part of a push to advance American open-source AI development "at par with the best in the world," making transparency and accessibility core to its approach.

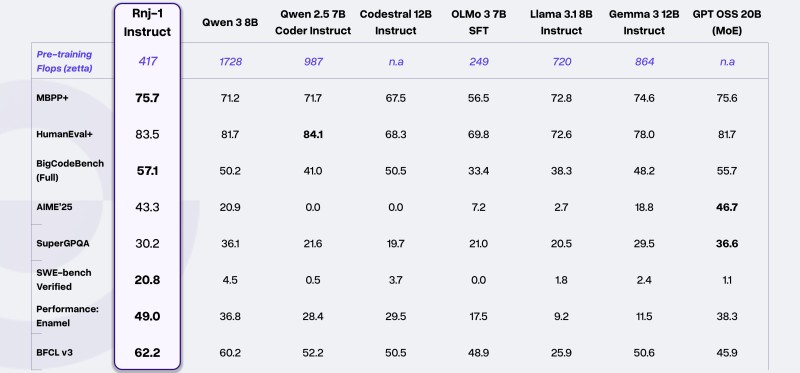

⬤ Rnj-1 delivers strong numbers across STEM, coding, and math benchmarks. The instruction model hits 75.7 on MBPP+, 83.5 on HumanEval+, and 57.1 on BigCodeBench, beating same-size peers such as Llama 3.1 8B while staying competitive with the larger Gemma 3 12B. On AIME'25, it scores 43.3, far ahead of most 7-12B models in the comparison set. The standout metric is a 20.8 percent result on SWE-bench Verified, where Rnj-1 outperforms Gemini 2.0 Flash and matches GPT OSS 20B despite being considerably smaller. Trained on 8.7 trillion tokens using a mix of TPU and AMD GPU infrastructure, the model demonstrates how quality data and thoughtful design can offset raw scaling.

⬤ When stacked against peers like Qwen 3 8B, Qwen 2.5 7B Coder, Codestral 12B, OLMo 3 7B, Llama 3.1 8B Instruct, Gemma 3 12B, and GPT OSS 20B MoE, Rnj-1 consistently ranks at or near the top in core coding and reasoning benchmarks. While some models lead in specific categories—Qwen 2.5 7B Coder hits 84.1 on HumanEval+ and Gemma 3 12B scores 29.5 on SuperGPQA—Rnj-1 delivers the strongest overall performance relative to its size, reflecting its efficiency-first design.

⬤ The launch reflects a broader trend toward smaller, highly capable open-source models that offer real alternatives to dominant closed-source systems. Rnj-1's ability to achieve competitive results with modest compute requirements could reshape enterprise adoption, cost-sensitive deployments, and competitive dynamics in AI. As compute cost becomes a critical constraint in both training and inference, Rnj-1 shows how purposeful engineering can reset expectations for what next-generation AI systems should deliver.

Eseandre Mordi
