⬤ Fresh benchmark data making waves in AI circles shows the DePaola-5 memory system crushing the decades-old Holographic Reduced Representations (HRR) approach in head-to-head testing. The new holographic transformer representation keeps retrieval rock-solid under the same dimensional limits where HRR falls apart.

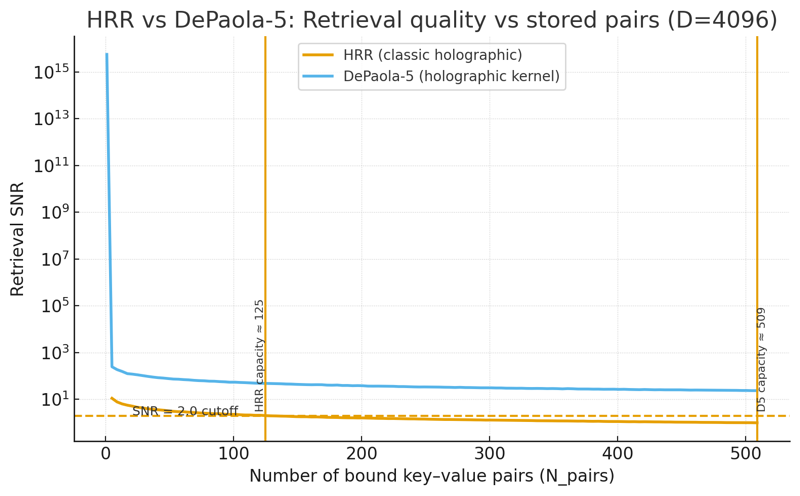

⬤ The comparison chart tells a striking story. HRR, which dates back to the early 1990s, starts degrading fast once you pack more than 100 key-value pairs into a single vector. By 125 items, it hits the SNR cutoff where you're basically pulling noise instead of useful data. Meanwhile, DePaola-5 stays strong across the entire test range and keeps performing cleanly beyond 500 stored bindings. Set against HRR's 125-item cutoff, that works out to roughly 4× the storage capacity, with signal quality staying about 10× better.

⬤ The gap comes down to how each system handles interference. HRR superimposes every bound key-value pair in one fixed-width vector, so each new pair adds crosstalk noise to all the others. That noise builds up too fast for the scheme to scale, a problem baked into its original design. DePaola-5 uses a holographic kernel built specifically for today's AI workloads, managing structured interference while keeping recall stable even when bindings get dense.
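
To make the HRR side of that comparison concrete, here is a minimal sketch of classic HRR binding and retrieval in Python with NumPy. It is not the benchmark's code and says nothing about how DePaola-5 works internally. The vector width (`dim=1024`), the Gaussian random vectors, the function names, and the use of cosine similarity as a stand-in for the chart's SNR metric are all assumptions made for illustration.

```python
import numpy as np


def bind(a, b):
    # HRR binding: circular convolution, computed via the FFT.
    return np.fft.irfft(np.fft.rfft(a) * np.fft.rfft(b), n=len(a))


def unbind(trace, key):
    # HRR retrieval: circular correlation with the key, i.e. multiplying
    # by the complex conjugate of the key's spectrum (approximate inverse).
    return np.fft.irfft(np.fft.rfft(trace) * np.conj(np.fft.rfft(key)), n=len(trace))


def mean_recall_similarity(num_pairs, dim=1024, seed=0):
    # Hypothetical helper: store num_pairs random key-value pairs in ONE
    # vector, then report the average cosine similarity between each
    # retrieved value and the value that was actually stored.
    rng = np.random.default_rng(seed)
    scale = 1.0 / np.sqrt(dim)  # assumed: i.i.d. Gaussian entries, variance 1/dim
    keys = rng.normal(0.0, scale, size=(num_pairs, dim))
    values = rng.normal(0.0, scale, size=(num_pairs, dim))

    # Superposition: every bound pair is summed into the same trace vector.
    trace = np.zeros(dim)
    for k, v in zip(keys, values):
        trace += bind(k, v)

    sims = []
    for k, v in zip(keys, values):
        est = unbind(trace, k)  # stored value plus crosstalk from other pairs
        sims.append(est @ v / (np.linalg.norm(est) * np.linalg.norm(v)))
    return float(np.mean(sims))


if __name__ == "__main__":
    # Pair counts chosen to mirror the ranges mentioned in the article.
    for n in (25, 100, 125, 500):
        print(n, round(mean_recall_similarity(n), 3))
```

Running the loop should show similarity dropping as more pairs share the vector, which is the crosstalk effect described above. The exact values depend on the assumed width and random seed, so they will not line up with the chart's SNR figures.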

⬤ This matters beyond just the benchmark. As AI models keep growing and companies hunt for better compute efficiency, smarter memory representations might matter as much as throwing in more layers or parameters. A system like DePaola-5 could change how attention mechanisms develop, cut down on inefficient depth stacking, and push the field toward internal memory that actually handles complex tasks without breaking down.

Usman Salis
