⬤ The growing presence of AI across tech platforms puts the focus on how applications handle information, with context engineering emerging as the foundation for next-generation systems. Expanding context windows in large language models does not, on its own, solve the core challenge of delivering accurate responses. Context engineering instead designs the architecture around the model, controlling how information is gathered, filtered and delivered at the right moment.

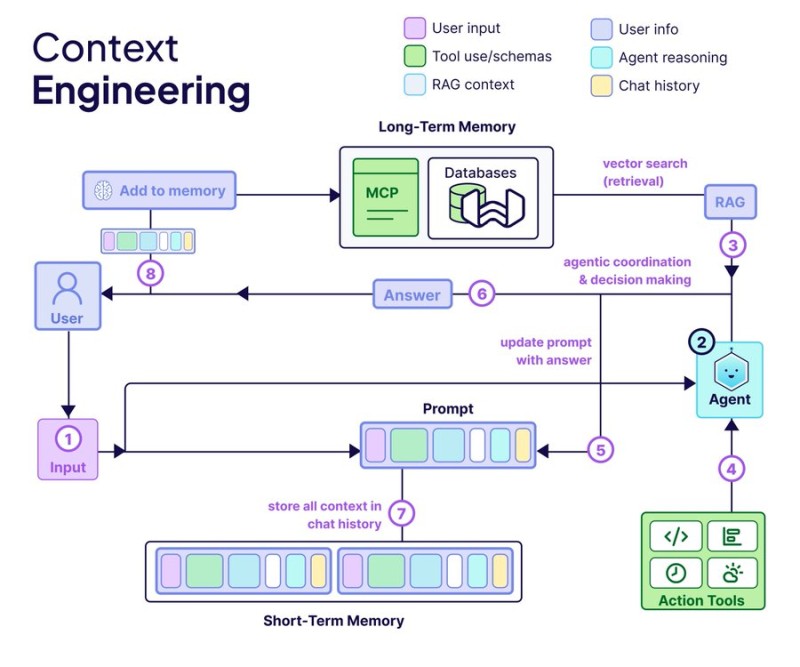

⬤ Agents form the decision-making core, evaluating existing knowledge, identifying data gaps, selecting tools and adapting when tasks change. Query augmentation transforms vague user input into precise instructions, so that downstream components receive an unambiguous request. Retrieval modules connect the model to external knowledge bases through vector search and structured chunking, pulling only contextually relevant information while keeping prompts efficient.
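
The retrieval step described above can be sketched in a few lines. This is a minimal illustration, not a production design: the function names (`chunk_text`, `embed`, `retrieve`, `build_prompt`) are hypothetical, chunking is a plain fixed-size word split, and the "embedding" is a toy bag-of-words count vector standing in for a learned embedding model and a real vector store.

```python
import math
import re
from collections import Counter


def chunk_text(text: str, max_words: int = 12) -> list[str]:
    """Split text into chunks of at most max_words words (assumed fixed-size chunking)."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:top_k]


def build_prompt(query: str, chunks: list[str], top_k: int = 2) -> str:
    """Assemble a compact prompt containing only the retrieved chunks."""
    context = "\n".join(f"- {c}" for c in retrieve(query, chunks, top_k))
    return f"Context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    knowledge = [
        "The invoice API returns JSON with a total field.",
        "Our office is closed on public holidays.",
        "Refunds are issued within five business days.",
    ]
    print(build_prompt("How long do refunds take?", knowledge, top_k=1))
```

Only the highest-scoring chunks reach the prompt, which is the point of the retrieval module: the model sees relevant context rather than the whole knowledge base.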

⬤ Memory systems are split between short-term conversational context and long-term persistent storage that remains accessible across interactions. Prompting strategies such as structured reasoning or few-shot examples help models apply retrieved data accurately. Integrated tools enable external actions, letting AI systems interact with live data, execute workflows and handle complex tasks beyond simple responses.
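
The short-term versus long-term split can be illustrated with a small sketch. The `AgentMemory` class and its method names are hypothetical, and the long-term store here is an in-memory dictionary used purely for illustration; a real system would likely back it with a database or vector store.

```python
from collections import deque


class AgentMemory:
    """Hypothetical two-tier memory: a bounded window of recent turns plus persistent facts."""

    def __init__(self, short_term_turns: int = 4):
        # Short-term memory: only the most recent turns are kept.
        self.short_term: deque[tuple[str, str]] = deque(maxlen=short_term_turns)
        # Long-term memory: survives across sessions (in-memory stand-in here).
        self.long_term: dict[str, str] = {}

    def add_turn(self, role: str, text: str) -> None:
        """Record a conversational turn; the oldest turn drops out when the window is full."""
        self.short_term.append((role, text))

    def remember(self, key: str, fact: str) -> None:
        """Store a fact that should persist beyond the current conversation."""
        self.long_term[key] = fact

    def new_session(self) -> None:
        """Start a new interaction: short-term context is cleared, long-term facts remain."""
        self.short_term.clear()

    def render_context(self) -> str:
        """Format both memory tiers as text to place in front of the model."""
        facts = "\n".join(f"{k}: {v}" for k, v in sorted(self.long_term.items()))
        turns = "\n".join(f"{role}: {text}" for role, text in self.short_term)
        return f"Known facts:\n{facts}\n\nRecent turns:\n{turns}"


if __name__ == "__main__":
    memory = AgentMemory(short_term_turns=2)
    memory.remember("preferred_language", "Python")
    memory.add_turn("user", "Hi")
    memory.add_turn("assistant", "Hello, how can I help?")
    memory.add_turn("user", "Show me a sorting example.")
    print(memory.render_context())
```

Bounding the short-term window keeps prompts small, while anything worth keeping across interactions is promoted explicitly to the long-term store.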

⬤ For tech companies embedding AI across their platforms, context engineering represents a shift from raw model size to intelligent orchestration. This evolution emphasizes efficient information flow, reliable external data grounding and modular tool integration. As AI moves toward production deployment, architectures built on context engineering will likely define how capabilities are delivered and how systems maintain coherence through extended interactions.

Saad Ullah
