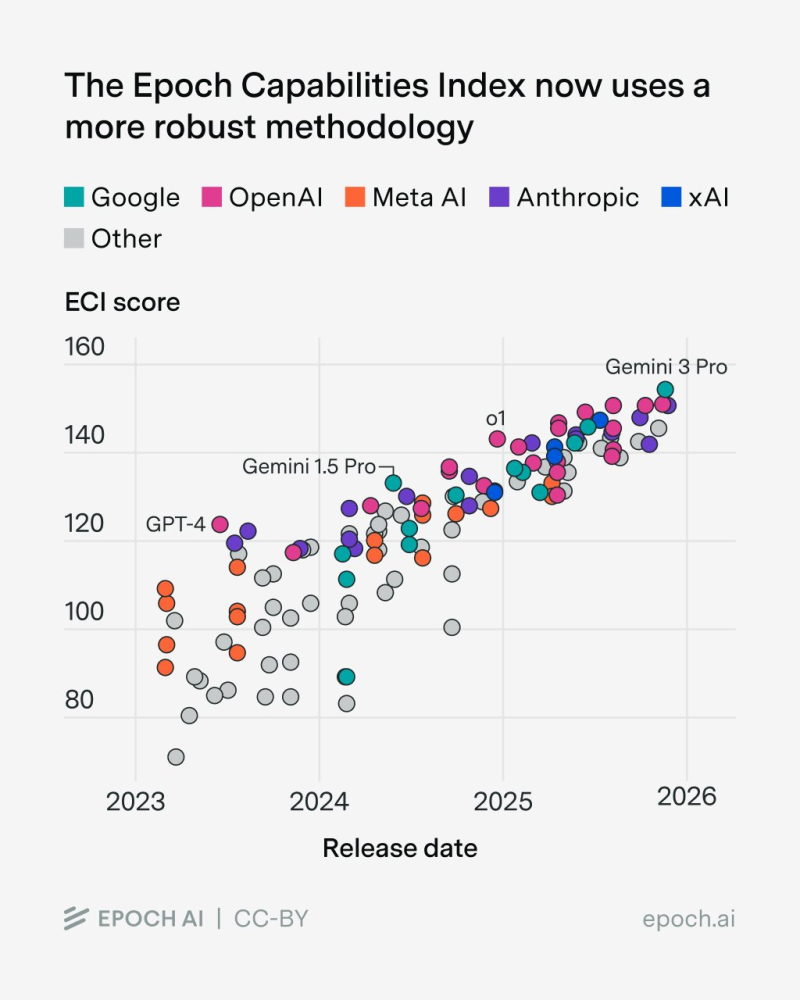

⬤ Epoch AI rolled out a major update to its Epoch Capabilities Index, changing how it evaluates cutting-edge AI systems. Instead of scoring different configurations separately—like varying context lengths or reasoning modes—the index now picks each model's strongest result across all settings. This gives us one clear capability score per model, making head-to-head comparisons much more straightforward.
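The best-score idea can be sketched in a few lines. This is a minimal illustration, assuming results arrive as (model, configuration, score) rows. The model names, configuration labels, and scores below are hypothetical placeholders, not Epoch AI's data or code, and the real index pipeline may work differently.

```python
# Hypothetical benchmark rows: (model, configuration, score).
# Names and numbers are illustrative only, not Epoch AI results.
results = [
    ("model-a", "short-context", 118.0),
    ("model-a", "long-context", 121.5),
    ("model-b", "reasoning-off", 129.0),
    ("model-b", "reasoning-high", 137.2),
    ("model-c", "default", 112.4),
]


def best_score_per_model(rows):
    """Collapse several configurations into one score per model by keeping the maximum."""
    best = {}
    for model, _config, score in rows:
        # Keep only the strongest result seen so far for this model.
        if model not in best or score > best[model]:
            best[model] = score
    return best


per_model = best_score_per_model(results)
print(per_model)
# Scoring each configuration separately would yield one entry per row;
# the best-score approach yields one entry per model.
print(len(results), "rows ->", len(per_model), "models")
```

Taking the maximum means a model is represented by its most favorable setting, which avoids counting the same model several times in a comparison.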

⬤ The updated charts reveal how AI performance has evolved from 2023 through 2026. Earlier models like GPT-4 hover around the 110-120 range, while newer releases—including OpenAI's o1, Anthropic's latest systems, Meta AI models, and Google's Gemini 1.5 Pro and Gemini 3 Pro—push into higher tiers approaching or breaking past 140. "By applying a best-score methodology, the index now reflects a more coherent, representative view of AI performance across companies," the team noted.

⬤ The old approach created messy comparisons because models with multiple test configurations got counted several times, distorting the overall picture. Now, by consolidating results into a single best-score metric, the index shows capability progression more clearly and gives a fairer view of how major players like Google, OpenAI, Meta AI, Anthropic, and xAI stack up against each other.

⬤ This upgrade arrives at a time when AI benchmarks heavily shape expectations across the tech and finance sectors. A more reliable capability index offers better visibility into competitive dynamics, shows how quickly model performance is improving, and gives everyone a clearer basis for forecasting where AI-driven innovation is headed next.

Eseandre Mordi