⬤ ByteDance has released a new diffusion-based code model that pairs solid reasoning ability with very fast generation. The model scores above 83 on HumanEval and was trained on more than one trillion tokens, putting it right up there with the best open code models available today.

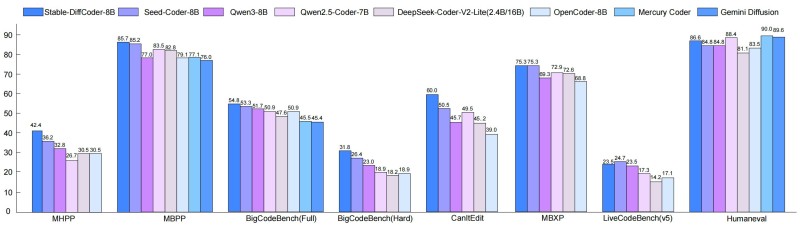

⬤ Benchmark comparisons show how this diffusion model stacks up against other popular code models across HumanEval, MBPP, BigCodeBench, and LiveCodeBench v5. On HumanEval specifically, ByteDance's model lands in the low-to-mid 80s, matching or beating several established models of similar size. Its performance holds steady across all four benchmarks rather than peaking on just one.

⬤ The real game-changer here is inference speed. Because diffusion decoding can fill in many tokens in parallel instead of emitting them one at a time, the model generates tokens up to 100 times faster than comparable autoregressive models, measured in tokens per second. That's huge for large-scale or real-time coding work where every millisecond counts. The model is released under an MIT license, so developers and researchers can use, modify, and redistribute it with very few restrictions.

⬤ The combination of strong HumanEval numbers and very fast inference signals growing momentum behind diffusion approaches for code generation. Getting both accuracy and speed at this level opens doors for next-gen developer tools and automated coding workflows. The release moves the field forward on both benchmark performance and practical deployment.

Victoria Bazir
