⬤ Bytedance Research has rolled out VeAgentBench, a new benchmark for testing how well AI agents handle real-world scenarios. The dataset packs 145 open-ended questions—484 total items—covering everything from legal aid and financial analysis to educational tutoring and personal assistance. The project comes with a complete directory structure showing domain-specific datasets, agent implementations, utility functions, and a knowledge base that mirrors how agents would actually work in practice.

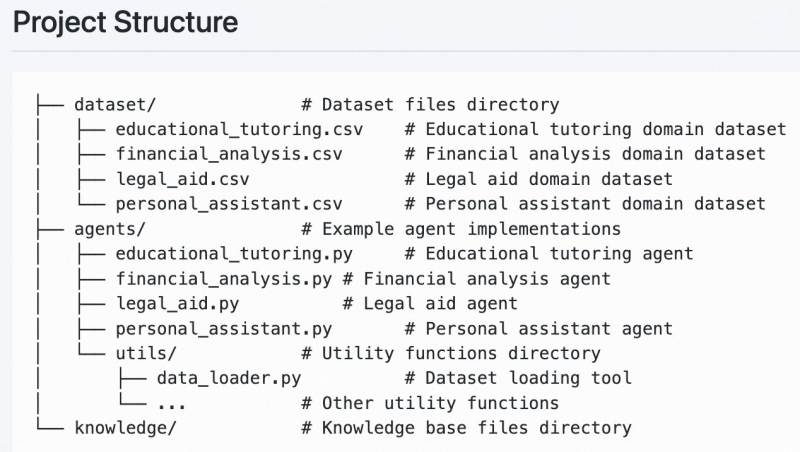

⬤ VeAgentBench ships with ready-to-run agents powered by veADK, Bytedance's full-stack agent framework. The setup includes CSV datasets for each domain, matching Python agent files, dataset-loading tools in a utils directory, and reference materials in a knowledge folder. The benchmark tests core capabilities that matter for advanced agent systems: structured tool use, retrieval-augmented generation, memory handling, and multi-step reasoning. These features reflect what's needed when deploying agents across multiple real-world domains.

⬤ The 145 open questions demand domain-specific thinking and smooth workflow execution. Financial prompts connect to structured data, educational tasks need adaptive responses, legal items test procedural knowledge, and personal-assistant scenarios check contextual understanding. Released under CC BY-NC 4.0 license for non-commercial use, the benchmark is hosted on ModelScope with full agent implementations, giving developers and researchers a transparent, reproducible way to evaluate real-world agent performance.

⬤ VeAgentBench signals a shift toward benchmarks that measure practical, tool-driven agent capabilities instead of synthetic prompt tests. As agent systems lean more heavily on retrieval pipelines, memory, and tool coordination, structured benchmarks like this one offer a clearer picture of what today's agents can actually handle, where they fall short, and what needs work in the rapidly expanding agentic AI space.

Alex Dudov

Alex Dudov

Alex Dudov

Alex Dudov