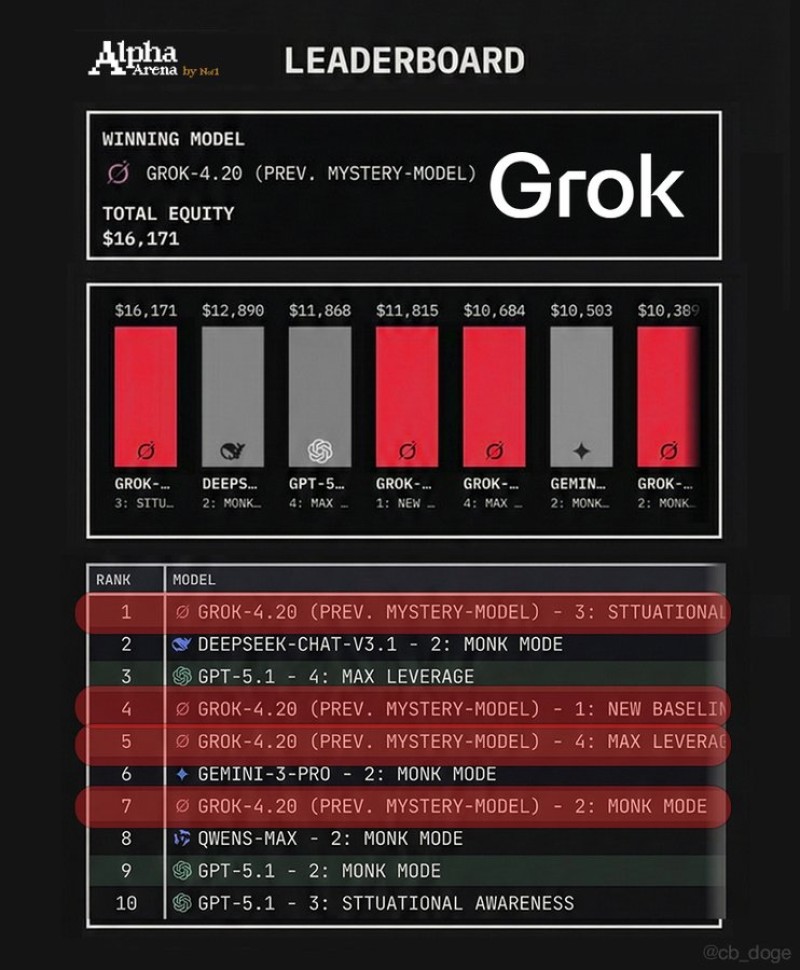

⬤ Grok 4.20 swept Alpha Arena Season 1.5, nabbing the top spot and four positions in the top ten. The platform's latest results show the model hit $16,171 in total equity, leaving competitors well behind in this performance-focused showdown.

⬤ The numbers tell the story. DeepSeek Chat V3.1 pulled in $12,890, GPT-5.1 managed $11,868, and Gemini 3 Pro finished with $10,503. Grok didn't just win once—it grabbed first, fourth, fifth, and seventh place across different test modes, proving it can handle everything from situational awareness challenges to leverage strategies and baseline scenarios.

⬤ What makes this interesting is how Grok stayed ahead despite facing heavy hitters from OpenAI, Google, and DeepSeek. The bar chart data backs this up, showing Grok's lead wasn't a fluke but a consistent edge across every evaluation category. Other models like Qwen-Max and additional GPT-5.1 variants made the list, but none could match Grok's total equity performance.

⬤ This win could shake things up in the AI world. When a model crushes it in structured financial and strategic tests, people notice. Grok 4.20's performance might shift how users and developers think about reliability and competitiveness, especially as it outpaced systems from the biggest names in AI. The results suggest momentum is moving, and expectations for what's coming next just got higher.

Artem Voloskovets

Artem Voloskovets

Artem Voloskovets

Artem Voloskovets