⬤ Researchers at Nanyang Technological University just dropped DynamicVLA - a Vision-Language-Action model that lets robots interact with changing environments in real time. This system finally closes the gap between what robots see and what they do, letting them react on the fly instead of freezing between observation and action.

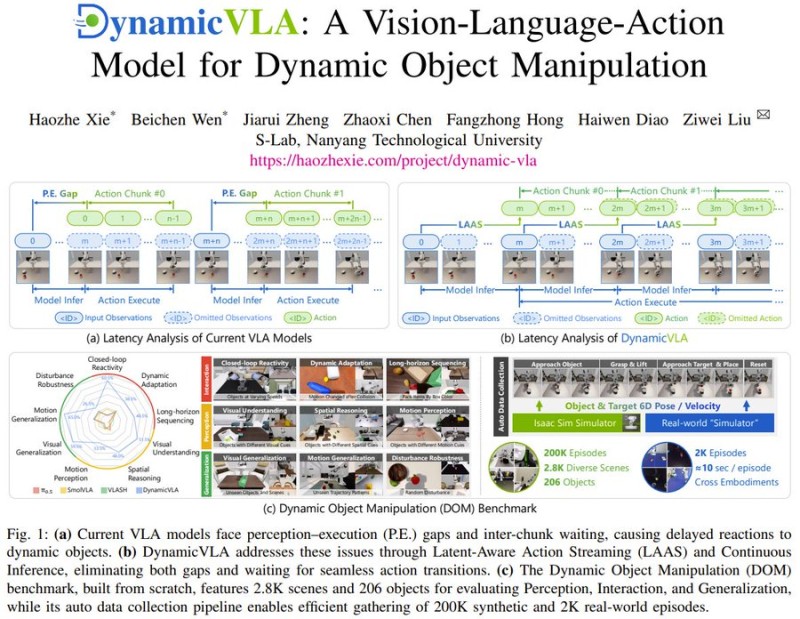

⬤ Here's the problem with older robotic models: they lag. That perception-to-execution delay kills performance when things are moving fast or getting unpredictable. DynamicVLA fixes this with something called Latent-Aware Action Streaming and continuous inference, which basically means robots can flow smoothly from one action to the next. "The system bridges the gap between perception and execution, enabling robots to react immediately," the researchers explained. Now instead of robotic pick-and-place routines, these machines can grab moving objects and roll with disturbances. Similar real-world progress is happening with humanoid welding robots coming to Fincantieri shipyards by 2026.

⬤ The team tested DynamicVLA using the Dynamic Object Manipulation dataset, and the results show better motion perception, sharper spatial reasoning, and solid generalization across thousands of different scenes and objects. The model works in both simulated setups and real-world conditions, proving more agile than previous systems when handling complex tasks. That kind of precision is already showing up in industrial settings - check out how Unitree humanoid robots handle real factory assembly with millimeter precision.

⬤ This development marks a major step toward embodied AI systems that can continuously interact with the physical world. Real-time robotic response is pushing machines beyond scripted automation into actual adaptive behavior in dynamic environments.

Alex Dudov

Alex Dudov

Alex Dudov

Alex Dudov