A breakthrough discovery from Google's AI labs suggests that one of the most effective ways to improve language model performance might also be the simplest: just ask twice. The finding challenges assumptions about how to optimize LLM interactions and opens new possibilities for enhancing AI accuracy without expensive model upgrades.

New Research Shows Dramatic Accuracy Gains from Simple Repetition

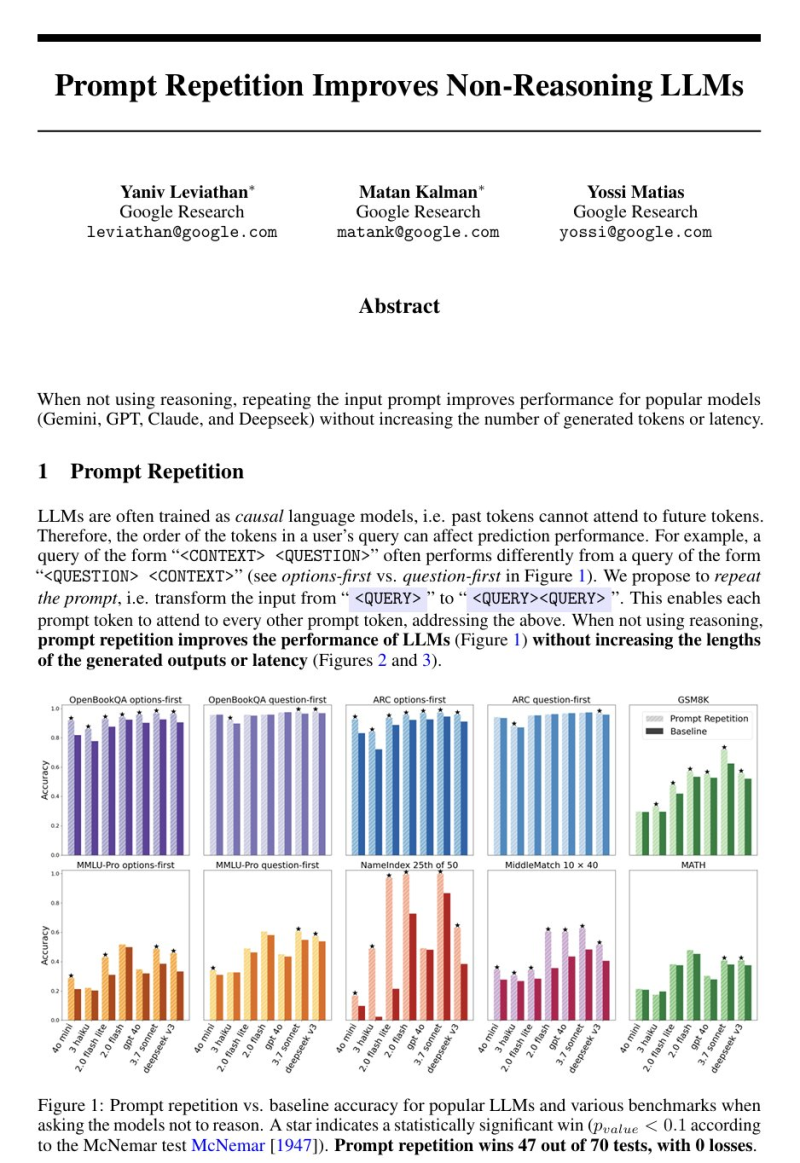

A new Google Research paper titled "Prompt Repetition Improves Non-Reasoning LLMs" explores a surprisingly effective technique to boost language model performance. The study demonstrates that including the same prompt twice in a single input can significantly increase accuracy across several major model families.
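As a rough illustration of what "repeating the prompt" could look like in practice, here is a minimal Python sketch. The helper name `repeat_prompt`, the blank-line separator, and the sample query are assumptions made for this example, not details taken from the paper.

```python
def repeat_prompt(prompt: str, times: int = 2, separator: str = "\n\n") -> str:
    """Return `prompt` concatenated with itself `times` times.

    The separator is an illustrative choice; the exact formatting a given
    model responds to best may differ.
    """
    if times < 1:
        raise ValueError("times must be at least 1")
    return separator.join([prompt] * times)


# Hypothetical query: context first, question second.
query = (
    "Context: The meeting moved from Tuesday to Thursday.\n"
    "Question: What day is the meeting?"
)

# The doubled string is what would be sent to the model as a single input.
doubled = repeat_prompt(query)
print(doubled)
```

The doubled string replaces the original prompt in whatever API call is already in use; nothing else about the request needs to change.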

The researchers tested their approach across seven different benchmarks and multiple AI systems including Gemini, GPT-style models, Claude, and DeepSeek. The accuracy improvements ranged from modest to remarkable, with one task seeing performance jump from just 21% to an impressive 97%.

Why Repeating Prompts Works: The Transformer Architecture Explanation

The effectiveness comes down to how transformer models process information. As the research team explains, these models work strictly left to right - each piece of text can only "see" what came before it, never what comes after.

When users place context before a question, the model answers with full knowledge of the context, but the context itself was processed without awareness of the question, the researchers note in their paper.

Repeating the prompt essentially gives every part of the input a second chance. In the second copy, each token can attend to the entire first copy, so the context is re-read with the question already in view.
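The left-to-right constraint can be sketched with a toy example in Python. This is a simplification under stated assumptions: each segment ("ctx", "question") stands in for many real tokens, and the helper names are hypothetical. It only models which positions are visible under a causal mask, not what a real model computes.

```python
def causal_visible(tokens):
    """For each position i, return the tokens it may attend to (positions 0..i)."""
    return [tokens[: i + 1] for i in range(len(tokens))]


def sees_question(tokens, position):
    """True if the token at `position` can attend to any 'question' token."""
    return "question" in causal_visible(tokens)[position]


single = ["ctx", "question"]
repeated = single + single  # ["ctx", "question", "ctx", "question"]

# Single prompt: the context (position 0) is processed before the question exists.
print(sees_question(single, 0))    # False

# Repeated prompt: the second copy of the context (position 2) can attend
# to the question from the first copy.
print(sees_question(repeated, 2))  # True
```

In the single prompt the context never gets to look at the question, while in the repeated prompt its second copy does, which matches the explanation above.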

Zero Cost, Maximum Benefit

What makes this discovery particularly valuable is its practicality. The paper reports no increase in output length and no meaningful latency impact, because the input, including its repeated copy, is processed in parallel on modern hardware. The approach requires no fine-tuning, no additional training, and no complex prompt engineering beyond simple duplication.

The results suggest that performance improvements in large language models may come not only from building bigger architectures but also from smarter interaction patterns. Simple formatting changes to how we structure prompts can materially affect model behavior, highlighting that user input design remains a critical factor in real-world AI performance.

Eseandre Mordi
