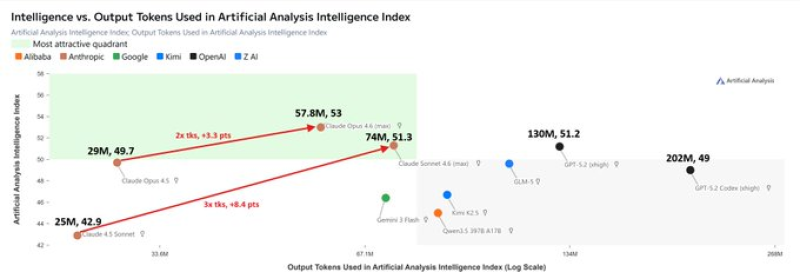

⬤ A fresh AI benchmark comparison is turning heads in the industry as developers race to build smarter, leaner systems. Data from the Artificial Analysis Intelligence Index shows Anthropic's models still crush GPT-5.2-xhigh on token efficiency, even though newer Claude versions use more tokens than their predecessors.

⬤ The chart stacks up intelligence scores against output token usage across models from Anthropic, OpenAI, and Google. Claude 4.6 burns through more tokens than Claude 4.5 while keeping strong performance scores. Still, Anthropic's systems need roughly half the tokens GPT-5.2-xhigh does to hit similar performance levels, keeping them ahead in the efficiency game even as their usage climbs.

⬤ But OpenAI might be closing in fast. Recent figures point to serious efficiency gains in an upcoming model. GPT-5.3-Codex-xhigh reportedly used just 44k tokens on SWE-Bench Pro, compared with 92k for GPT-5.2-Codex, a reduction of more than 50%. If a similar improvement carries over to the main index, projected usage could fall to around 61.1M tokens, possibly pushing OpenAI past Anthropic in efficiency while also boosting performance.
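For readers who want to check the math, the reduction is the difference between the two token counts divided by the older count. The short Python sketch below uses only the rounded 44k and 92k SWE-Bench Pro figures quoted above; `percent_reduction` is an illustrative helper name, not part of any benchmark tooling. With these rounded inputs the drop comes out to roughly 52%, so small differences from published percentages can come down to rounding of the underlying token counts.

```python
def percent_reduction(old_tokens: float, new_tokens: float) -> float:
    """Percentage drop going from old_tokens to new_tokens (illustrative helper)."""
    return (old_tokens - new_tokens) / old_tokens * 100


# Rounded SWE-Bench Pro token counts quoted in the article.
gpt_52_codex = 92_000   # GPT-5.2-Codex
gpt_53_codex = 44_000   # GPT-5.3-Codex-xhigh

drop = percent_reduction(gpt_52_codex, gpt_53_codex)
print(f"{drop:.1f}%")                            # 52.2%
print(f"{gpt_53_codex / gpt_52_codex:.2f}")      # 0.48, i.e. under half the tokens
```

The same ratio is what any projection for the main index would rest on: a baseline token total multiplied by (1 minus the reduction), under the unverified assumption that the Codex gain transfers unchanged.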

⬤ These efficiency gaps matter beyond bragging rights. They directly affect computing costs and deployment economics, shaping how businesses choose between models. The competitive pressure keeps ramping up, as highlighted in "OpenAI warns Congress China distilling AI models to catch up in tech race."

⬤ Meanwhile, rapid growth among AI providers continues to reshape the landscape. Anthropic's explosive trajectory shows just how fast things are moving, as detailed in "Anthropic hits $380B valuation after revenue jumps from $1B to $14B in 14 months."

Usman Salis