⬤ A new AI framework called REASONEDIT has been introduced to improve complex image-editing workflows by adding reasoning capabilities to generative systems. It uses a multimodal large language model (MLLM) to think through high-level editing instructions and a structured reflection process to refine results over iterative rounds. The approach targets a limitation of traditional editors, which struggle with abstract, concept-based modifications.

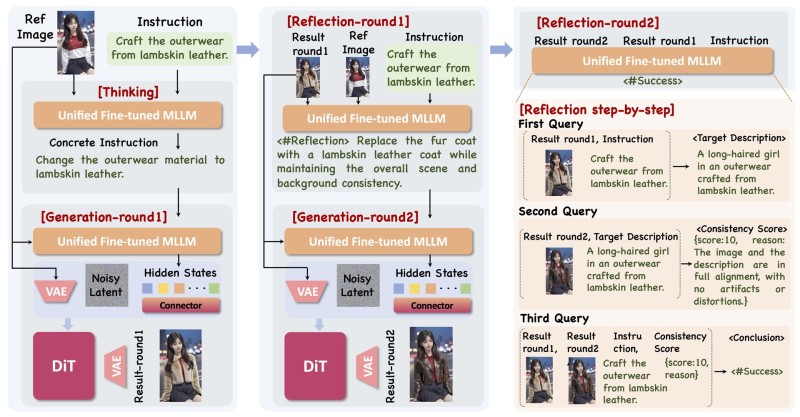

⬤ REASONEDIT's multi-stage reasoning pipeline starts with a unified fine-tuned MLLM that analyzes the input instruction and converts it into a concrete editing command. For example, "Craft the outerwear from lambskin leather" is translated into a specific material-change directive, which guides the first image-generation round through a Diffusion Transformer (DiT) based architecture connected to VAE latents and hidden-state representations. After producing the initial output, the system enters a reflection phase in which it reassesses how closely the image aligns with the intended edit. This step produces a refined description that explicitly calls for replacing a fur coat with lambskin leather while preserving the scene and background.

⬤ The second generation round incorporates this refined reasoning, producing an image that better matches the original instruction. A built-in evaluation module assigns a consistency score based on how accurately the output reflects the requested change. In the example shown, the system assigns a full score of 10, indicating that the image and description are fully aligned, with no artifacts or distortions. The framework can repeat this cycle until the model determines that the visual result, reference image, and textual instruction are consistent. By integrating MLLM-driven reasoning directly into the latent-editing pipeline, REASONEDIT aims to improve semantic accuracy and reduce common attribute-editing failures.
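
The think, edit, reflect, and score cycle described above can be sketched as a simple control loop. This is a minimal illustration and not REASONEDIT's actual code: the names `reason_edit_loop`, `EditRound`, `think`, `edit`, and `reflect` are hypothetical, images are stood in for by plain strings, and the choices to re-edit from the source image each round and to cap the loop at `max_rounds` are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class EditRound:
    command: str  # concrete editing command used in this round
    image: str    # placeholder for the edited image (a string in this sketch)
    score: int    # consistency score from the reflection step (0 to 10)


def reason_edit_loop(
    instruction: str,
    source_image: str,
    think: Callable[[str, str], str],
    edit: Callable[[str, str], str],
    reflect: Callable[[str, str, str], Tuple[int, str]],
    max_rounds: int = 3,      # assumed cap, not taken from the article
    target_score: int = 10,   # full score in the article's example
) -> List[EditRound]:
    """Run think -> edit -> reflect until the score reaches the target."""
    # Thinking: turn the abstract instruction into a concrete command.
    command = think(instruction, source_image)
    history: List[EditRound] = []
    for _ in range(max_rounds):
        # Generation: apply the current command (assumed: from the source).
        image = edit(source_image, command)
        # Reflection: score the result and propose a refined command.
        score, refined = reflect(source_image, image, instruction)
        history.append(EditRound(command, image, score))
        if score >= target_score:
            break
        command = refined
    return history


# Hypothetical stand-ins for the MLLM and the image generator.
def stub_think(instruction: str, image: str) -> str:
    return "change the coat material to leather"


def stub_edit(image: str, command: str) -> str:
    return f"{image} | {command}"


def stub_reflect(source: str, edited: str, instruction: str) -> Tuple[int, str]:
    if "lambskin" in edited:
        return 10, "no further change needed"
    return 6, ("replace the fur coat with lambskin leather; "
               "keep the scene and background")


history = reason_edit_loop(
    "Craft the outerwear from lambskin leather",
    "photo.png",
    stub_think,
    stub_edit,
    stub_reflect,
)
for r in history:
    print(r.score, r.command)
```

With these stubs, the first round falls short of the target, the reflection step supplies a more specific command, and the second round reaches the full score and stops the loop. In a real system the three callables would wrap the MLLM and the image generator.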

⬤ The introduction of REASONEDIT shows how reasoning-enhanced models are reshaping expectations in generative AI. As image editing tasks become more sophisticated across creative, commercial, and technical domains, systems that can interpret abstract instructions and self-correct through iterative reflection may set a new standard for precision and reliability. The integration of step-by-step reasoning with high-fidelity visual generation highlights a broader shift toward more intelligent and controllable AI-assisted editing tools.

Alex Dudov
