● In a recent post, Rohan Paul shared news that Meituan—China's answer to DoorDash—has dropped LongCat-Video, a powerful video generation model packing 13.6 billion parameters. Released under the MIT open-source license on Hugging Face, this model is turning heads in China's AI scene. It's a unified framework that handles Text-to-Video, Image-to-Video, and Video-Continuation all in one go, using a diffusion-based approach.

● LongCat-Video runs on a Diffusion Transformer (DiT) architecture with 48 layers and 4096 hidden width. The technical backbone includes 3D attention and cross-attention mechanisms, 3D rotary embeddings, and RMSNorm. For compression, it uses a WAN 2.1-style variational autoencoder (VAE) and brings in umT5 for text encoding. The whole setup is designed to generate minutes-long, high-quality 720p videos at 30fps while keeping things temporally consistent—something that's notoriously tricky in diffusion-based video work.

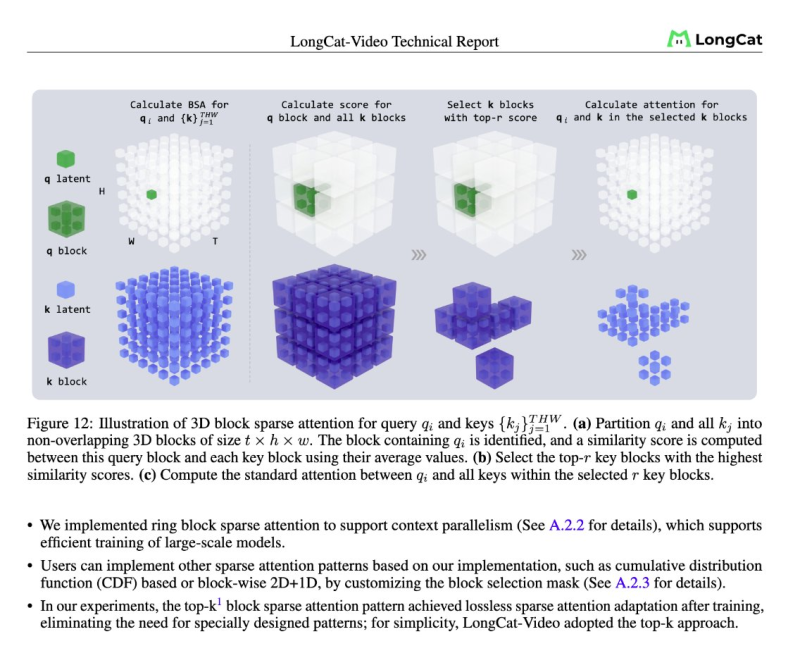

● On the performance side, Meituan's engineers cooked up a 3D Block Sparse Attention mechanism that cuts computational load to less than 10% of what you'd normally see with standard diffusion operations. This means they're getting 10× faster 720p inference on just a single NVIDIA H800 GPU. The model works in stages: it first renders a 480p 15fps sequence, then upscales and refines it to 720p 30fps using a LoRA-based refinement expert. It's a smart trade-off between speed and visual quality, helping avoid the flickering and motion drift that can plague long video sequences.

● The training approach is equally interesting. The team used multi-reward GRPO reinforcement learning, which combines a frame quality scorer, motion coherence evaluator, and text-video match judge. This helps boost realism and narrative flow without falling into the "reward hacking" trap. On VBench 2.0, LongCat-Video hit 62.11% overall and 70.94% on commonsense accuracy—putting it roughly on par with leading closed-source models.

● Commenting on the launch, Vaibhav Srivastav pulled a quote from Meituan's paper:

We introduce LongCat-Video, a foundational video generation model with 13.6B parameters, delivering strong performance across Text-to-Video, Image-to-Video, and Video-Continuation tasks.

● Meanwhile, Teortaxes▶️ called the release "Meituan's revenge on Alibaba," applauding the company for doing "serious research beyond me-too training" and noting how quickly they've ramped up their AI R&D game.

● With LongCat-Video, Meituan is staking its claim as a serious AI research player, going toe-to-toe with global leaders by releasing transparent, high-performance open-source models that balance efficiency, quality, and scale. It's a move that's reshaping how we think about China's role in the generative AI race.

Peter Smith

Peter Smith

Peter Smith

Peter Smith