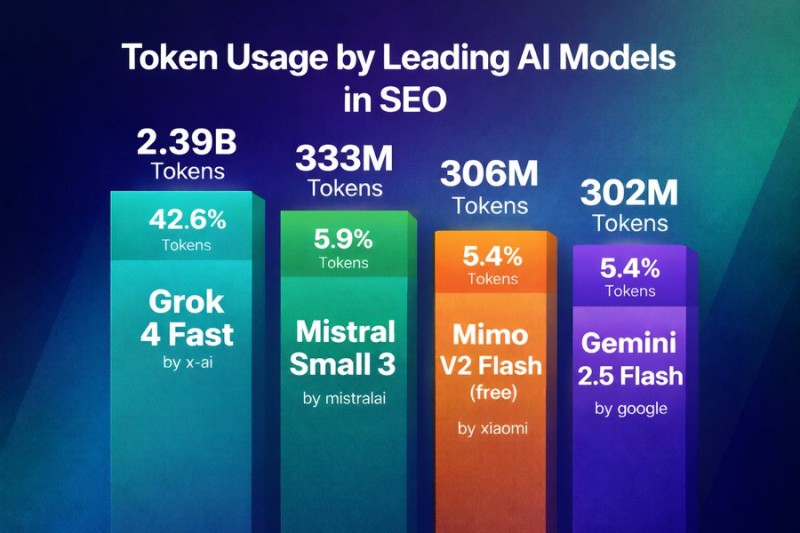

⬤ Grok has taken a commanding lead in AI-powered SEO work, and new token usage numbers show just how far ahead it has pulled. Grok 4 Fast from xAI processed roughly 2.39 billion tokens, accounting for 42.6% of all token usage across the major AI models handling SEO tasks. That leaves a massive gap between Grok and everything else in the space.

⬤ The numbers tell a clear story. Mistral Small 3 managed about 333 million tokens (5.9% share), Mimo V2 Flash hit around 306 million (5.4%), and Gemini 2.5 Flash came in at roughly 302 million (also about 5.4%). Combined, those three runners-up account for about 941 million tokens, or roughly 16.7% of usage, which is still less than 40% of Grok's volume. These models are getting used, but the comparison is hundreds of millions versus billions, and it's not even close.

⬤ What this suggests is that Grok is being deployed on a completely different scale for SEO work. Token counts in the billions point to more than testing: they indicate serious, ongoing content generation, indexing, and optimization at enterprise scale. Companies doing AI-powered SEO appear to be betting on systems that can handle massive workloads without choking, and right now Grok 4 Fast seems to be the go-to choice.

⬤ This shift shows how actual usage data is becoming the real test for AI platforms. As SEO gets more automated and data-hungry, token consumption offers a window into which models people actually trust for the heavy lifting. Grok's dominance here signals that the AI race is no longer just about features; it's about which systems can scale and deliver when you're running real operations day in and day out.

Usman Salis