⬤ Arcee has rolled out its latest AI model family, introducing the Trinity Mini and Trinity Nano series built on mixture-of-experts (MoE) architectures. The lineup includes a 26-billion-parameter model with 3 billion active parameters and a 6-billion-parameter model with 1 billion active parameters. The update also adds new reasoner models and refreshed base models, extending Arcee's lineup across both the lightweight and mid-scale categories.

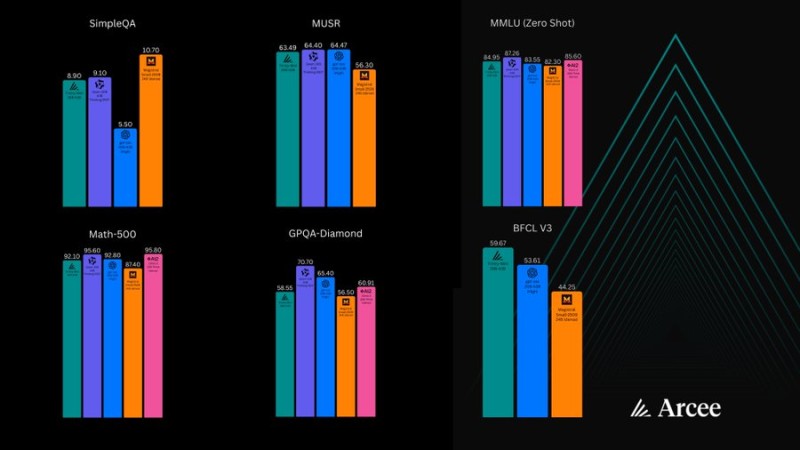

⬤ The announcement includes benchmark results on SimpleQA, MuSR, MATH-500, GPQA-Diamond, MMLU (zero-shot), and BFCL v3, comparing the new models against competing baselines. On SimpleQA and MATH-500 the models score competitively with the other entries, while on MuSR and GPQA-Diamond they hold a consistent position in the comparison charts.

⬤ The company emphasized that these models use efficient MoE routing so that only a fraction of the total parameters is active during inference: 3 billion of 26 billion in the larger model and 1 billion of 6 billion in the smaller one. The dedicated reasoner models are designed for more advanced logical reasoning and structured task execution, while the new base models offer broader flexibility for downstream applications that don't need specialized reasoning components.
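To make the "fraction of total parameters" idea concrete, here is a minimal, illustrative sketch of generic top-k MoE routing. It is not Arcee's implementation: the announcement does not describe the number of experts, the value of k, or how the router is built, so the scalar "experts", the router logits, and k below are all hypothetical. The sketch only shows the general technique, in which a router scores every expert but only the k best-scoring experts are actually evaluated, and it computes the active-parameter ratio from the parameter counts stated above.

```python
import math
from typing import Callable, List, Tuple


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits: List[float], k: int) -> List[Tuple[int, float]]:
    """Select the k highest-scoring experts and renormalize their gate weights.

    Hypothetical router: real MoE layers compute these logits with a learned
    projection of the token's hidden state; here they are passed in directly.
    """
    chosen = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)[:k]
    gates = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, gates))


def moe_forward(x: float,
                experts: List[Callable[[float], float]],
                router_logits: List[float],
                k: int) -> float:
    """Evaluate only the k routed experts and mix their outputs by gate weight.

    Toy scalar experts stand in for the feed-forward blocks of a real model;
    experts that are not selected are never called.
    """
    return sum(gate * experts[i](x) for i, gate in top_k_route(router_logits, k))


def active_fraction(active_params: float, total_params: float) -> float:
    """Share of total parameters used for each token's forward pass."""
    return active_params / total_params


if __name__ == "__main__":
    # Parameter counts (in billions) as stated in the announcement.
    print(f"26B total / 3B active: {active_fraction(3, 26):.1%} active")
    print(f"6B total / 1B active: {active_fraction(1, 6):.1%} active")
```

Run as a script, it reports that roughly 11.5% of the larger model's parameters and 16.7% of the smaller model's parameters are active per token. The design point the sketch illustrates is that compute per token scales with the k selected experts rather than with the full expert pool.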

⬤ The launch reflects the ongoing push toward efficient models in a competitive AI landscape. With benchmark results across multiple academic tasks and a focus on parameter-efficient design, the Trinity Mini and Trinity Nano releases show how developers are balancing performance, cost, and scalability as the market shifts toward increasingly specialized AI systems.

Artem Voloskovets