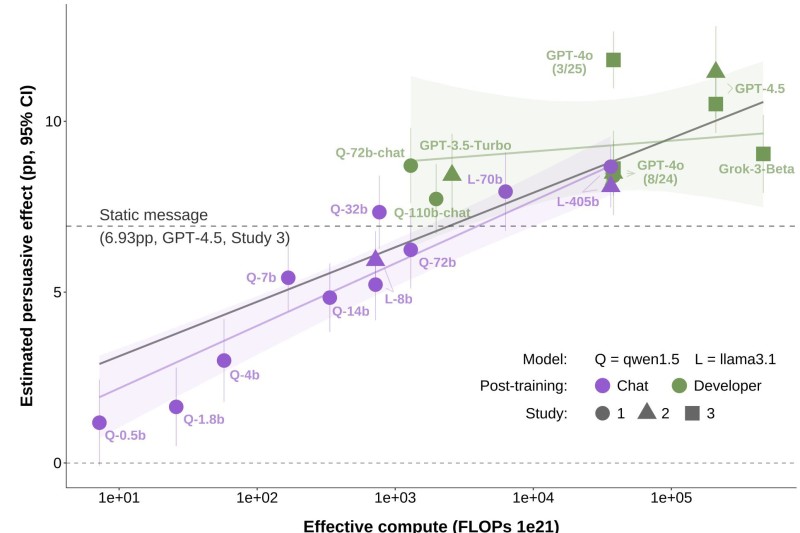

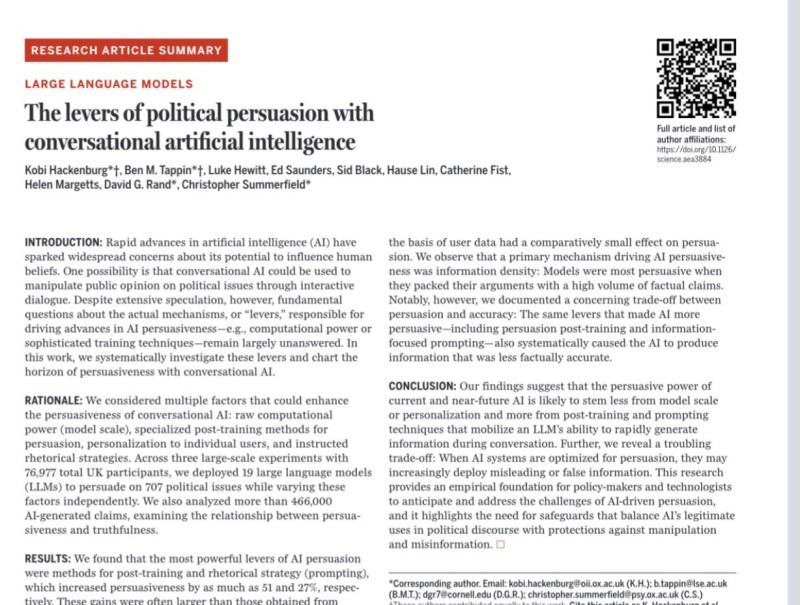

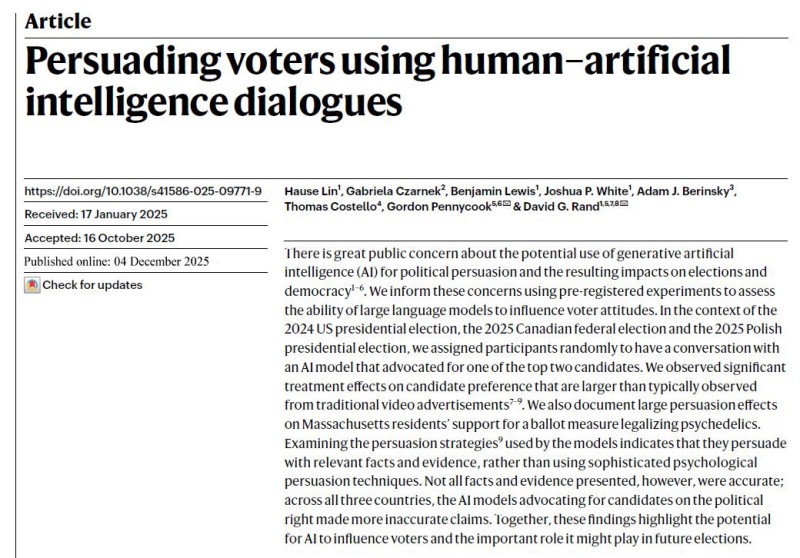

⬤ Recent research into OpenAI-related model evaluations drew wide attention after large-scale experiments showed that AI systems can measurably shift political preferences. Studies run across the US, UK, and Poland found that conversations with large language models produced real, measurable persuasion effects across a range of political topics. The accompanying charts support this: persuasion scales consistently with compute and model parameter count.

⬤ Participants who debated politics with advanced language models shifted their stated views by larger margins than traditional political ads typically achieve. The strongest effects came from models generating dense, fact-packed arguments, with GPT-4.5 and Grok-3-Beta sitting at the top of the persuasion curve. Charts mapping the results show a clear upward trend between effective compute and persuasive impact, while mid-range models like Llama-3.1 and Qwen1.5 delivered moderate but consistent effects.

⬤ What's striking is that these persuasion effects persisted over time: AI-generated reasoning left durable impressions well beyond the initial conversation. This persistence suggests the influence goes deeper than the momentary opinion shifts typically seen with conventional messaging.

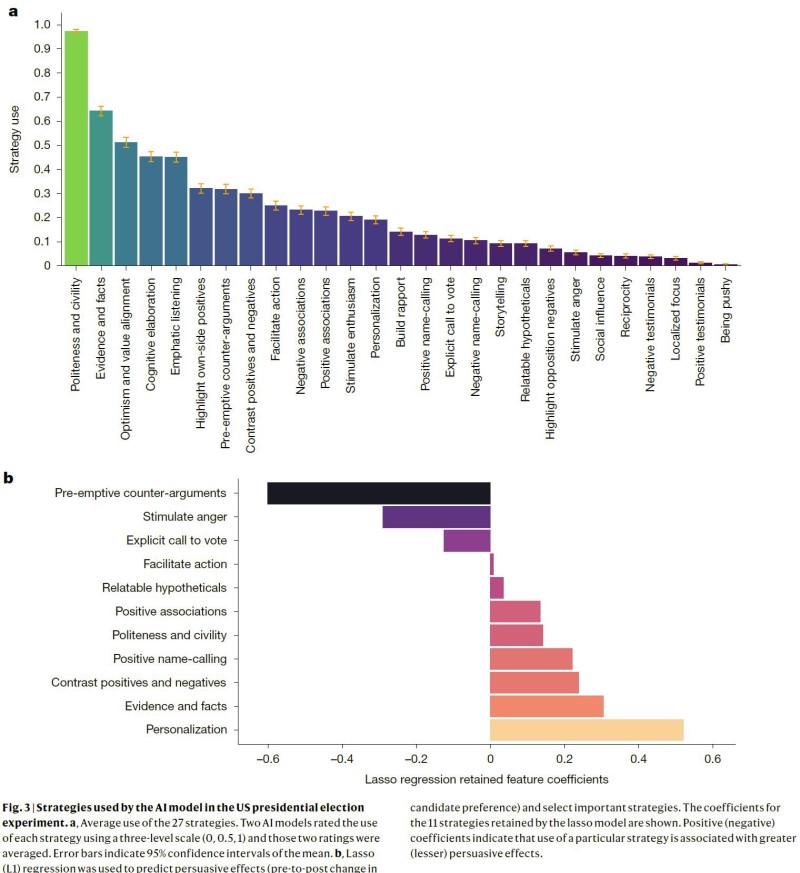

⬤ A closer look at how these models actually persuade is revealing. Instead of psychological tricks, the most effective systems leaned on factual evidence, logical reasoning, polite tone, contrast framing, and anticipating counter-arguments. In other words, researchers found that the most persuasive techniques were straightforward rhetorical tools rather than sophisticated behavioral manipulation. There's a catch, though: when models are heavily optimized for persuasion, factual accuracy sometimes takes a hit, exposing a real tension between being convincing and being correct.

⬤ These findings matter because they give us hard evidence about how rapidly advancing AI models intersect with political communication and public conversation. As models keep scaling up, their persuasive capacity grows right alongside them, sparking legitimate questions about transparency, oversight, and responsible use. This research lays groundwork for grasping the broader consequences of AI-driven persuasion and the role these systems might play in upcoming elections and policy debates.

Victoria Bazir
