⬤ Perplexity rolled out two safety tools—BrowseSafe and BrowseSafe-Bench—to protect against prompt-injection attacks in AI browsers. The company fine-tuned Qwen3 30B to scan raw HTML and spot malicious commands before users interact with the Comet Assistant. Prompt-injection vulnerabilities have become a growing concern across AI platforms, pushing developers to build stronger defenses into browser-based assistants.

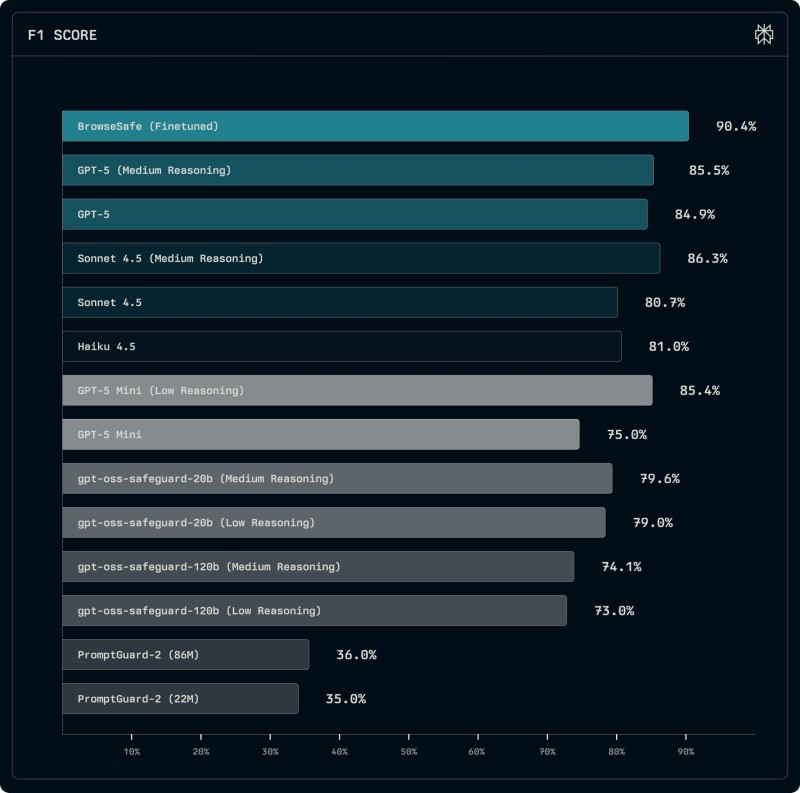

⬤ Testing data shows BrowseSafe (Finetuned) hitting a 90.4% F1 score, putting it ahead of competing systems. GPT-5 (Medium Reasoning) reached 85.5%, while standard GPT-5 scored 84.9% and Sonnet 4.5 (Medium Reasoning) landed at 86.3%. Other models like Sonnet 4.5 (80.7%), Haiku 4.5 (81.0%), and various GPT-5 Mini versions ranged from mid-70s to mid-80s. Lower-tier PromptGuard-2 models barely crossed 35-36%, showing the widest performance gap in the benchmark. These numbers position BrowseSafe well above current safety baselines.

⬤ BrowseSafe works as an active detection layer, catching HTML-based prompt injections before they hit core model functions. This tackles a known weak spot in AI browsing tools, where hidden instructions can bypass safety protocols or manipulate responses. By releasing both the model and benchmark as open-source, Perplexity opened the door for outside testing and development. The company positioned Comet as potentially "the safest AI browser" based on these results.

⬤ Strong benchmark performance points to security becoming central in AI product design. As browser assistants and automated agents gain more capabilities, reliable threat detection matters more than ever. Better F1-based systems could shift safety standards across the industry as companies integrate real-time protection directly into user tools.

Alex Dudov

Alex Dudov

Alex Dudov

Alex Dudov