⬤ As Apple expands its presence in AI, attention is shifting toward new methods that change how builders create autonomous systems. LangChain recently highlighted an emerging discipline called agent engineering, a framework that moves developers away from classic software models toward systems built to withstand unpredictable, real-world conditions. Agent engineering combines product strategy, engineering execution and data science to guide AI agents when they face ambiguous inputs and shifting scenarios.

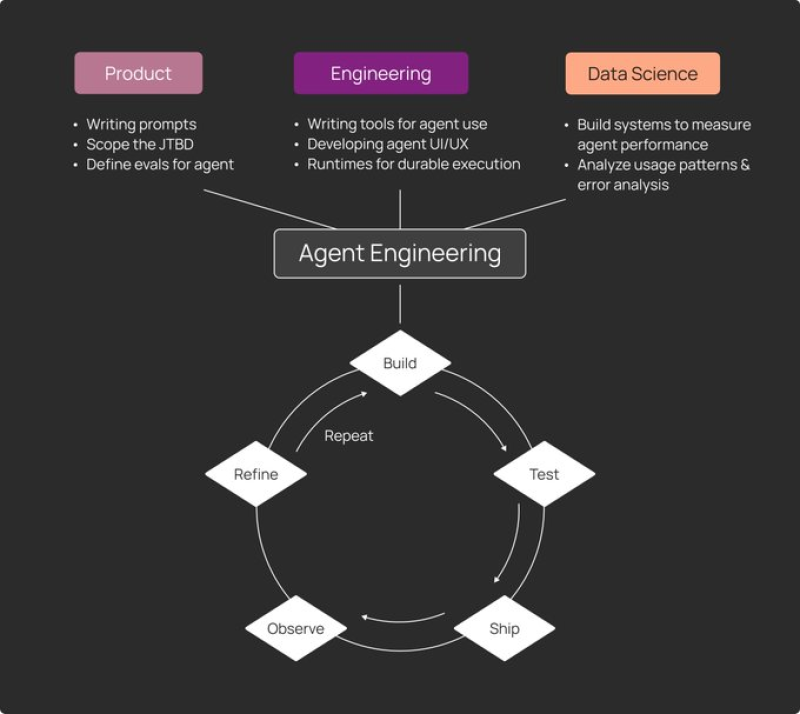

⬤ The shift makes sense once the limits of older software frameworks are considered. Traditional systems expect stable behaviour and follow fixed paths, whereas modern AI agents must adapt in real time: they respond to unclear instructions, changing data and live interaction. LangChain divides responsibilities among three core teams. Product teams define prompts and evaluation rules. Engineering teams build tools and runtime environments. Data science teams monitor performance through usage metrics and error logs. These groups repeat the same cycle (build, test, ship, observe, refine) to make agents more reliable and effective over time.
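To make the build, test, ship, observe, refine cycle more concrete, here is a minimal sketch of a single observe-and-refine pass. It is not LangChain's API or its actual method. `EvalCase`, `observe`, `make_agent` and `CycleReport` are hypothetical names, and the toy agent is a stand-in for a real LLM call. Evaluation rules play the role of the product team's checks, and the failure list and error log play the role of what data science would review before the next refinement.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical type: an "agent" is any function from user input to a reply.
Agent = Callable[[str], str]


@dataclass
class EvalCase:
    """One evaluation rule, as a product team might define it (hypothetical)."""
    user_input: str
    check: Callable[[str], bool]


@dataclass
class CycleReport:
    """What an observe step might hand back to the team (hypothetical)."""
    pass_rate: float
    failed_inputs: list[str] = field(default_factory=list)
    error_log: list[str] = field(default_factory=list)


def observe(agent: Agent, cases: list[EvalCase]) -> CycleReport:
    """Run every evaluation case and collect failures and runtime errors."""
    failed: list[str] = []
    errors: list[str] = []
    for case in cases:
        try:
            ok = case.check(agent(case.user_input))
        except Exception as exc:  # a crash counts as a failure and is logged
            ok = False
            errors.append(f"{case.user_input!r}: {exc}")
        if not ok:
            failed.append(case.user_input)
    rate = (len(cases) - len(failed)) / len(cases) if cases else 0.0
    return CycleReport(rate, failed, errors)


def make_agent(known_topics: set[str]) -> Agent:
    """Toy stand-in for an LLM-backed agent: it only handles topics it knows."""
    def agent(user_input: str) -> str:
        for topic in known_topics:
            if topic in user_input.lower():
                return f"Handled {topic} request"
        raise ValueError("unrecognised request")
    return agent


cases = [
    EvalCase("Where is my order?", lambda reply: "order" in reply),
    EvalCase("I want a refund", lambda reply: "refund" in reply),
]

# Ship version 1 and observe it, then refine based on what failed.
v1 = make_agent({"order"})
report_v1 = observe(v1, cases)
v2 = make_agent({"order", "refund"})  # refinement driven by the failed input
report_v2 = observe(v2, cases)

print(report_v1.pass_rate, report_v1.failed_inputs)
print(report_v2.pass_rate, report_v2.failed_inputs)
```

In a real deployment, the inputs would come from production traffic rather than a fixed list. That matches the article's point that the most useful failures only appear once agents are live.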

Meaningful improvements appear only after agents run in live production, where sudden failures and behavioural gaps become visible.

⬤ LangChain argues that real progress begins once agents run in production, where genuine failures and gaps surface. Teams iterate continuously, adjusting prompts, tuning tools and refining user flows based on field observations. This matches a wider industry pattern in which AI firms, including those linked to Apple's ecosystem, now emphasise reliability and controllability as key selling points for commercial use.

⬤ The rise of agent engineering shows how complex autonomous AI systems have become and why continuous iteration is now mandatory rather than optional. For the market, this development signals possible shifts in software development costs, infrastructure demand and competitive positioning across the sector. As companies like LangChain formalise these practices, they are setting operational norms that could shape expectations for AI deployment and performance for years to come.

Eseandre Mordi