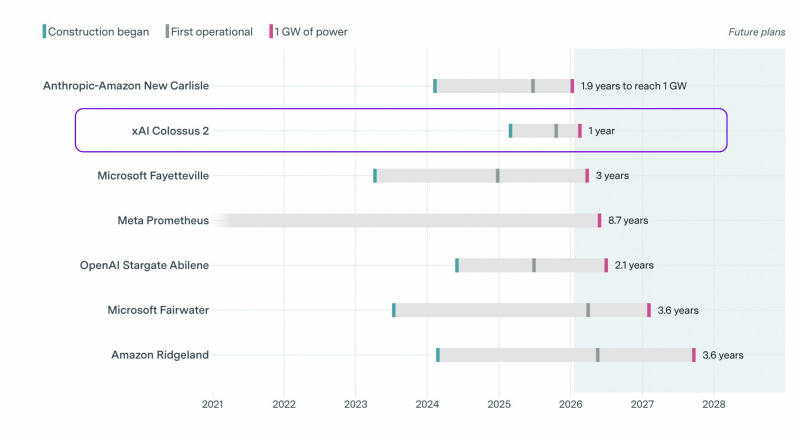

⬤ xAI's Colossus II data center has reached a major milestone: 1 gigawatt of power capacity in roughly one year. A recent comparative chart sets this timeline beside major AI infrastructure projects from Microsoft, Meta, Amazon, Anthropic, and OpenAI, and the gap is striking. Competitors are taking roughly two to nine years to bring their power infrastructure online. xAI moved fast enough to challenge industry assumptions about how quickly capacity can be deployed. The comparison also highlights how grid access, rather than hardware, has become the real bottleneck in AI development.

⬤ The numbers make the gap clear. xAI Colossus II reached 1 GW in about one year. By comparison, OpenAI's Stargate Abilene is tracking at around 2.1 years, Microsoft's Fayetteville project at about three years, Amazon's Ridgeland at about 3.6 years, and Meta's Prometheus at roughly 8.7 years. Even Anthropic's Amazon New Carlisle facility, the fastest of the rest, is projected to need close to two years, about twice as long as xAI. These timelines show large differences in how quickly major AI companies can secure and deliver power at scale.

⬤ This matters because power availability, not GPU supply, has become the main constraint on AI scaling. Many AI operators already have advanced hardware ready to deploy but are waiting for grid connections, transmission upgrades, and power delivery to catch up. Industry leaders have publicly acknowledged that electricity access and infrastructure readiness now shape deployment speed more than chip availability does.

⬤ As a result, competition in AI increasingly turns on infrastructure execution and energy systems. The Colossus II timeline shows that rapid power deployment can dramatically accelerate AI capacity, while slow grid integration can stall projects even when hardware is plentiful. As AI workloads keep growing, power infrastructure timelines will increasingly determine which companies can scale, which projects remain viable, and who holds the long-term competitive edge.

Eseandre Mordi
