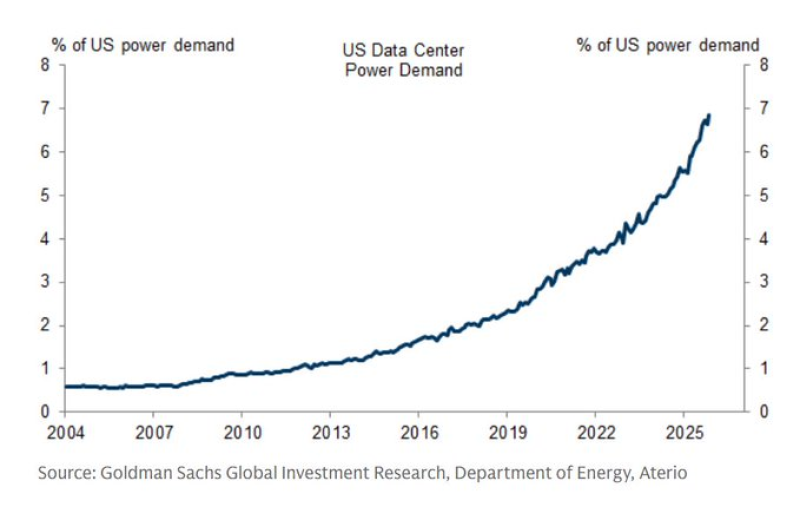

⬤ America's data centers are guzzling electricity at unprecedented rates, and the numbers tell a stark story. Fresh projections show that power consumption linked to AI infrastructure is not just growing; it is accelerating fast. What started as a gradual climb over the past decade has turned into a sharp spike since 2020, with data centers now claiming a much larger slice of the nation's total electricity use.

⬤ The shift is forcing a fundamental rethink of what limits AI growth. For years, the conversation centered on computing power: faster chips, better processors, more advanced hardware. But as one industry observer put it, "The limiting factor may shift away from computing hardware and toward available energy capacity." That's a game-changer. Building massive AI clusters no longer requires only cutting-edge technology; it demands reliable, continuous power that the grid may struggle to deliver.

⬤ This isn't just a technical problem; it's becoming a political one. Higher electricity consumption is already pushing up electricity prices in some areas, and protests over rising costs have started popping up. Communities are waking up to the fact that AI's appetite for power has real-world consequences for their wallets. The backlash suggests that scaling AI further will require navigating public opinion, not just engineering challenges.

⬤ Looking ahead, AI's progress may depend less on smarter algorithms and more on energy innovation. Without breakthroughs in energy production or gains in efficiency, even the most powerful models could hit a wall. Grid capacity, renewable sources, and infrastructure investment are now just as critical to AI's future as the technology itself. The race to build smarter machines may ultimately be won or lost in power plants, not laboratories.

Alex Dudov