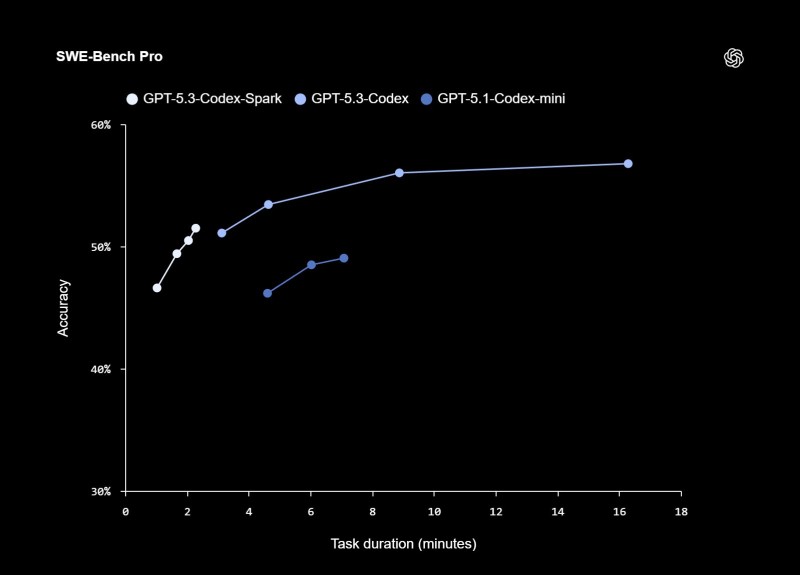

OpenAI has not published specifications for its latest model, but performance data has prompted community estimates of the true scale of GPT-5.3-Codex-Spark, and the numbers are massive.

GPT-5.3-Codex-Spark Performance Analysis Reveals Massive Scale

GPT-5.3-Codex-Spark represents the first OpenAI model where the community has attempted serious parameter estimation based on real-world performance metrics.

The preliminary analysis points to approximately 30 billion active parameters, while the total parameter count could range anywhere from 300 billion to a staggering 700 billion.

How Researchers Estimated the 700B Parameter Count

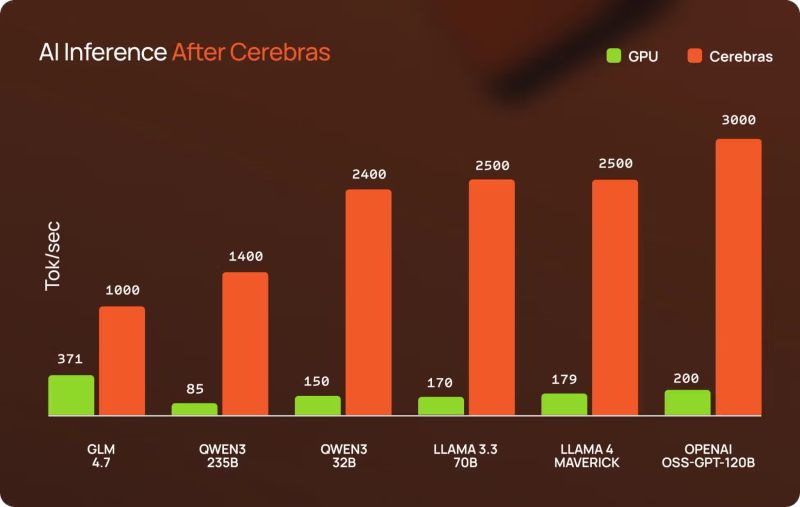

The estimation method relies on Cerebras inference throughput benchmarks. Performance comparisons show GLM-4.7 hitting around 1000 tokens per second, while Qwen3-235B reaches roughly 1400 tokens per second. Meanwhile, GPT-OSS-120B pushes closer to 3000 tokens per second. GPT-5.3-Codex-Spark reportedly delivers "over 1000 tokens/s," positioning it near the lower end of this spectrum.
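As a rough illustration of how a throughput comparison can be turned into a size estimate, the sketch below scales a reference model's active parameters by the ratio of throughputs. It rests on two assumptions that do not come from the community analysis itself: that decode speed on the same hardware is roughly inversely proportional to active parameters, and that Qwen3-235B activates about 22 billion parameters per token (taken from its full name, Qwen3-235B-A22B). The function name `estimate_active_params` is hypothetical.

```python
def estimate_active_params(ref_active_b: float, ref_tps: float, target_tps: float) -> float:
    """Scale a reference model's active parameters (in billions) by the throughput ratio.

    Assumption: on identical hardware, tokens/s is inversely proportional to
    the number of active parameters. Real systems deviate from this.
    """
    if ref_tps <= 0 or target_tps <= 0:
        raise ValueError("throughput values must be positive")
    return ref_active_b * ref_tps / target_tps


# Throughput figures as quoted in the article (tokens per second).
QWEN3_TPS = 1400.0
SPARK_TPS = 1000.0  # reported as "over 1000 tokens/s", so this is a floor

# Assumption: 22B active parameters, from the model name Qwen3-235B-A22B.
QWEN3_ACTIVE_B = 22.0

estimate = estimate_active_params(QWEN3_ACTIVE_B, QWEN3_TPS, SPARK_TPS)
print(f"Estimated active parameters: ~{estimate:.1f}B")
```

Under these assumptions the result lands near 30.8 billion, in line with the roughly 30 billion active parameters cited above. Because the reported speed is "over" 1000 tokens/s, the figure is better read as an upper bound than as a point estimate.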

This placement points to a large model: because slower generation generally means more parameters are used per token, throughput near the bottom of the range is consistent with a total size toward the higher end of the 300-700 billion parameter estimate. The reasoning assumes sparse activation, a technique in which only part of a massive model is active for any given input, similar to what we've seen in other large-scale systems.
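To make the sparse-activation claim concrete, the short calculation below shows what share of the weights would be active at once if the roughly 30 billion active parameters sit inside a model of 300 or 700 billion total parameters. It uses only the figures quoted in this article; the helper name `active_fraction` is hypothetical, and the output is arithmetic on estimates, not a confirmed specification.

```python
ACTIVE_B = 30.0                  # estimated active parameters, in billions
TOTAL_RANGE_B = (300.0, 700.0)   # estimated total parameters, in billions


def active_fraction(active_b: float, total_b: float) -> float:
    """Return the fraction of total parameters that is active at once."""
    if total_b <= 0 or not 0 <= active_b <= total_b:
        raise ValueError("expected 0 <= active_b <= total_b and total_b > 0")
    return active_b / total_b


fractions = {total: active_fraction(ACTIVE_B, total) for total in TOTAL_RANGE_B}
for total, frac in fractions.items():
    print(f"{total:.0f}B total -> {frac:.1%} of weights active")
```

At the low end of the range about one weight in ten would be active at a time; at the high end the share falls below one in twenty, which is why a model this large could still generate at over 1000 tokens per second.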

Understanding the Uncertainty Around Parameter Estimates

It's worth emphasizing that these figures represent educated guesswork from the AI research community rather than confirmed specifications from OpenAI. The company hasn't released official parameter counts, and throughput-based estimation involves considerable uncertainty. Different architectural choices, optimization strategies, and hardware configurations can all skew the relationship between model size and inference speed.

Still, the community-driven analysis offers a fascinating glimpse into what might be powering one of OpenAI's newest releases. Whether GPT-5.3-Codex-Spark ultimately proves closer to 300 billion or 700 billion parameters, the estimates point to another significant step in AI scaling, though its exact specifications remain unconfirmed.

Usman Salis
