⬤ MiniMax has released M2.5, an open-source frontier AI model built for real-world productivity. The announcement reports benchmark results across coding, search, and agentic tool-calling workloads.

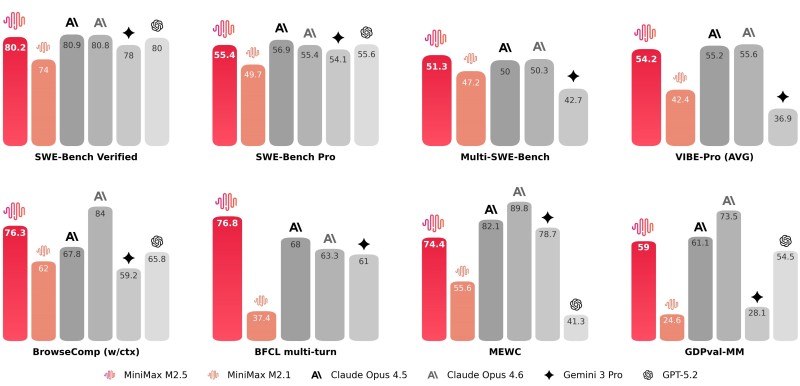

⬤ The model scored 80.2% on SWE-Bench Verified for coding tasks, 76.3% on the BrowseComp search evaluation, and 76.8% on the BFCL multi-turn tool-calling benchmark. A comparison chart released alongside the announcement positions M2.5 among leading AI systems across multiple evaluation categories.

⬤ The system is optimized for efficient execution and handles complex tasks 37% faster than previous iterations. "M2.5 is designed to deliver real-world productivity through open-source accessibility and execution speed," the announcement stated.

⬤ The model operates at roughly 100 tokens per second and is priced at approximately $1 per hour, making it accessible for developers and teams working on continuous agent workflows.
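
To put those two figures in more familiar terms, the short Python sketch below converts them into an approximate cost per million tokens. It is a back-of-the-envelope calculation, not official pricing: it assumes the model generates continuously at the quoted rate for the full hour, and the constant and function names are illustrative rather than taken from MiniMax.

```python
# Rough conversion of the quoted figures into a per-token cost.
# Assumption: the model generates continuously at the quoted rate
# for the whole hour. Both inputs are approximations from the announcement.

TOKENS_PER_SECOND = 100   # quoted throughput (approximate)
USD_PER_HOUR = 1.0        # quoted price (approximate)
SECONDS_PER_HOUR = 3600


def tokens_per_hour(tokens_per_second: float) -> float:
    """Tokens produced in one hour of uninterrupted generation."""
    return tokens_per_second * SECONDS_PER_HOUR


def usd_per_million_tokens(tokens_per_second: float, usd_per_hour: float) -> float:
    """Implied cost of one million tokens at the given rate and hourly price."""
    return usd_per_hour / tokens_per_hour(tokens_per_second) * 1_000_000


if __name__ == "__main__":
    hourly = tokens_per_hour(TOKENS_PER_SECOND)
    cost = usd_per_million_tokens(TOKENS_PER_SECOND, USD_PER_HOUR)
    print(f"Tokens per hour: {hourly:,.0f}")
    print(f"Implied cost per million tokens: ${cost:.2f}")
```

Under these assumptions the quoted figures work out to about 360,000 tokens per hour, or roughly $2.78 per million tokens. Actual costs depend on how MiniMax meters usage, which the announcement as summarized here does not specify.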

⬤ Taken together, the benchmark results point to strength in practical AI applications: SWE-Bench Verified measures coding, while BrowseComp and BFCL measure search and tool calling. These metrics suggest the model can handle the multi-step reasoning and interaction tasks that autonomous AI agents depend on.

⬤ The open-source release strategy positions M2.5 as a frontier model accessible to developers without enterprise-level budgets. By combining benchmark performance with execution efficiency and competitive pricing, MiniMax aims to support productivity-focused AI deployments across various use cases.

⬤ The release comes as demand grows for AI models capable of performing complex, multi-turn tasks in coding, research, and automation. M2.5's combination of speed, accuracy, and affordability may appeal to teams building AI-powered tools and workflows.

⬤ More details about M2.5, including documentation and access information, are expected to be available through MiniMax's official channels.

Eseandre Mordi
