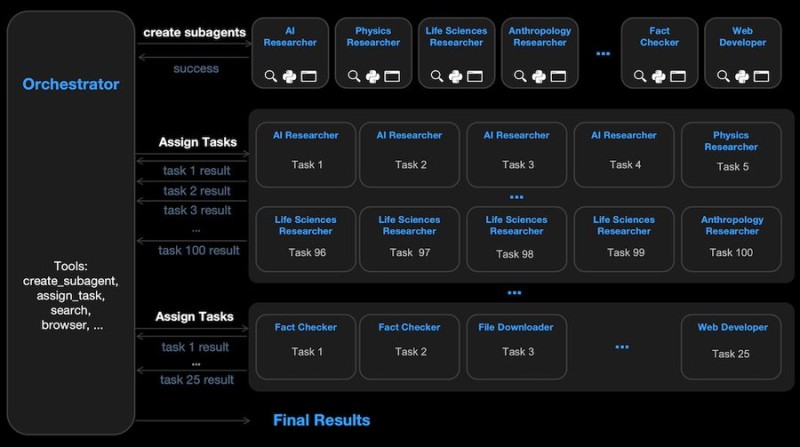

⬤ Moonshot AI rolled out its Kimi K2.5 vision-language model, and it's built to run parallel workflows across multiple specialized agents. Instead of tackling one thing at a time, the system splits work into subtasks such as coding, research, web browsing, and fact-checking, then pulls everything together into one coherent answer. The whole setup works through an orchestrator that hands out jobs to different sub-agents and collects what they produce.

⬤ Here's how it actually works: the model decides dynamically when to assign or reassign tasks rather than just grinding through requests one after another. Each agent sticks to what it does best while the orchestrator weaves all the results into a single response. The announcement claims the model tops open-weights benchmarks, especially in multi-step reasoning scenarios.
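To make the orchestrator idea concrete, here is a minimal sketch of the fan-out and fan-in pattern described above, written with Python's `asyncio`. It is not Moonshot AI's implementation or API: the sub-agent functions, the `SUB_AGENTS` registry, and the `orchestrate` and `run` helpers are all hypothetical names, and each "agent" just returns a placeholder string where a real system would call a model or a tool.

```python
import asyncio

# Hypothetical sub-agents: each stands in for a specialized worker.
# A real system would call a model or tool here instead of sleeping.
async def coding_agent(task: str) -> str:
    await asyncio.sleep(0.01)
    return f"[coding] draft for: {task}"

async def research_agent(task: str) -> str:
    await asyncio.sleep(0.01)
    return f"[research] notes on: {task}"

async def fact_check_agent(task: str) -> str:
    await asyncio.sleep(0.01)
    return f"[fact_check] review of: {task}"

# Hypothetical registry mapping a role name to its sub-agent.
SUB_AGENTS = {
    "coding": coding_agent,
    "research": research_agent,
    "fact_check": fact_check_agent,
}

async def orchestrate(task: str, roles: list[str]) -> str:
    # Fan out: start every requested sub-agent concurrently.
    results = await asyncio.gather(*(SUB_AGENTS[role](task) for role in roles))
    # Fan in: gather() preserves the order of `roles`, so the merge is deterministic.
    return "\n".join(results)

def run(task: str, roles: list[str]) -> str:
    # Synchronous entry point for callers that are not already in an event loop.
    return asyncio.run(orchestrate(task, roles))

if __name__ == "__main__":
    print(run("compare two sorting libraries", ["research", "coding", "fact_check"]))
```

Because the sub-agents run concurrently, total latency in this sketch tracks the slowest agent rather than the sum of all of them, which is the speed-up the parallel design is aiming for. Deciding at run time which roles a task needs, as the announcement describes, is left out here: the caller passes the roles explicitly.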

⬤ This design marks a real shift toward task-level automation where AI systems handle complicated processes on their own. Instead of spitting out answers in a straight line, the model runs multiple activities side by side to get things done faster. Want more details on how it's performing? See "Kimi K2.5 leads usage rankings" for adoption and usage numbers.

⬤ Why does this matter? Multi-agent orchestration opens up fresh ways to tackle complex digital workflows. When systems can coordinate independent processes like this, it changes what we might expect from future software and automated productivity tools. We're looking at AI that doesn't just respond but actually manages entire workflows internally, and that's a different ballgame altogether.

Peter Smith
