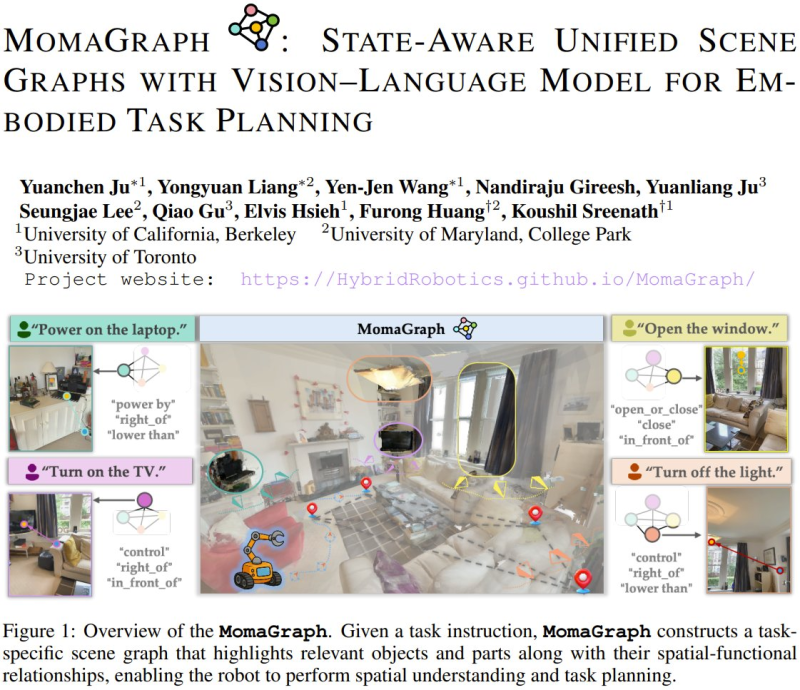

⬤ A team from UC Berkeley, the University of Maryland, and the University of Toronto has introduced MomaGraph, an AI model designed to improve how robots perceive and interact with their surroundings. Rather than treating a room as a collection of unrelated objects, the system builds a unified, task-oriented map of how objects connect and which actions a robot can take on them.

⬤ What sets MomaGraph apart is its use of state-aware scene graphs. The model does more than detect objects: it infers how they relate to one another, identifies which of their parts can be manipulated, and tracks whether things are open, closed, on, or off. In effect, it combines visual information, spatial relationships, and possible actions into a single, coherent picture of the environment.
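⬤ To make the idea of a state-aware scene graph more concrete, here is a minimal, hypothetical sketch in Python. It is not MomaGraph's actual representation: the `Node` and `SceneGraph` classes, the relation names (`opens`, `switches`, `on_top_of`), and the toy rules in `actions()` are all illustrative assumptions. What it shows is the general pattern the paragraph above describes: objects and their manipulable parts as nodes, each carrying a state, linked by spatial and functional edges, so that the set of available actions follows from the current states.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    """An object or a manipulable part, with an optional state (hypothetical schema)."""
    name: str
    kind: str                     # "object" or "part" (illustrative labels)
    state: Optional[str] = None   # e.g. "open", "closed", "on", "off"


@dataclass
class SceneGraph:
    nodes: dict[str, Node] = field(default_factory=dict)
    # (source, relation, target) triples; relation names are illustrative only
    edges: list[tuple[str, str, str]] = field(default_factory=list)

    def add(self, node: Node) -> None:
        self.nodes[node.name] = node

    def relate(self, src: str, relation: str, dst: str) -> None:
        self.edges.append((src, relation, dst))

    def related(self, src: str, relation: str) -> list[str]:
        """Return every target linked from `src` by `relation`."""
        return [d for s, r, d in self.edges if s == src and r == relation]

    def actions(self) -> list[tuple[str, str]]:
        """List (action, target) pairs allowed by current states (toy rules)."""
        result = []
        for part in (n for n in self.nodes.values() if n.kind == "part"):
            # A part that opens something is only useful if the target is closed.
            for target in self.related(part.name, "opens"):
                if self.nodes[target].state == "closed":
                    result.append((f"pull {part.name}", target))
            # A switch flips its target to the opposite power state.
            for target in self.related(part.name, "switches"):
                state = self.nodes[target].state
                if state in ("on", "off"):
                    flip = "off" if state == "on" else "on"
                    result.append((f"turn {flip} via {part.name}", target))
        return result


# Hypothetical kitchen scene: a closed fridge, a lamp that is off, a cup.
g = SceneGraph()
g.add(Node("fridge", "object", state="closed"))
g.add(Node("fridge_handle", "part"))
g.add(Node("lamp", "object", state="off"))
g.add(Node("lamp_switch", "part"))
g.add(Node("cup", "object"))
g.relate("fridge_handle", "part_of", "fridge")
g.relate("fridge_handle", "opens", "fridge")
g.relate("lamp_switch", "switches", "lamp")
g.relate("cup", "on_top_of", "fridge")

print(g.actions())
# → [('pull fridge_handle', 'fridge'), ('turn on via lamp_switch', 'lamp')]
```

Because the state lives on the nodes, updating a single field (say, marking the fridge as open) removes "pull fridge_handle" from the available actions without rebuilding the graph, which is the kind of state tracking a task planner needs.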

⬤ On a new benchmark designed specifically for robot task planning, MomaGraph reached 71.6% accuracy, 11.4 percentage points ahead of the next best open-source model. Just as important, the system has been run on physical robots in real-world settings, not only in computer simulations.

⬤ The work matters because getting robots to reliably understand their surroundings remains one of the hardest problems in AI. Better scene understanding and task planning would let robots operate more independently in cluttered, unpredictable spaces. As vision-language models continue to improve, systems like MomaGraph could open up new uses for robots in homes, factories, and service industries.

Alex Dudov