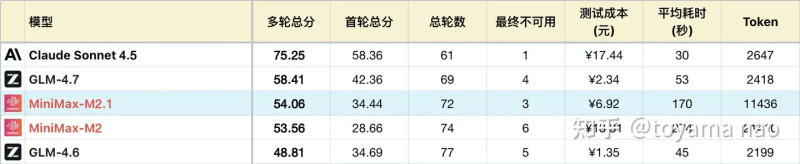

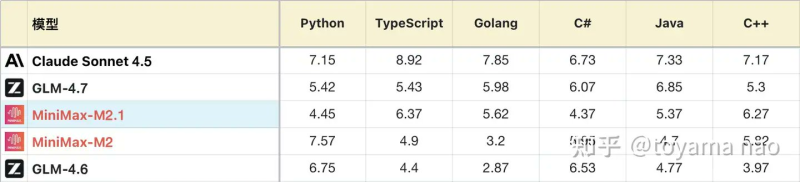

⬤ MiniMax just dropped M2.1, its new multilingual AI model, and it's already making waves in the coding world. The rollout was bumpy: routing problems, deployment bugs, and several rounds of retesting came before results settled down. What emerged is a model that has taken a step back from its predecessor, M2, in logical reasoning but made serious gains in coding, particularly in frontend and app development.

⬤ The model has gotten noticeably better at following instructions and stays more consistent when you give it multiple constraints at once, such as specific formatting requirements or strict word limits. Coding quality has improved too, with project organization closer to what you'd expect from professional engineering standards. One-shot code generation is better, though there's a catch: M2.1 struggles to fix its own mistakes, so you'll often need to step in and debug manually. On general reasoning tasks it performs about as well as M2 but tends to use fewer tokens, so it reaches answers more efficiently.

⬤ But it's not all progress. Math performance has declined, especially on problems that require deeper reasoning or derivations. Spatial intelligence took a bigger hit: M2.1 can barely handle 2D and 3D reasoning tasks that M2 managed without breaking a sweat. The model still hallucinates at rates similar to its predecessor, and its accuracy drops off as contexts get longer.

⬤ It looks like MiniMax pushed this release out quickly to stay competitive in the AI race. Even with its weaknesses, M2.1 marks a clear shift toward prioritizing coding and engineering capabilities. As more AI models duke it out in the software development space, the improvements in M2.1 show just how fast the bar keeps rising for what we expect from coding-focused AI.

Peter Smith
