● MiniMax just dropped a bombshell: the company has released MiniMax M2, an open-source AI model built specifically for coding and autonomous agents. According to MiniMax, M2 goes toe-to-toe with heavyweights like Claude Sonnet 4.5, GPT-5, and Gemini 2.5 Pro, while running about twice as fast and costing just 8% of the price of Claude Sonnet. It is also free worldwide for a limited time through MiniMax's Agent and API platform.

● This is a bold play. By open-sourcing M2, MiniMax is betting they can shake up an AI market dominated by expensive, closed models. It could drive massive adoption among developers and companies looking for powerful AI without the premium price tag. But there are risks too — they might cannibalize their own revenue, and competitors could quickly copy their approach. Plus, by showing off breakthrough efficiency tech, they're likely to attract even more competition for top AI talent.

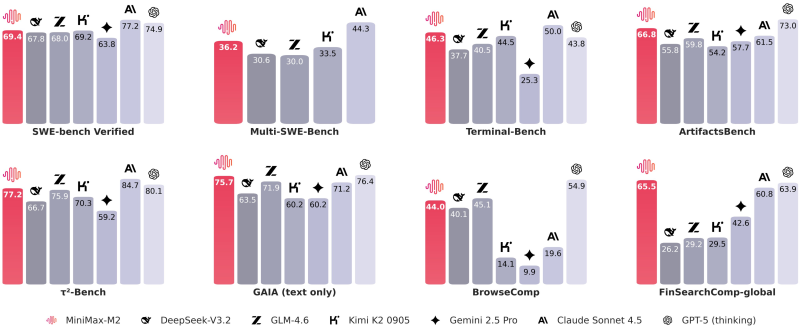

● Performance-wise, M2 posts strong numbers. MiniMax reports scores of 69.4 on SWE-Bench Verified, 77.2 on τ²-Bench, and 65.5 on FinSearchComp-global, results that by the company's account match or beat Claude and GPT-5 across coding, agentic, and financial tasks. For developers, that means enterprise-level AI performance without the enterprise-level costs or compute demands.

● M2 fits into a bigger trend: the rise of open, modular AI systems that can handle complex tasks on their own. This launch could change how companies think about AI licensing, infrastructure spending, and even regulatory strategy, as open models start matching what the big labs offer.

● As MiniMax (official) put it: "We're open-sourcing MiniMax M2 — Agent & Code Native, at 8% Claude Sonnet price and ~2x faster." With those numbers and that accessibility, M2 might just reset expectations for what affordable, high-performance AI looks like — and how developers and businesses actually use it.

Peter Smith
