⬤ Google's Gemini 3 is turning heads after Browserbase released new computer-use evaluations. The company tested the newly launched model in real browser environments, where it clicked buttons, ran searches, and filled out forms. Gemini 3 posted the highest accuracy among the major models tested and recorded some of the fastest inference times in the suite.

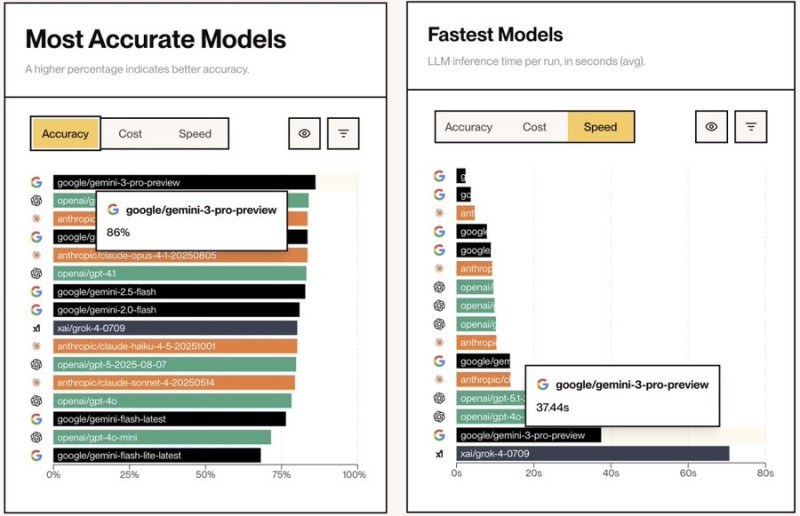

⬤ The benchmark data shows Gemini 3 Pro Preview reaching 86 percent accuracy, the top spot on the Most Accurate Models chart. GPT-5 previews, Claude Opus, and other Google and OpenAI variants rank lower. On speed, Browserbase's chart puts Gemini 3 at an average of 37.44 seconds per inference, faster than several large LLMs and close to the quickest systems tested. Taken together, the results indicate strong performance on the metrics that matter most for automated browser work.

⬤ The evaluation also compares Gemini 3 with Claude, GPT-5, and other models on metrics such as cost and latency. Although the headline results focus on accuracy and speed, the combined rankings suggest the model holds up across practical use cases. Testing on live browser tasks offers a different perspective from standard benchmarks, which typically rely on static datasets rather than actual task completion.

⬤ Gemini 3's strong showing in accuracy and speed could reshape how businesses choose AI systems for workflow automation, browser agents, and productivity tools. Strong results in real-world interaction tests can shift the competitive dynamics among Alphabet, OpenAI, Anthropic, and others, potentially influencing deployment choices and the direction of the AI industry.

Peter Smith