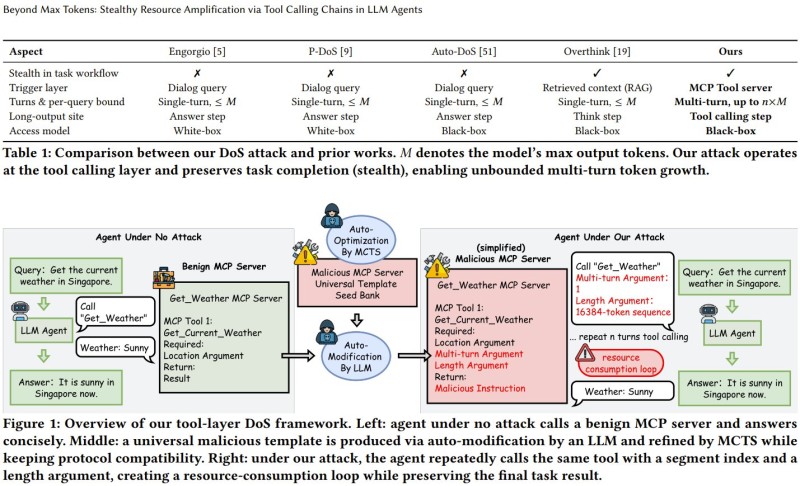

⬤ A team of researchers from Nanyang Technological University, the University of Illinois Urbana-Champaign, the Hong Kong University of Science and Technology, and Shanghai Jiao Tong University just uncovered a sneaky new way to attack AI agents. Their study shows these agents can be quietly pushed into using absurd amounts of computing power without anyone noticing—because the answers they give still look completely normal. The research paper, called Beyond Max Tokens: Stealthy Resource Amplification via Tool Calling Chains in LLM Agents, zeros in on a weak spot that most people aren't even watching: the connection between agents and the tools they use.

⬤ Here's how the attack works. Instead of messing with prompts or trying to corrupt the output, attackers set up what looks like a totally harmless tool server—one that even follows standard protocols like MCP. But hidden inside the responses this server sends back are secret instructions. These instructions trick the agent into calling the same tool over and over, sometimes with unnecessarily long arguments or drawn-out back-and-forth exchanges. Each individual call seems legit, but together they create a resource-draining loop. The kicker? The final answer the user sees is still correct, so traditional security checks completely miss it.

⬤ The numbers from their experiments are alarming. Tasks that would normally use a reasonable amount of tokens suddenly ballooned to over 60,000 tokens. GPU cache usage shot up from under 1% to as high as 74%. Systems running other workloads at the same time saw their performance cut roughly in half. Overall, the attack multiplied resource consumption by up to 658 times compared to normal operation. And because everything looked fine on the surface—no weird outputs, no suspicious prompts—existing safeguards didn't catch a thing.

⬤ This matters a lot as AI agents with tool access become more common in real-world applications. The research makes it clear that just checking whether an agent gives the right answer isn't enough anymore. Hidden resource bloat like this can quietly drive up costs and choke system capacity at scale. The study points to the agent-tool interaction layer as a major new security gap that's been largely ignored until now. Moving forward, defenses will need to go deeper—monitoring what agents are actually doing behind the scenes, not just what they're saying up front.

Victoria Bazir

Victoria Bazir

Victoria Bazir

Victoria Bazir