⬤ AI agents aren't just helping anymore: they're running the show. They're writing code, testing it, and pushing it live without human intervention. According to The Hacker News, the biggest danger isn't the AI itself. It's who gets to decide what the agent can access and control. As these tools become standard in business workflows, figuring out how to limit their reach is essential to avoiding disasters.

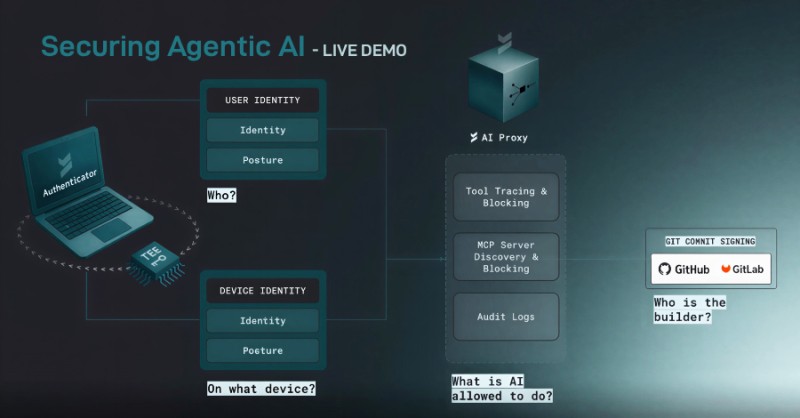

⬤ A recent webinar dug into the messy reality of keeping AI agents secure. Experts talked through practical ways to manage permissions for the Model Context Protocol (MCP), which connects agents to external tools and data, and to set up access controls that actually work. The big takeaway? Security can't come at the cost of speed. AI agents are handling more complicated tasks every day, so the boundaries need to be clear and enforceable without killing productivity.

⬤ The discussion centered on building access controls that keep AI agents on a tight leash. Even when they're running autonomously, they need to stay within predefined limits. The webinar also covered using audit logs and continuous monitoring to track every action, making sure nothing slips through the cracks.
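⬤ To make "predefined limits" and "audit logs" concrete, here is a minimal Python sketch of a deny-by-default gate that sits in front of an agent's tool calls. It assumes each agent has an allowlist of tools, that some tools (like deploying) additionally require human approval, and that every decision, allowed or denied, is appended to an audit log. The class names, tool names, and policy shape are hypothetical illustrations, not MCP's API or the webinar's specific method.

```python
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AgentPolicy:
    """Hypothetical per-agent policy: the tools an agent may call,
    and the subset that also needs a human sign-off."""
    agent_id: str
    allowed_tools: set[str]
    needs_approval: set[str] = field(default_factory=set)


@dataclass
class ToolGate:
    """Hypothetical gate that checks every tool call against a policy."""
    policies: dict[str, AgentPolicy]
    audit_log: list[dict] = field(default_factory=list)

    def check(self, agent_id: str, tool: str, approved: bool = False) -> bool:
        """Return True if the call may proceed; log every decision."""
        policy = self.policies.get(agent_id)
        if policy is None:
            decision, reason = False, "unknown agent"  # unregistered agents get nothing
        elif tool not in policy.allowed_tools:
            decision, reason = False, "tool not in allowlist"
        elif tool in policy.needs_approval and not approved:
            decision, reason = False, "human approval required"
        else:
            decision, reason = True, "allowed"
        # Append-only record so every action can be reviewed later.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "agent": agent_id,
            "tool": tool,
            "allowed": decision,
            "reason": reason,
        })
        return decision


# Usage: a CI agent may run tests freely, but deploys need approval.
gate = ToolGate({
    "ci-agent": AgentPolicy("ci-agent", {"run_tests", "deploy"},
                            needs_approval={"deploy"}),
})
gate.check("ci-agent", "run_tests")              # True
gate.check("ci-agent", "deploy")                 # False: approval required
gate.check("ci-agent", "deploy", approved=True)  # True
gate.check("ci-agent", "drop_table")             # False: not in allowlist
print(json.dumps(gate.audit_log, indent=2))
```

Denying by default means a new or misconfigured agent gets no access until someone grants it, and the audit log captures refused attempts as well as successful ones, which is often where problems first show up.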

⬤ As AI gets more sophisticated, companies are scrambling to secure these systems before something goes wrong. The trick is finding the sweet spot between giving AI agents enough freedom to be useful and locking down permissions tight enough to prevent damage. Expect more frameworks and tools focused on safe autonomous AI operations as adoption keeps climbing.

Peter Smith