⬤ A wave of prominent AI papers published this week reveals significant progress in efficient agents and test-time discovery methods. Rather than focusing solely on training improvements, researchers are now optimizing how AI systems reason and perform during actual inference. Benchmark results from featured studies show measurable performance gains across mathematics, kernel engineering, algorithms, and biological denoising tasks.

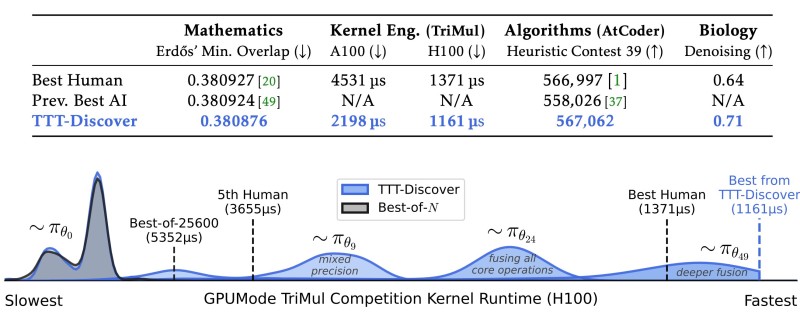

⬤ The TTT-Discover method delivered the most striking results, outperforming both human experts and previous AI approaches across multiple tests. In kernel engineering on H100 GPUs, it cut execution time to 1,161 microseconds, compared with 1,371 microseconds for the best human result. On A100 hardware it reached 2,198 microseconds, indicating that the efficiency gains carry over to a different GPU platform. The method also posted higher scores on algorithmic benchmarks and improved biological denoising outcomes.
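
To put the H100 figures in perspective, the short sketch below converts the two runtimes quoted above into a percentage reduction and a speedup factor. The helper name `runtime_reduction` is hypothetical and only for illustration. The A100 number is left out because the article gives no human baseline for it.

```python
def runtime_reduction(baseline_us: float, new_us: float) -> tuple[float, float]:
    """Return (percent reduction in runtime, speedup factor) for two runtimes."""
    reduction = (baseline_us - new_us) / baseline_us * 100  # share of baseline time saved
    speedup = baseline_us / new_us                          # how many times faster
    return reduction, speedup

# H100 kernel runtimes from the article, in microseconds.
reduction, speedup = runtime_reduction(1371, 1161)
print(f"{reduction:.1f}% lower runtime, {speedup:.2f}x speedup")
# prints "15.3% lower runtime, 1.18x speedup"
```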

⬤ The runtime distributions show test-time discovery shifting kernels toward faster execution through deeper operation fusion and smarter use of mixed precision. Compared with best-of-N sampling and manually tuned kernels, TTT-Discover consistently lands at the fastest end of the range. These findings echo broader industry themes: efficient agents, better memory management for complex tasks, and multi-agent systems redesigned for scalability.
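
For readers unfamiliar with the best-of-N baseline mentioned above, here is a minimal sketch of the idea: draw N independent candidates and keep the fastest, so no attempt benefits from what earlier attempts found. The function `toy_kernel_runtime` is a hypothetical stand-in for generating and benchmarking a real kernel, and its runtime range is made up. This illustrates only the baseline, not TTT-Discover's own algorithm.

```python
import random
from typing import Callable


def best_of_n(sample_runtime: Callable[[random.Random], float], n: int, seed: int = 0) -> float:
    """Best-of-N baseline: sample n independent candidates and return the lowest runtime."""
    rng = random.Random(seed)
    # Each candidate is drawn independently; nothing learned from one draw guides the next.
    return min(sample_runtime(rng) for _ in range(n))


def toy_kernel_runtime(rng: random.Random) -> float:
    # Hypothetical placeholder for "generate a kernel and time it", in microseconds.
    return rng.uniform(1100.0, 1600.0)


few = best_of_n(toy_kernel_runtime, n=8)
many = best_of_n(toy_kernel_runtime, n=64)
print(f"best of 8: {few:.0f} us, best of 64: {many:.0f} us")
```

Because both runs use the same seed, the 64-sample run contains the same first 8 draws, so it can only match or beat the 8-sample result. Larger N helps, but only by drawing more blind samples from the same distribution.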

⬤ These advances matter because algorithmic efficiency directly affects computational costs. Faster kernels and stronger reasoning systems cut runtime expenses and expand what AI can practically accomplish in research and commercial settings. As workloads continue to scale, innovations in test-time optimization show that system-level improvements are just as critical as building bigger models.

Saad Ullah