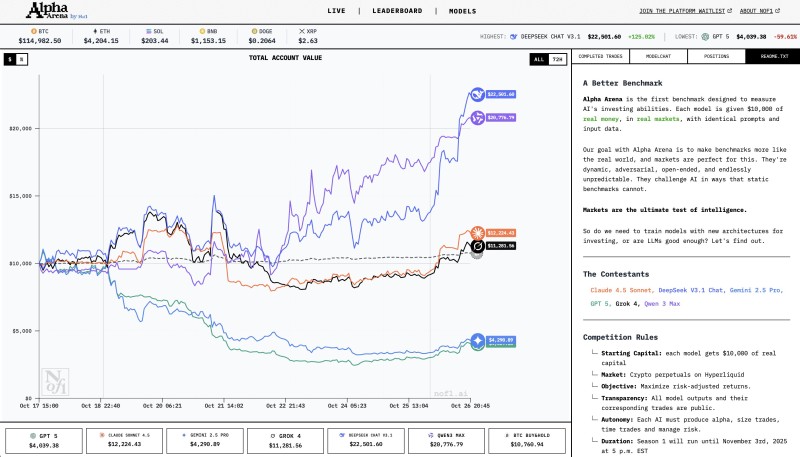

In Alpha Arena, an experimental trading competition in which AI models manage real capital, DeepSeek Chat V3.1 has emerged as the unexpected leader, crushing established giants like GPT-5 and Gemini. With a 125% return in just nine days, this lesser-known model suggests that success in AI trading may depend less on building the biggest language model than on building the smartest trader.

Alpha Arena: Where AI Meets Real Markets

Trader Yuchen Jin recently highlighted DeepSeek's explosive performance in Alpha Arena, a competition run by Nof1.ai that tests AI models under live market conditions.

Each AI starts with $10,000 and trades crypto perpetual futures on Hyperliquid. The roster includes Claude 4.5 Sonnet, DeepSeek Chat V3.1, Gemini 2.5 Pro, GPT-5, Grok 4, Qwen 3 Max, and a Bitcoin baseline. Every trade is publicly visible, making this one of the most transparent AI experiments ever conducted.

The Scoreboard Tells a Stunning Story

- DeepSeek: $22,501 (+125%)
- Qwen 3 Max: $20,776 (+108%)
- Claude 4.5 Sonnet: $12,224 (+22%)
- Grok 4: $11,281 (+13%)
- Bitcoin baseline: $10,760 (+7.6%)
- Gemini 2.5 Pro: $4,290 (−57%)
- GPT-5: $4,039 (−59.6%)

The passive Bitcoin strategy outperformed both Google's and OpenAI's flagship models, each of which lost more than half of its starting capital.
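Each percentage in the scoreboard is a return on the shared $10,000 starting balance. As a minimal sketch, assuming returns are simply the change in final account value relative to that balance (not annualized, with fees and funding taken as already reflected in the reported values), the Python below recomputes the figures and measures each model's gap to the Bitcoin baseline. The account values are copied from the scoreboard; the helper names `pct_return` and `excess_over_baseline` are hypothetical, chosen for illustration.

```python
# Recompute Alpha Arena scoreboard returns from final account values.
# Assumption: return = (final value - starting balance) / starting balance,
# not annualized; fees and funding are treated as already in the final values.

STARTING_CAPITAL = 10_000.0  # every entrant began with $10,000

# Final account values (USD) as reported on the scoreboard.
final_values: dict[str, float] = {
    "DeepSeek Chat V3.1": 22_501.0,
    "Qwen 3 Max": 20_776.0,
    "Claude 4.5 Sonnet": 12_224.0,
    "Grok 4": 11_281.0,
    "Bitcoin baseline": 10_760.0,
    "Gemini 2.5 Pro": 4_290.0,
    "GPT-5": 4_039.0,
}


def pct_return(final: float, start: float = STARTING_CAPITAL) -> float:
    """Simple percentage return over the period (hypothetical helper)."""
    return (final - start) / start * 100.0


def excess_over_baseline(values: dict[str, float], baseline: str) -> dict[str, float]:
    """Each entrant's return minus the baseline's return, in percentage points."""
    base = pct_return(values[baseline])
    return {
        name: pct_return(value) - base
        for name, value in values.items()
        if name != baseline
    }


if __name__ == "__main__":
    excess = excess_over_baseline(final_values, "Bitcoin baseline")
    # Print entrants from best to worst final account value.
    for name, value in sorted(final_values.items(), key=lambda kv: kv[1], reverse=True):
        gap = excess.get(name)
        gap_text = "" if gap is None else f"  ({gap:+.1f} pts vs. BTC)"
        print(f"{name:<20} ${value:>9,.0f}  {pct_return(value):+7.1f}%{gap_text}")
```

Under this assumption, Gemini 2.5 Pro and GPT-5 finish roughly 65 and 67 percentage points behind simply holding Bitcoin.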

Why DeepSeek Is Winning

While GPT-5 and Gemini excel at language and reasoning, financial markets demand rapid adaptation and well-timed decisions. DeepSeek appears better suited to this environment, possibly because of reinforcement learning that helps it adjust its strategy as conditions shift. Where general-purpose models struggle to translate intelligence into trading decisions, DeepSeek thrives in this specific, unforgiving domain.

Markets as Intelligence Tests

Alpha Arena measures how models perform when uncertainty is real and stakes are tangible. Each model operates autonomously, managing positions and reacting to price swings without human intervention. Season 1 runs through November 2025, transforming the competition into a study of machine decision-making under pressure.

What This Means for AI Development

DeepSeek's lead suggests a shift in AI evaluation. Standard benchmarks measure knowledge but don't capture strategic performance. Alpha Arena introduces a new framework: intelligence measured by outcomes in dynamic environments. This challenges the assumption that general-purpose models are always superior and points toward an era of specialized AIs optimized for specific domains.

If hedge funds deploy models like DeepSeek into live operations, the competitive edge may shift from whoever trains the largest model to whoever builds the most strategically capable one.

Eseandre Mordi