⬤ A new approach to computer vision is drawing attention in the AI research community. Researchers from Peking University and Tsinghua University have introduced WaveFormer, a vision model that treats image features as signals governed by a wave equation. Rather than relying entirely on attention mechanisms, the architecture explicitly controls how low- and high-frequency information propagates through the network's layers, offering a mathematically grounded alternative to conventional Vision Transformers.

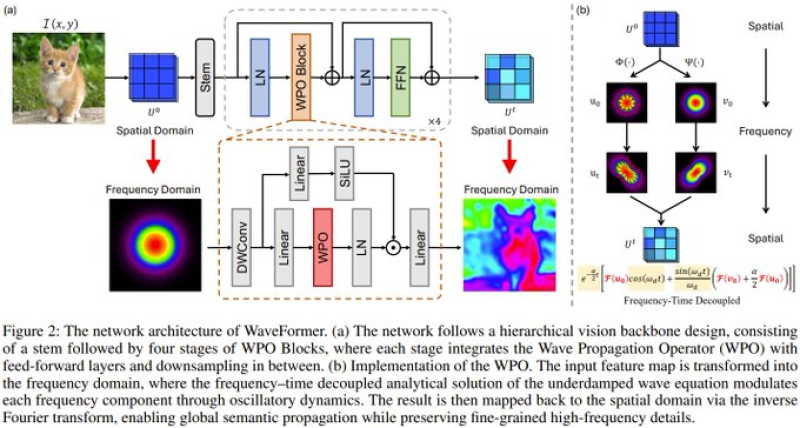

⬤ The architecture diagram shows WaveFormer as a hierarchical vision backbone that begins with a stem and proceeds through a series of Wave Propagation Operator blocks. Inside each block, feature maps are transformed into the frequency domain, where a frequency-time decoupled analytical solution to the wave equation governs how features propagate. The features are then transformed back into the spatial domain. This lets global semantic information spread across the image while fine-grained high-frequency details are preserved. The design departs from standard Vision Transformers, which rely heavily on self-attention to capture long-range spatial dependencies.
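The spatial-to-frequency-to-spatial round trip described above can be illustrated with a textbook case. Under the undamped 2-D wave equation with zero initial velocity and periodic boundaries, each Fourier mode of a feature map evolves independently as cos(c|k|t), so frequency and time separate cleanly. The sketch below is not WaveFormer's actual operator. The function name `wave_propagate`, the wave speed `c`, the propagation time `t`, the zero-initial-velocity assumption, and the periodic boundaries are all hypothetical choices made for illustration.

```python
import numpy as np


def wave_propagate(features: np.ndarray, c: float = 1.0, t: float = 1.0) -> np.ndarray:
    """Toy wave-propagation step for a (C, H, W) feature map (hypothetical, not WaveFormer's code).

    Assumes the undamped wave equation u_tt = c^2 * laplacian(u), zero initial
    velocity, and periodic boundaries, so each Fourier mode evolves as
    u_hat(k, t) = u_hat(k, 0) * cos(c * |k| * t).
    """
    _, h, w = features.shape
    # Angular frequencies (radians per pixel) along each spatial axis.
    ky = 2 * np.pi * np.fft.fftfreq(h)
    kx = 2 * np.pi * np.fft.fftfreq(w)
    # |k| for every point on the (H, W) frequency grid.
    k_mag = np.sqrt(ky[:, None] ** 2 + kx[None, :] ** 2)
    # Spatial domain -> frequency domain, applied per channel over the last two axes.
    spectrum = np.fft.fft2(features, axes=(-2, -1))
    # Closed-form solution: time enters only through cos(c|k|t), one factor per mode.
    spectrum = spectrum * np.cos(c * k_mag * t)
    # Frequency domain -> spatial domain; the multiplier is real and symmetric,
    # so the imaginary part is only floating-point noise for real input.
    return np.fft.ifft2(spectrum, axes=(-2, -1)).real


rng = np.random.default_rng(0)
x = rng.standard_normal((3, 8, 8))
y = wave_propagate(x, c=1.0, t=1.0)
print(y.shape)
```

Because |cos(c|k|t)| never exceeds 1, this toy operator cannot amplify any frequency, and the zero-frequency (mean) component of each channel passes through unchanged. How WaveFormer actually parameterizes its propagation is not reproduced here.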

WaveFormer demonstrates that treating vision features as wave propagation can unlock significant efficiency gains while maintaining accuracy across diverse computer vision tasks.

⬤ The reported results show WaveFormer delivering consistent gains across image classification, object detection, and segmentation. The model achieves up to 1.6 times higher throughput while cutting computational cost by roughly 30 percent compared with standard Vision Transformer setups. These results indicate that WaveFormer improves efficiency without sacrificing accuracy, addressing a pressing issue as vision models grow more complex and resource-hungry.

⬤ This matters for the wider AI ecosystem because model-level efficiency directly impacts deployment costs and scalability. Methods that slash computational demands while boosting throughput could accelerate adoption of advanced vision systems in data centers and edge devices. With visual understanding remaining central to modern AI workflows, WaveFormer shows how alternative mathematical frameworks can work alongside hardware improvements to shape the future of efficient vision model design.

Usman Salis