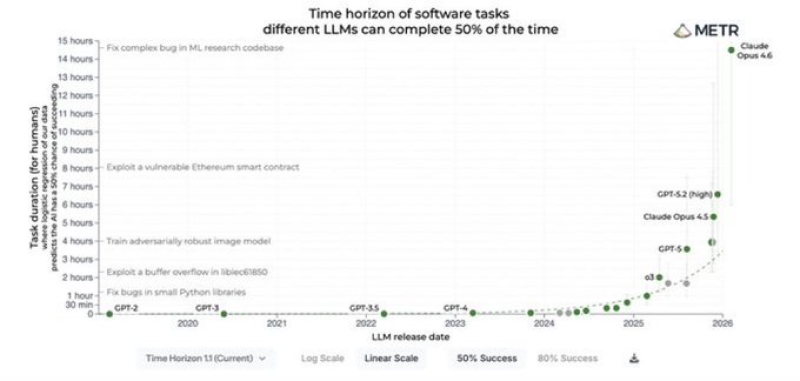

⬤ The narrative that AI progress is plateauing doesn't hold up against the numbers. As Matt Shumer recently pointed out, metrics that track task completion across major model releases tell a very different story. A chart mapping releases from GPT-2 and GPT-3 through GPT-5 and Claude Opus 4.6 shows a clear upward curve in both task complexity and the time horizon these systems can reliably handle.

⬤ Early models could handle only simple tasks lasting well under an hour, and only with modest reliability. Today's systems take on multi-hour challenges, such as fixing bugs in machine learning codebases or exploiting vulnerabilities in complex smart contracts. That's not just better autocomplete; it's sustained technical work. "AI Systems Are Learning to Act, Not Just Predict" captures this shift well: the new generation of models doesn't just generate text, it completes structured workflows with real outcomes.

Models can now complete economically valuable tasks: the kind of work that used to require a human developer sitting at a screen for hours.

⬤ That economic dimension matters. "AI System Generates $10,000 in 7 Hours Through Real Work Tasks" is a vivid example of where this capability is heading. Companies like Apple (AAPL) and Nvidia (NVDA) are already building on this trend, integrating AI into device ecosystems and high-performance compute platforms as the commercial case for action-oriented AI becomes impossible to ignore.

⬤ For the broader tech landscape, the implications are significant. As AI handles more complex tasks in tighter timeframes, the case for embedding these systems into enterprise workflows and software development pipelines gets stronger every quarter. The pace of capability growth is reshaping how companies benchmark technological leadership and decide where to put their AI infrastructure budgets.

Eseandre Mordi