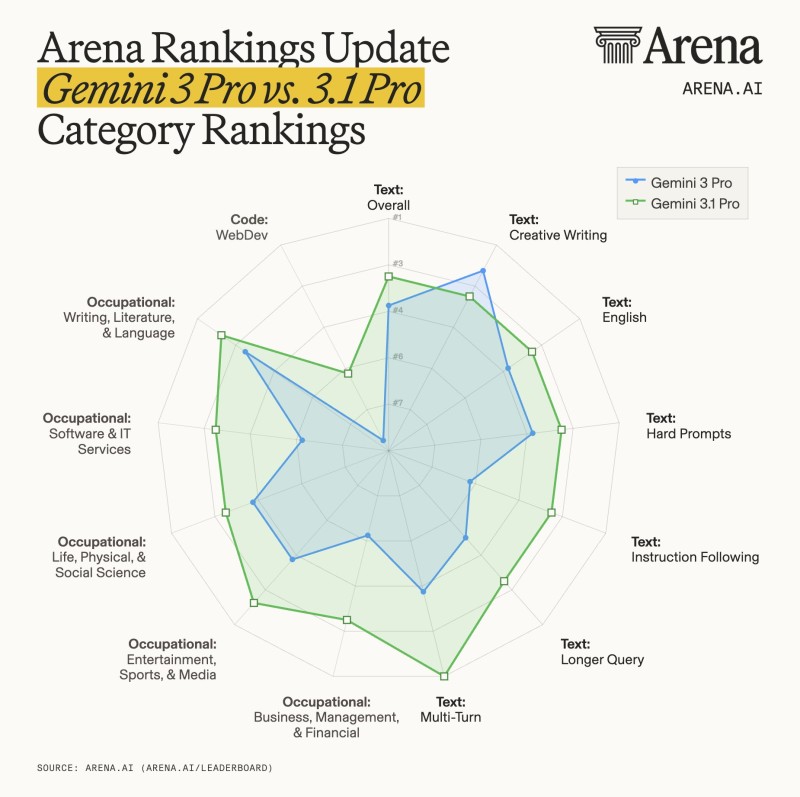

⬤ Alphabet (GOOGL) just got a visible performance boost from its latest model. Gemini 3.1 Pro scores 13 points above its predecessor, Gemini 3 Pro, on the Arena.ai leaderboard, with improvements spread across text, code, and occupational use cases. Arena.ai reports that the gains are broad-based rather than limited to one category. Full details are available in the Gemini 3.1 Pro benchmark breakdown.

⬤ The Arena.ai update tells a clear story on the numbers side. Compared to Gemini 3 Pro, Gemini 3.1 Pro moved up +5 ranks in Coding, +4 in Math, and +3 each in the Expert, Instruction Following, and Multi-Turn categories. More context is available in the Arena.ai ranks comparison.

Shifts like this can influence broader discussions around AI model capability and comparative standing.

⬤ Within coding, Web Development specifically shows a +3 rank gain. On the occupational side, Gemini 3.1 Pro also moved up in Mathematical reasoning, Software & IT Services, and Business, Management & Financial Operations, rounding out a well-distributed improvement profile.

⬤ A 13-point jump in a competitive AI leaderboard is not a small thing. It puts Gemini 3.1 Pro in a stronger position across reasoning, coding, and professional task categories, and it keeps Google in an active conversation about which models are actually pulling ahead in real-world performance benchmarks.

Eseandre Mordi
