The AI industry just saw a different kind of benchmark. Instead of another test of how well artificial intelligence solves puzzles or answers questions, researchers introduced an AI agent that has to earn money to survive. ClawWork, an open-source system, starts with $10 and must complete real work assignments while paying its own operating costs.

How ClawWork's Economic Test Works

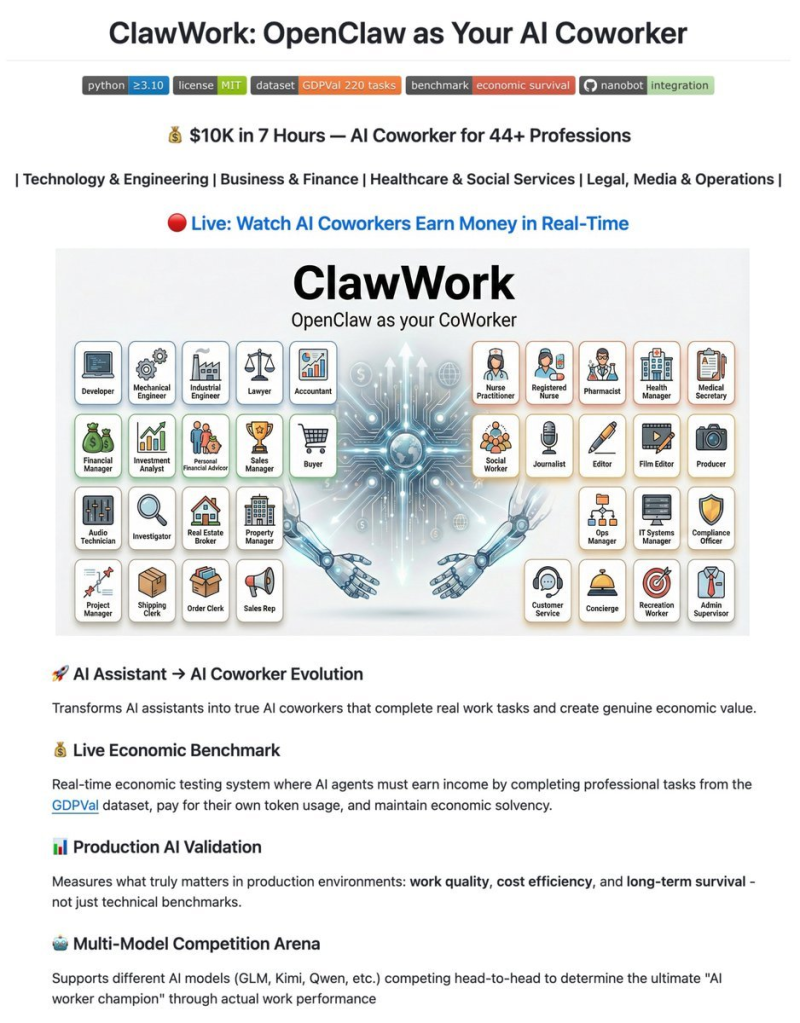

ClawWork tackles real professional work: 220 tasks spanning 44 occupations, including finance reports, healthcare documentation, legal analysis, and engineering projects. This is the kind of work professionals actually get paid for. The AI produces each deliverable from scratch, and GPT-5.2 grades the output against industry-specific standards, similar to the methods outlined in the GPT-5.2 major reasoning benchmark.

Here's where it gets interesting: ClawWork's pay for each task is its quality score multiplied by the task's estimated work hours and the relevant hourly wage from U.S. Bureau of Labor Statistics data. Meanwhile, every API call it makes is deducted from its balance, so poor-quality work or inefficient processing directly hurts its bottom line.
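To make that accounting concrete, here is a minimal Python sketch of the earn-and-spend loop. It is not ClawWork's actual code: the `Task` fields, the `task_payout` and `run_agent` helpers, the 0-to-1 quality scale, and the rule that the agent halts when it cannot cover a task's API cost up front are all hypothetical simplifications.

```python
from dataclasses import dataclass


@dataclass
class Task:
    """Hypothetical task record; field names are illustrative only."""
    quality: float      # grader score, assumed normalized to [0, 1]
    est_hours: float    # estimated professional work hours for the task
    hourly_wage: float  # occupation wage in USD/hour (e.g. from BLS data)
    api_cost: float     # USD spent on API calls to produce the work


def task_payout(task: Task) -> float:
    """Earnings = quality x estimated hours x hourly wage."""
    return task.quality * task.est_hours * task.hourly_wage


def run_agent(tasks, starting_balance: float = 10.0):
    """Work through tasks in order, paying API costs and collecting payouts.

    Assumption: the agent stops ("runs out of money") as soon as its
    balance cannot cover the next task's API cost.
    Returns (final_balance, number_of_completed_tasks).
    """
    balance = starting_balance
    completed = 0
    for task in tasks:
        if balance < task.api_cost:  # cannot afford to run: agent halts
            break
        balance -= task.api_cost     # operating cost is paid first
        balance += task_payout(task) # then quality-weighted pay is earned
        completed += 1
    return balance, completed


if __name__ == "__main__":
    tasks = [Task(0.8, 2.0, 40.0, 1.5), Task(0.3, 1.0, 25.0, 4.0)]
    print(run_agent(tasks))
```

For example, a 2-hour task in a $40/hour occupation graded at 0.8 pays $64, which easily covers a $1.50 API bill. Work graded at zero, by contrast, earns nothing while still consuming the balance, so an agent that keeps producing it eventually cannot afford its next call.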

Real-World Performance and Platform Integration

According to the test results shared in the ClawWork project overview, top-performing models reportedly earned more than $1,500 per hour. The system doesn't work in isolation, either: it can operate as a live coworker through Telegram, Discord, Slack, and WhatsApp. This reflects how AI tools are increasingly embedding themselves in everyday work platforms, though not without complications, as seen in Microsoft Copilot's recent exit from WhatsApp, where platform policies and monetization issues led to integration changes.

Why This Benchmark Matters

Traditional AI tests focus on abstract reasoning and technical scores. ClawWork flips that approach by introducing market discipline. If the AI cannot produce quality work efficiently, it runs out of money and stops functioning. This survival-based framework measures something companies actually care about: economic productivity under real constraints.

The project is fully open source under the MIT license, allowing researchers and developers to examine exactly how economic survival changes AI behavior. As businesses push harder to integrate AI into professional workflows, benchmarks that measure practical contribution rather than theoretical capability may become the new standard for evaluating advanced systems.

This experiment suggests we're moving past the era of pure performance metrics toward evaluating AI the way we evaluate workers: can it deliver value while managing its resources effectively?

Usman Salis