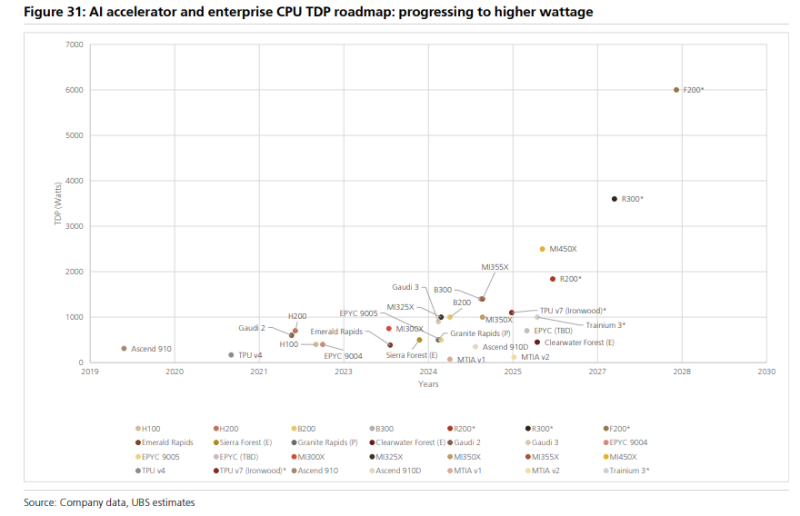

⬤ A recent UBS roadmap has sparked conversations about just how power-hungry AI chips are becoming. The chart tracks thermal design power (TDP) across leading accelerators and enterprise CPUs through the end of the decade, and the trajectory is steep. The most eye-catching projection? The Feynman chip is expected to reach 6000 watts, a figure that raises real questions about cooling technology and whether current infrastructure can even support it.

⬤ Today's chips like Nvidia's H100 and H200 sit comfortably under 1000 watts. But devices rolling out between 2024 and 2026—including B200, Granite Rapids, and MI300X—push noticeably higher as performance demands grow. Later in the decade, platforms like R200, MI450X, and TPU v7 are projected to cross 2000 watts. Then there's Feynman, sitting alone at the far end of the curve near 6000 watts, representing a dramatic jump in compute density and heat output.

⬤ This isn't just about bigger numbers. The shift reflects how AI workloads are evolving—larger models, more complex inference, and specialized architectures that demand more power. For datacenter operators, higher TDP means rethinking everything: cooling systems, power delivery, physical server design. Solutions like immersion cooling and advanced liquid loops are gaining ground, but a multi-kilowatt chip would require engineering breakthroughs at both the silicon and facility level.

⬤ The bigger picture here is that power and thermal limits are becoming the defining constraint in AI hardware. If Feynman or similar chips actually hit 6000 watts, it would mark a turning point—not just technically, but economically. Infrastructure costs would shift, competitive dynamics would change, and future performance gains might depend more on thermal innovation than raw compute power. The roadmap makes one thing clear: watching how the industry handles heat will be just as important as tracking how it handles compute.
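
To give a rough sense of what these TDP figures mean for liquid cooling, the sketch below estimates how much water must pass a chip to carry away its heat, using the standard relation heat = mass flow × specific heat × temperature rise. The 10 K coolant temperature rise and the function name `coolant_flow_l_per_min` are illustrative assumptions, not figures from the UBS roadmap, and real cold-plate designs involve many more factors.

```python
# Back-of-the-envelope coolant flow estimate for a liquid-cooled chip.
# Assumptions (not from the roadmap): pure water coolant, all chip heat
# goes into the coolant, and a 10 K temperature rise across the cold plate.

WATER_CP_J_PER_KG_K = 4186.0    # approximate specific heat of liquid water
WATER_DENSITY_KG_PER_L = 1.0    # approximate density of liquid water


def coolant_flow_l_per_min(heat_watts: float, delta_t_kelvin: float) -> float:
    """Water flow (L/min) needed to absorb heat_watts with a delta_t_kelvin rise."""
    if delta_t_kelvin <= 0:
        raise ValueError("delta_t_kelvin must be positive")
    mass_flow_kg_per_s = heat_watts / (WATER_CP_J_PER_KG_K * delta_t_kelvin)
    return mass_flow_kg_per_s / WATER_DENSITY_KG_PER_L * 60.0


if __name__ == "__main__":
    # TDP levels discussed in the article: under 1000 W, about 2000 W, near 6000 W.
    for tdp_watts in (1000, 2000, 6000):
        flow = coolant_flow_l_per_min(tdp_watts, delta_t_kelvin=10.0)
        print(f"{tdp_watts} W -> {flow:.2f} L/min")
```

Under these assumptions the required flow scales linearly with TDP, so a 6000-watt part needs about six times the water of a 1000-watt part (roughly 8.6 L/min versus 1.4 L/min per chip), before accounting for the many chips in a rack.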

Alex Dudov
