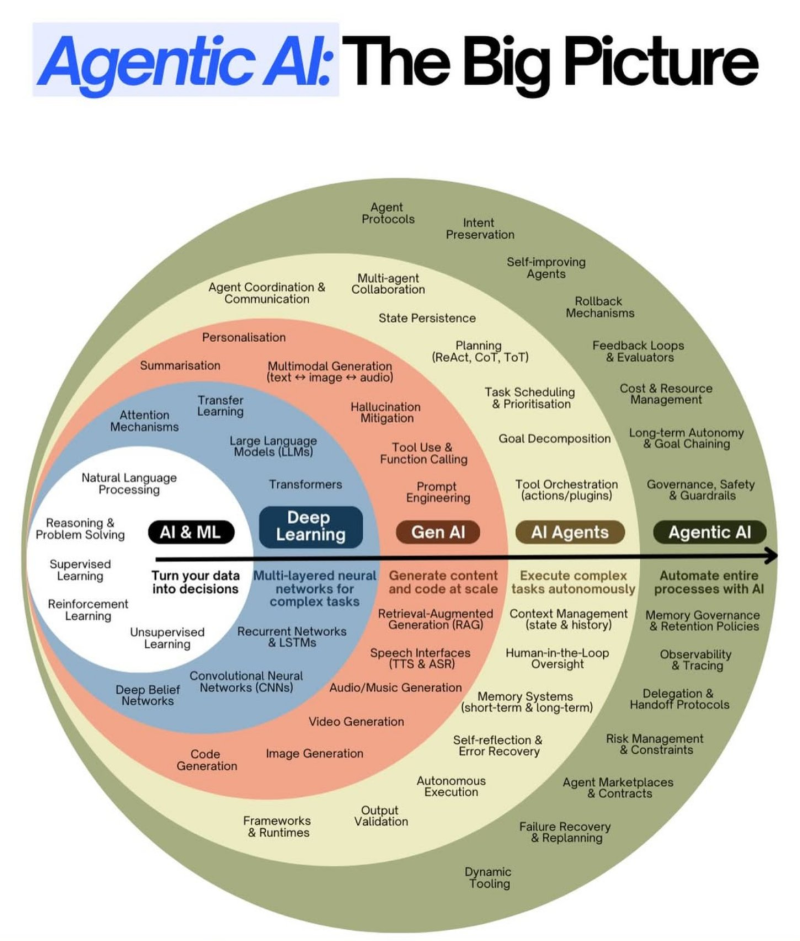

⬤ A widely shared framework is changing how developers think about agentic AI. It argues that autonomy fails when systems lack proper architecture, not when models lack capability. In this view, true agentic AI requires production-grade controls such as rollback mechanisms, cost limits, and inspectable decision logs; a tool-calling LLM on its own is not enough.
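The framework names these controls without prescribing an implementation. The following is a minimal Python sketch of how cost limits, a decision log, and rollback might fit together. It assumes each agent action can be described with an estimated cost and a matching undo step. The names `Action` and `GuardedRunner` and the example actions are hypothetical and serve only to illustrate the idea.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class Action:
    """Hypothetical unit of agent work with an estimated cost and an undo step."""
    name: str
    cost: float
    run: Callable[[], None]
    undo: Callable[[], None]


@dataclass
class GuardedRunner:
    """Illustrative executor enforcing a cost budget, logging every decision,
    and rolling back completed actions when something goes wrong."""
    budget: float
    spent: float = 0.0
    log: List[Dict[str, Any]] = field(default_factory=list)

    def execute(self, actions: List[Action]) -> bool:
        done: List[Action] = []
        for action in actions:
            # Cost limit: block any step that would push spending past the budget.
            if self.spent + action.cost > self.budget:
                self.log.append({"action": action.name, "decision": "blocked", "reason": "budget"})
                self._rollback(done)
                return False
            try:
                action.run()
            except Exception as exc:
                # Failure: record why, then undo everything completed so far.
                self.log.append({"action": action.name, "decision": "failed", "reason": str(exc)})
                self._rollback(done)
                return False
            self.spent += action.cost
            done.append(action)
            self.log.append({"action": action.name, "decision": "executed", "cost": action.cost})
        return True

    def _rollback(self, done: List[Action]) -> None:
        # Undo in reverse order. Money already spent stays counted in `spent`.
        for action in reversed(done):
            action.undo()
            self.log.append({"action": action.name, "decision": "rolled_back"})


# Usage: the third action would exceed the budget, so the first two are undone.
state: List[str] = []
actions = [
    Action("create_branch", 1.0, lambda: state.append("branch"), lambda: state.remove("branch")),
    Action("write_file", 2.0, lambda: state.append("file"), lambda: state.remove("file")),
    Action("deploy", 5.0, lambda: state.append("deploy"), lambda: state.remove("deploy")),
]
runner = GuardedRunner(budget=5.0)
ok = runner.execute(actions)
print(ok, state, [entry["decision"] for entry in runner.log])
```

The log is a plain list of dictionaries, so it can be inspected after a run or serialized for auditing. A production system would persist it outside the process.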

⬤ The framework starts with machine learning and deep learning: systems that recognize patterns and make predictions from data. Generative AI sits above this layer, producing text, code, images, and video and handling search and speech tasks. These layers are powerful but passive, generating outputs without taking independent action.

⬤ AI agents occupy the next level, turning model responses into executable steps. They plan workflows, call external tools, maintain context across interactions, and pause for human approval when needed. Agents alone, however, don't guarantee autonomy. The final layer, agentic AI, adds the infrastructure that makes unattended operation possible: hard constraints on behavior, memory systems that require user consent, audit trails, automated recovery after failures, and clear handoff protocols between agents.
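To make the difference between "pausing for approval" and "hard constraints" concrete, here is a minimal Python sketch. It assumes a plan is a list of tool calls. An allowlist acts as a hard constraint, and steps flagged as sensitive wait for a human decision before they run. The names `Step`, `RunResult`, `run_plan`, and the tools `search` and `send_email` are hypothetical. The sketch leaves out consent-based memory and inter-agent handoff.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set


@dataclass
class Step:
    """Hypothetical plan step: which tool to call, with what arguments."""
    tool: str
    args: Dict[str, str]
    needs_approval: bool = False


@dataclass
class RunResult:
    status: str        # "completed", "paused", or "rejected"
    next_index: int    # index to resume from when paused
    audit: List[str] = field(default_factory=list)


def run_plan(
    steps: List[Step],
    tools: Dict[str, Callable[..., None]],
    allowed: Set[str],
    approve: Callable[[Step], bool],
    start: int = 0,
) -> RunResult:
    audit: List[str] = []
    for i in range(start, len(steps)):
        step = steps[i]
        # Hard constraint: tools outside the allowlist are never executed.
        if step.tool not in allowed:
            audit.append(f"{i}:{step.tool}:rejected")
            return RunResult("rejected", i, audit)
        # Human-in-the-loop: sensitive steps stop the run until approved.
        if step.needs_approval and not approve(step):
            audit.append(f"{i}:{step.tool}:paused")
            return RunResult("paused", i, audit)
        tools[step.tool](**step.args)
        audit.append(f"{i}:{step.tool}:done")
    return RunResult("completed", len(steps), audit)


# Usage: the email step pauses until a reviewer approves, then resumes.
sent: List[tuple] = []
tools = {
    "search": lambda query: sent.append(("search", query)),
    "send_email": lambda to: sent.append(("email", to)),
}
plan = [
    Step("search", {"query": "open invoices"}),
    Step("send_email", {"to": "finance@example.com"}, needs_approval=True),
]
allowed = {"search", "send_email"}

first = run_plan(plan, tools, allowed, approve=lambda s: False)
second = run_plan(plan, tools, allowed, approve=lambda s: True, start=first.next_index)
print(first.status, second.status, first.audit + second.audit)
```

Returning a resumable index rather than blocking the thread is one way to let a human review happen asynchronously. The audit strings are deliberately simple and stand in for a structured, persisted trail.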

⬤ This positioning treats autonomy as an engineering challenge rather than a model upgrade. Under the framework, systems without governance, inspection tools, or failure recovery don't qualify as truly agentic, no matter how advanced the underlying LLM is. It reflects a growing industry consensus that reliability and operational safety matter as much as raw capability when deploying autonomous AI at scale.

Sergey Diakov