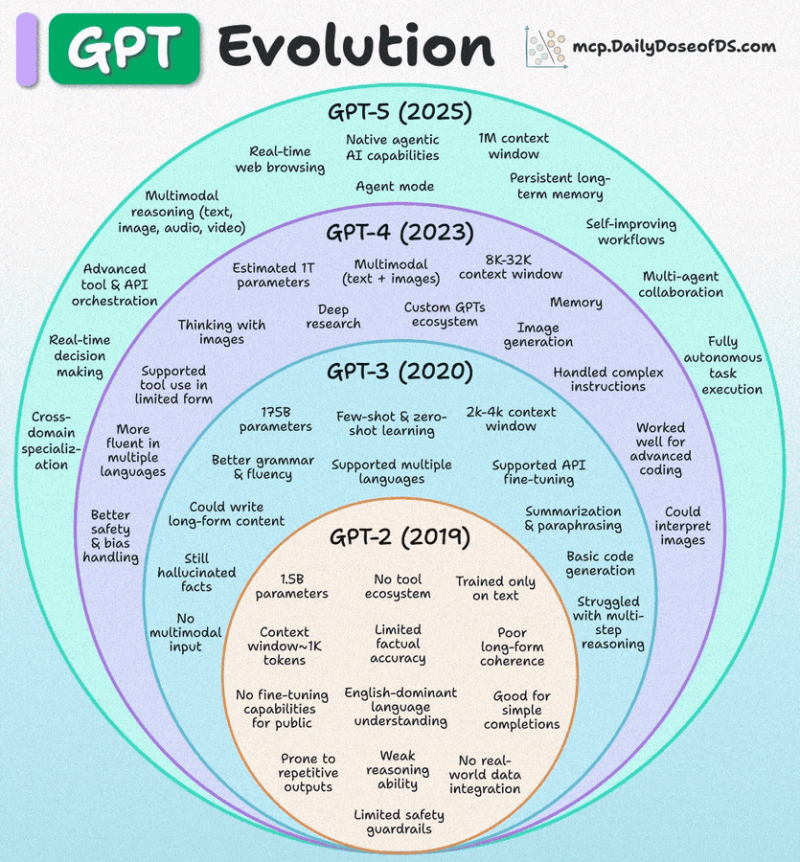

⬤ A new visual breakdown shows how GPT models advanced from GPT-2 in 2019 to GPT-5 in 2025, mapping out six years of progress in AI capabilities. The chart compares each generation side by side, tracking improvements in context size, multimodal features, reasoning depth, and autonomous operation.

⬤ Back in 2019, GPT-2 handled only text, with a small context window of about 1,000 tokens, basic accuracy, and minimal safety features. In 2020, GPT-3 made a large jump in scale, bringing smoother language handling, multilingual support, and few-shot learning: the ability to pick up a task from just a few examples in the prompt. It also added summarization, paraphrasing, and early API fine-tuning options.

⬤ GPT-4 arrived in 2023 as a breakthrough release. It could process both text and images, and its context window grew to between 8,000 and 32,000 tokens, depending on the variant. The model got better at following complex instructions, conducting research, generating images, and remembering past interactions. It also kicked off a wave of custom GPTs and gave users access to a wider set of tools.

⬤ GPT-5 in 2025 goes further, with built-in agentic AI, live web browsing, persistent long-term memory, and a context window of up to 1 million tokens. It supports multi-agent collaboration, self-improving workflows, and fully autonomous task handling. Taken together, the chart shows how GPT went from simple text generation to AI systems that can reason, coordinate, and make real-time decisions.

Alex Dudov
