⬤ InternLM rolled out ARM-Thinker, a fresh agentic reward model framework built to make multimodal AI systems more reliable and accurate. The system lets models follow a "Think–Act–Verify" process, tapping into tools like image cropping and document retrieval before locking in answers. The launch signals growing momentum around architectures that level up reasoning quality in advanced vision–language work.

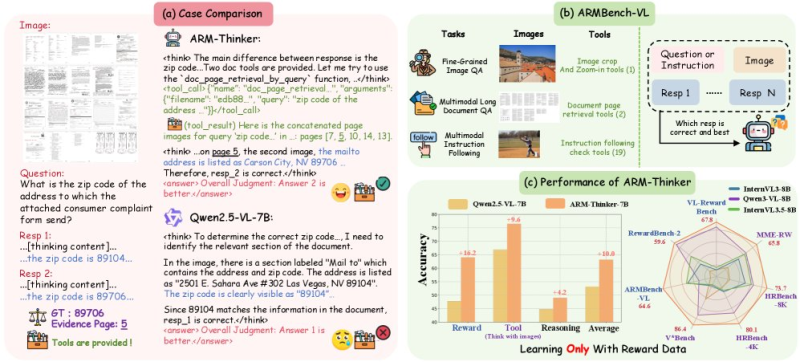

⬤ The framework runs through an iterative cycle where the model chunks down tasks, pulls supporting evidence, and double-checks intermediate results. ARM-Thinker's edge shows up clearly in side-by-side tests—it nails a ZIP code from messy document pages by firing up retrieval tools, while baseline models miss the mark. ARMbench-VL evaluation data reveals stronger performance across fine-grained image QA, long-document queries, and multimodal instruction tasks. The numbers show several-point accuracy bumps over Qwen2.5-VL-7B, especially in reward-driven and tool-assisted reasoning scenarios.

⬤ Performance charts spotlight ARM-Thinker's wins in reward alignment, tool usage, and structured reasoning. Radar plots comparing multiple models show how ARM-Thinker pulls ahead in ARMbench scores and other multimodal benchmarks, backing InternLM's claim that weaving tools directly into the reasoning loop cuts down hallucinations. Retrieval-based verification—instead of leaning only on implicit model inference—delivers more grounded outputs and steadier answers across complex instructions and document-heavy queries.

⬤ ARM-Thinker reflects a wider move toward agentic, verifiable AI systems ready for increasingly demanding multimodal work. As companies deploy AI for tasks needing reliability, traceability, and detailed reasoning, frameworks packing structured tool use and reward-trained behavior are set to gain traction. InternLM's advances show how innovation in model workflows and training strategies keeps reshaping the competitive AI landscape.

Peter Smith

Peter Smith

Peter Smith

Peter Smith