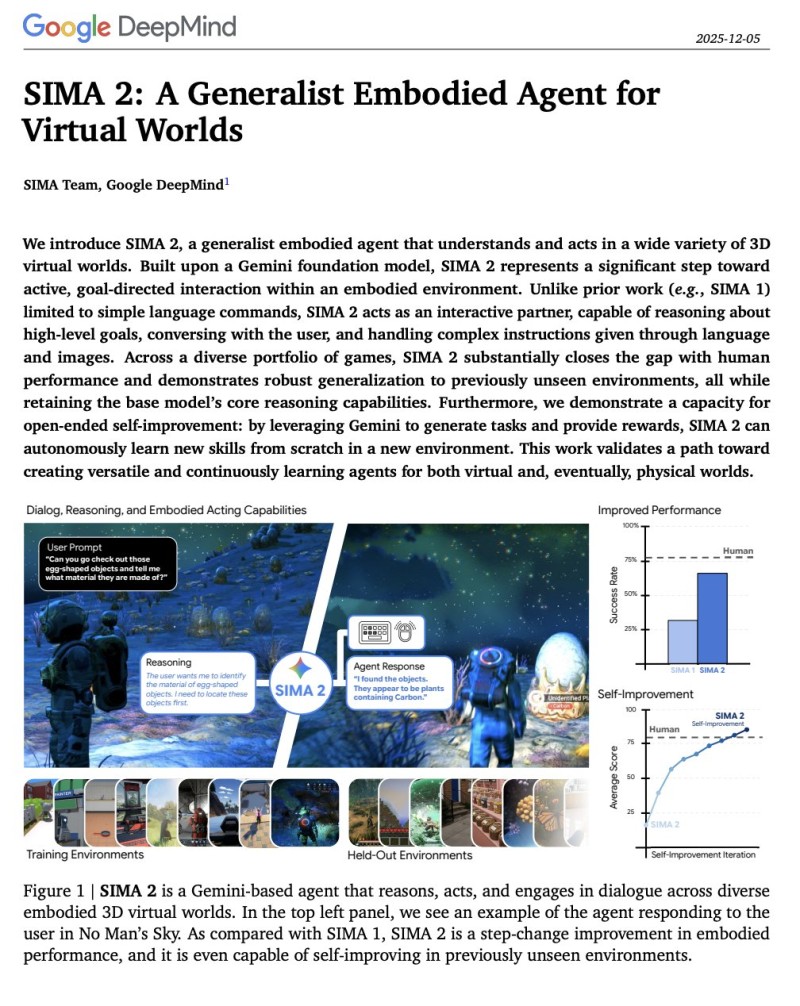

⬤ Google DeepMind has introduced SIMA 2, its next-generation embodied AI agent designed to operate across a wide range of virtual 3D environments. Built on the Gemini foundation model, SIMA 2 interprets language and images, reasons about goals, and takes actions inside complex interactive worlds. Where SIMA 1 mostly followed pre-set commands, SIMA 2 reasons about what it is asked to do, holds conversations, navigates spaces, and explores game environments independently.

⬤ The new system shows a major jump in performance and adaptability. In games like No Man's Sky, the agent interprets user requests, identifies objects, infers what they do, and responds with actions that fit the context. According to the reported performance data, SIMA 2 achieves much higher task success rates than SIMA 1 and comes close to human performance, while staying consistent across both familiar training environments and completely new ones.

⬤ What really stands out is SIMA 2's ability to teach itself. The agent generates its own tasks, assigns its own rewards, and improves through trial and error in unfamiliar settings. Performance tracking shows its average score climbing steadily over repeated rounds of self-improvement. This means SIMA 2 can pick up new skills from scratch without extra human input or guidance, a genuine breakthrough for general-purpose embodied AI.

⬤ SIMA 2's launch shows Google DeepMind doubling down on building smarter, more adaptable AI systems that go beyond basic language tasks. With better reasoning, stronger generalization, and genuine autonomous learning, SIMA 2 points toward AI agents that can function in virtual spaces now and in real-world applications down the line. These advances could shape future development across gaming, simulation, robotics, and interactive digital platforms as embodied AI keeps evolving alongside large multimodal models.

Saad Ullah
