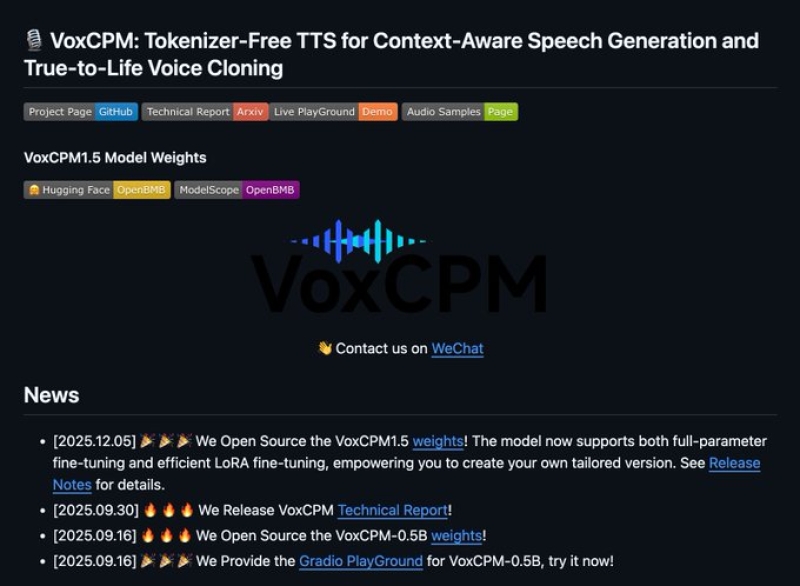

⬤ OpenBMB has open-sourced the VoxCPM model weights, bringing a fresh take on text-to-speech by ditching tokenization entirely. The model supports real-time streaming synthesis, voice cloning, and LoRA fine-tuning, producing high-quality speech straight from continuous acoustic representations. Rather than breaking audio down into discrete tokens, VoxCPM generates speech in continuous form to keep natural prosody, pacing, and emotional expression intact.

⬤ VoxCPM runs on an end-to-end diffusion-autoregressive architecture that skips the phoneme and codec-token bottlenecks completely. Cutting out these intermediate steps avoids the artifacts you'd typically hear in token-based TTS models. The system also does zero-shot voice cloning, meaning it captures a speaker's accent, tone, rhythm, and timing from just a few seconds of reference audio without needing any speaker-specific training.

⬤ The model achieves a real-time factor (RTF) of roughly 0.15 on a single NVIDIA RTX 4090, meaning it needs about 0.15 seconds of compute for each second of generated audio, comfortably faster than real time. Streaming inference processes audio chunk by chunk with sub-second latency, making it practical for live applications. LoRA fine-tuning lets users customize voices or styles efficiently without retraining the entire model, cutting down both computational costs and deployment headaches.
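To make the real-time factor figure concrete, here is a minimal Python sketch of the arithmetic. It assumes the usual definition of RTF (synthesis time divided by the duration of the audio produced); the function names and the 10-second clip are illustrative only and are not part of VoxCPM.

```python
def real_time_factor(synthesis_seconds: float, audio_seconds: float) -> float:
    """RTF = time spent generating / duration of the generated audio."""
    if audio_seconds <= 0:
        raise ValueError("audio_seconds must be positive")
    return synthesis_seconds / audio_seconds


def synthesis_time(audio_seconds: float, rtf: float) -> float:
    """Estimated compute time needed to produce `audio_seconds` of speech."""
    return audio_seconds * rtf


# Figure reported for a single RTX 4090; the clip length is a made-up example.
REPORTED_RTF = 0.15
clip_seconds = 10.0

estimated = synthesis_time(clip_seconds, REPORTED_RTF)
speedup = 1.0 / REPORTED_RTF

print(f"{clip_seconds:.0f} s of audio takes about {estimated:.2f} s to generate")
print(f"That is roughly {speedup:.1f}x faster than real time")
```

An RTF below 1.0 means audio is generated faster than it plays back, which is what leaves headroom for chunk-by-chunk streaming.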

⬤ This release matters because it offers a scalable, open-source alternative to traditional token-based text-to-speech systems. Tokenizer-free models like VoxCPM could bring more realism and responsiveness to conversational AI, content creation, accessibility tools, and real-time communication platforms. By combining open access, fast inference, and flexible fine-tuning, VoxCPM points to where speech generation is heading: more natural and more efficient.

Eseandre Mordi
