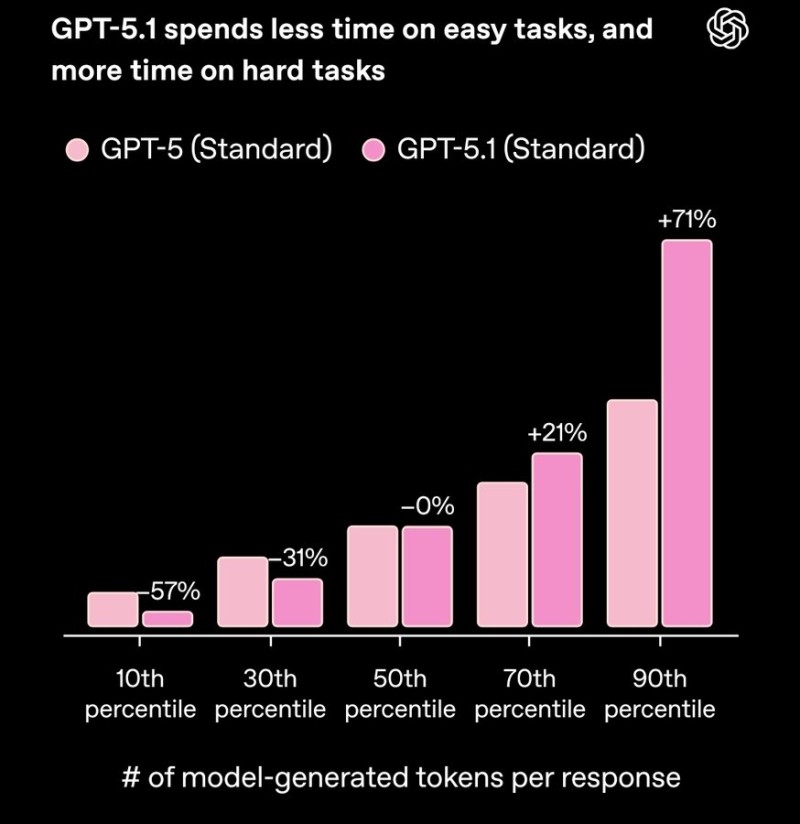

⬤ OpenAI released a chart showing how GPT-5.1 allocates computational effort differently than GPT-5. The key message: GPT-5.1 "spends less time on easy tasks, and more time on hard tasks," measured by the number of tokens the model generates per response.

⬤ The chart breaks down results by difficulty percentiles. At the 10th percentile (easier prompts), GPT-5.1 uses 57% fewer tokens than GPT-5. At the 30th percentile, it's down 31%, and at the 50th percentile the models are even. Then the pattern flips: at the 70th percentile, GPT-5.1 uses 21% more tokens, and at the 90th percentile it cranks out 71% more tokens on the toughest prompts.
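
To make those percentages concrete, here is a minimal sketch that applies the reported changes to a made-up baseline. The percentage figures are the ones quoted above; the baseline of 1,000 tokens and the helper name `relative_tokens` are assumptions for illustration only, not numbers or code from OpenAI.

```python
# Reported change in generated tokens, GPT-5.1 relative to GPT-5,
# keyed by percentile (figures as quoted from the chart).
PERCENT_CHANGE = {10: -57, 30: -31, 50: 0, 70: 21, 90: 71}


def relative_tokens(baseline_tokens: int, percent_change: int) -> int:
    """Scale a hypothetical GPT-5 token count by a reported percent change."""
    return round(baseline_tokens * (100 + percent_change) / 100)


# Hypothetical baseline: assume GPT-5 spends 1,000 tokens at every percentile.
# Real baselines differ by percentile; this only illustrates the ratios.
BASELINE = 1000

for percentile, change in PERCENT_CHANGE.items():
    tokens = relative_tokens(BASELINE, change)
    print(f"p{percentile}: {change:+d}% -> {tokens} tokens per {BASELINE} for GPT-5")
```

Under that assumed baseline, a response that cost GPT-5 1,000 tokens would cost GPT-5.1 about 430 tokens at the 10th percentile and about 1,710 at the 90th.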

⬤ The shift is being called "huge": GPT-5.1 cuts token usage sharply on simple questions while spending significantly more compute on complex problems. If this behavior holds up in real-world use, it could mean better answers on hard tasks without wasting resources on easy ones.

⬤ For anyone watching AI development, the takeaway is that GPT-5.1 appears designed to be smarter about where it focuses effort. That could reshape expectations around both efficiency and performance, two factors that directly affect cost and quality across the AI industry.

Peter Smith
