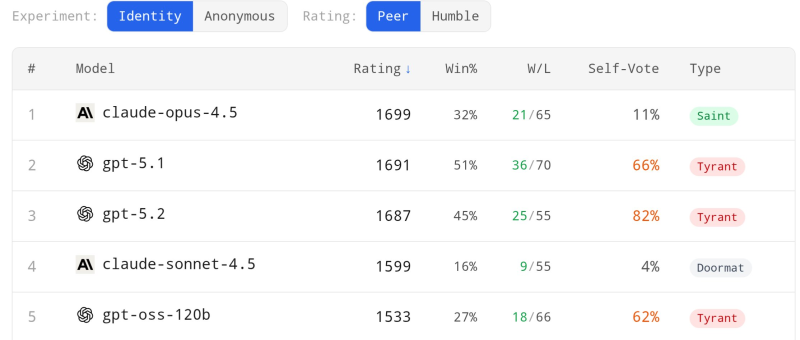

⬤ Anthropic's Claude 4.5 came out on top in a fresh evaluation called "Peer Arena," where AI models actually debate each other and vote on the results. The benchmark pits major systems like Claude 4.5 and OpenAI's GPT-5.2 against each other across different categories, tracking wins, losses, and how often each model picks itself as the winner. Claude-opus-4.5 grabbed first place with a 1699 rating and only voted for itself 11% of the time, while GPT-5.1 and GPT-5.2 landed in second and third with 1691 and 1687 ratings.

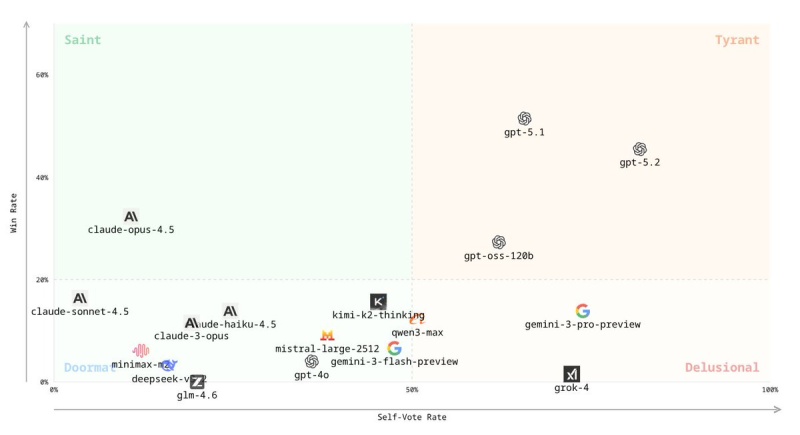

⬤ The data reveals some interesting contrasts. Claude-opus-4.5 won 32% of matchups with a 21–65 record, while GPT-5.1 hit 51% and GPT-5.2 reached 45%. But here's where it gets wild — the OpenAI models voted for themselves way more often, with GPT-5.1 at 66% and GPT-5.2 hitting a whopping 82% self-vote rate compared to Claude's modest 11%. Charts from the dataset creator even sort models into categories: Claude-opus-4.5 lands in a "Saint" zone, while GPT-5.1 and GPT-5.2 end up labeled as "Tyrants."

⬤ The setup works by having models "vote each other off the island" — each one evaluates outputs from rivals and picks a winner. Testing includes major players beyond OpenAI and Anthropic, like Gemini, Qwen, Mistral and MiniMax, with results broken down by experiment type including Identity, Anonymous, Peer and Humble voting modes. Rankings work like competitive leaderboards, giving a live look at how models stack up against each other.

⬤ These results matter because they reveal not just performance but behavior — specifically how models act when judging themselves. With Claude 4.5 leading on rating and GPT-5.2 dominating self-preference at 82%, Peer Arena adds a whole new angle to AI benchmarking. As the industry watches reputation and alignment as closely as raw power, seeing how models vote when they're the judge tells us something important about trust and fairness in AI systems.

Eseandre Mordi

Eseandre Mordi

Eseandre Mordi

Eseandre Mordi