⬤ Alibaba has unveiled the Qwen3.5-397B-A17B language model, the first open-weight release in its Qwen3.5 series. According to the Qwen team's announcement, the model is built specifically for real-world agent applications and supports multimodal input natively.

⬤ What makes this release particularly interesting is the architecture under the hood. The model combines hybrid linear attention with a sparse mixture-of-experts design and was trained with large-scale reinforcement learning. The reported gains are substantial: Qwen3.5-397B-A17B delivers 8.6× to 19.0× higher decoding throughput than Qwen3-Max. It also supports 201 languages and dialects, giving it broad global coverage. The design keeps real-world deployment efficiency in mind, balancing model quality against practical throughput demands.
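The announcement names the techniques but not their internals. Following the Qwen naming convention, "397B-A17B" indicates about 397B total parameters with roughly 17B active per token, which is what a sparse mixture-of-experts layer makes possible: a router sends each token to only a few experts. The sketch below is a generic, pure-Python illustration of top-k expert routing, not Alibaba's implementation; the linear router, the toy experts, the expert count, and the `top_k` value are all assumptions chosen for readability.

```python
import math


def softmax(xs):
    # Numerically stable softmax over a list of floats.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def moe_forward(x, router_weights, experts, top_k):
    """Route one token vector x to its top_k experts (hypothetical toy router).

    x: list[float]; router_weights: one weight vector per expert;
    experts: callables mapping list[float] -> list[float].
    """
    # Router logit per expert: dot product of the token with that expert's router vector.
    logits = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in router_weights]
    # Sparse activation: keep only the top_k highest-scoring experts.
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:top_k]
    # Renormalize gate weights over the selected experts only.
    gates = softmax([logits[i] for i in top])
    out = [0.0] * len(x)
    for g, i in zip(gates, top):
        y = experts[i](x)  # only the selected experts are evaluated
        out = [o + g * y_j for o, y_j in zip(out, y)]
    return out, top


# Toy setup: 4 experts, each simply scales its input by (index + 1).
experts = [lambda v, s=i + 1: [s * t for t in v] for i in range(4)]
router_weights = [[1.0, 0.0], [0.0, 1.0], [2.0, 0.0], [0.0, -1.0]]
out, top = moe_forward([1.0, 0.5], router_weights, experts, top_k=2)

# Fraction of parameters active per token implied by the model name (17B of 397B).
active_fraction = 17 / 397
print(top, [round(v, 4) for v in out], f"{active_fraction:.1%}")
```

Here only two of the four toy experts run for the token, mirroring how a sparse model can hold many parameters while computing with a small fraction of them (about 4.3% for 17B of 397B).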

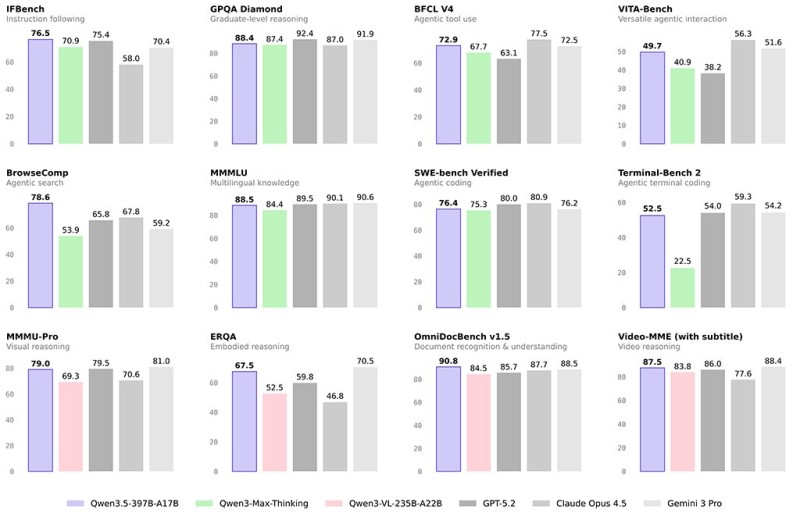

⬤ Alibaba's benchmark testing pits Qwen models against heavyweights like GPT-5.2, Claude Opus 4.5, and Gemini 3 Pro across multiple evaluation categories. The tests cover instruction following (IFBench), reasoning (GPQA Diamond), agentic coding (BFCL V4), terminal coding, multilingual knowledge (MMLU), multimodal understanding (MMMU-Pro), document recognition (OmniDocBench), and video reasoning (Video-MME). The comparative chart shows how Qwen stacks up across these different dimensions.

⬤ Perhaps the most developer-friendly aspect of this release is its Apache-2.0 license. Developers can freely download, modify, and integrate the weights into their own applications, including commercial ones, with few obligations beyond preserving the license and attribution notices. It's a notable move in an increasingly competitive landscape where AI providers are balancing performance with accessibility.

⬤ This release reflects the broader industry trend toward making powerful multimodal AI systems more widely available. By opening up access to a model of this scale, Alibaba is positioning itself as a serious contender in the race for developer adoption and real-world deployment flexibility.

Saad Ullah