⬤ Google's Gemini 3 Pro Preview and Anthropic's Claude Opus 4.5 lead the latest Design Arena rankings for frontend development. Both models are outpacing competitors in interface design and user-facing development tasks, showing clear advantages in creative workflows.

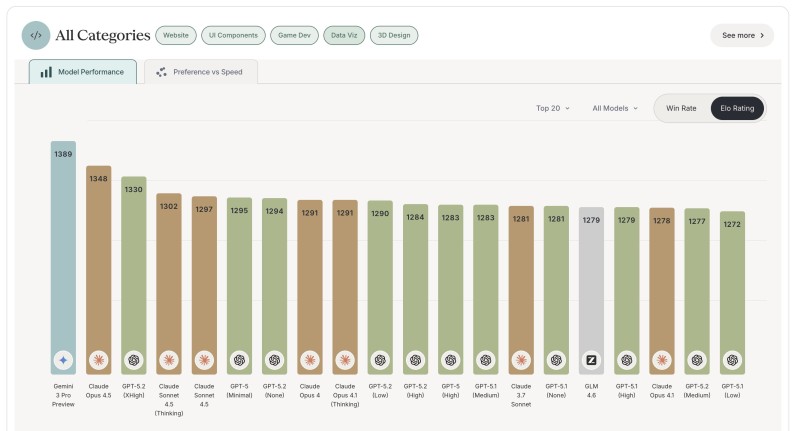

⬤ Current rankings place Gemini 3 Pro Preview at the top with the highest overall Elo rating. Claude Opus 4.5 sits in second place, while OpenAI's newly released GPT-5.2 (xHigh) claims third. The tight Elo score clustering among top models signals fierce competition, with several systems now delivering similar performance across technical and creative domains.
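
To make "tight Elo clustering" concrete, here is a minimal sketch of how a rating gap translates into an expected head-to-head preference rate. It assumes the conventional Elo formula with a 400-point scale; Design Arena's exact rating method is not described here, and the base rating and gaps below are hypothetical values chosen for illustration, not figures from the leaderboard.

```python
def expected_score(rating_a: float, rating_b: float, scale: float = 400.0) -> float:
    """Conventional Elo expected score of A against B (assumed formula)."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / scale))


if __name__ == "__main__":
    base = 1300.0  # hypothetical baseline rating, not a Design Arena figure
    for gap in (0, 10, 25, 50, 100):  # hypothetical rating gaps
        p = expected_score(base + gap, base)
        print(f"gap {gap:>3} points -> higher-rated model preferred about {p:.1%} of the time")
```

Under this assumption, a gap of a few dozen points corresponds to a preference rate only modestly above 50%, which is why closely clustered ratings suggest near-parity in head-to-head comparisons.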

⬤ GPT-5.2 (xHigh) is showing particular strength in specialized areas, ranking first in Game Development Arena and landing top-three spots in both the Website and Data Visualization categories. These results highlight the model's versatility, combining visual quality with structured reasoning. The improvements come from significant engineering work focused on refined layouts, consistent styling, and polished final outputs.

⬤ The rankings reveal that AI leadership is now splitting by use case rather than being dominated by one system. Gemini 3 Pro Preview excels in frontend tasks, Claude Opus 4.5 maintains consistent high performance, and GPT-5.2 demonstrates strong multi-category capabilities. For teams evaluating models for design, development, and visualization work, these benchmarks provide clear guidance on which systems currently deliver production-quality results.

Saad Ullah